Solution

Runpod makes GPU infrastructure simple.

Runpod is the end-to-end AI cloud that simplifies building and deploying models.

Go from idea to deployment in a single flow.

Runpod simplifies every step of your workflow—so you can build, scale, and optimize without ever managing infrastructure.

No outages. No worries.

Runpod handles failovers, ensuring your workloads run smoothly—even when resources don’t.

Managed orchestration.

Runpod Serverless queues and distributes tasks seamlessly, saving you from building orchestration systems.
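To make the "queue and distribute" idea concrete, here is a minimal sketch of the pattern a managed service would otherwise handle for you. It uses only the Python standard library. It is not Runpod's implementation or API: `run_workers`, `handler`, and `num_workers` are hypothetical names chosen for illustration.

```python
import queue
import threading


def run_workers(tasks, handler, num_workers=3):
    """Distribute tasks across worker threads through a shared queue.

    Hypothetical illustration only; not Runpod's API.
    Returns results in the same order as the input tasks.
    """
    pending = queue.Queue()
    for index, task in enumerate(tasks):
        pending.put((index, task))

    results = [None] * len(tasks)

    def worker():
        # Each worker pulls the next available task until the queue is empty.
        while True:
            try:
                index, task = pending.get_nowait()
            except queue.Empty:
                return
            results[index] = handler(task)

    threads = [threading.Thread(target=worker) for _ in range(num_workers)]
    for thread in threads:
        thread.start()
    for thread in threads:
        thread.join()
    return results
```

A production system would also need retries, timeouts, and failure handling, which is the work a managed queue is meant to take off your hands.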

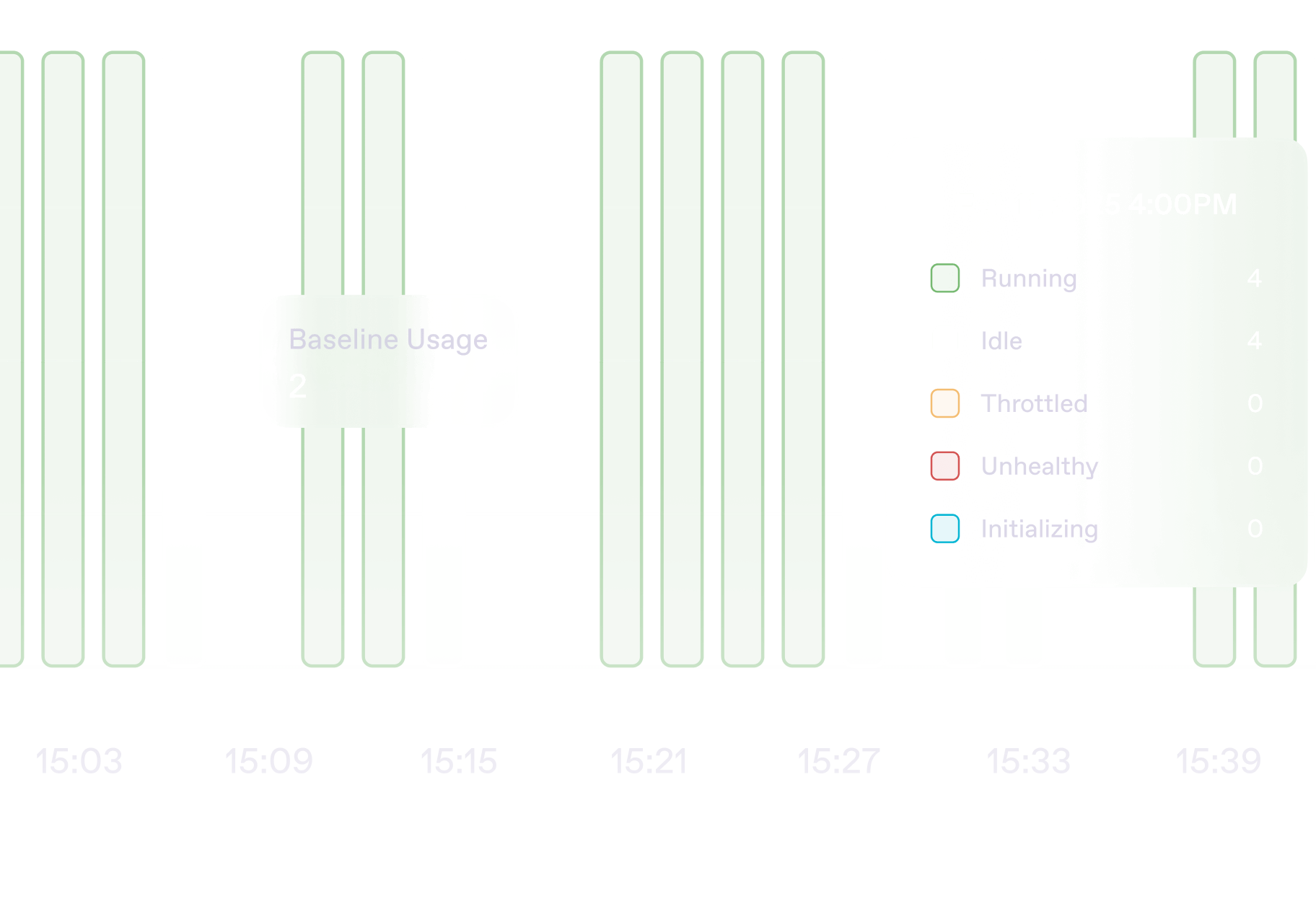

Real-time logs.

Get real-time logs, monitoring, and metrics—no custom frameworks required.

Features

Scale with Serverless when you're ready for production.

Powerful compute, effortless deployment.

Autoscale in seconds

Instantly respond to demand with GPU workers that scale from 0 to 1000s in seconds.
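As a rough illustration of scaling from zero with demand, the sketch below derives a target worker count from queue depth and clamps it between a minimum and a maximum. The function name, parameters, and defaults are assumptions made for this example. Runpod's actual scaling policy is not described here and may differ.

```python
import math


def desired_workers(queued_requests, per_worker_concurrency=1,
                    min_workers=0, max_workers=1000):
    """Return a target worker count for the current queue depth.

    Hypothetical policy for illustration: one worker per
    `per_worker_concurrency` queued requests, clamped to the given bounds.
    """
    if per_worker_concurrency < 1:
        raise ValueError("per_worker_concurrency must be at least 1")
    needed = math.ceil(queued_requests / per_worker_concurrency)
    return max(min_workers, min(max_workers, needed))
```

Setting `min_workers` above zero in this sketch corresponds to keeping some workers always on, while `min_workers=0` lets the pool shrink to nothing when the queue is empty.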

Zero cold-starts with active workers

Always-on GPUs for uninterrupted execution.

<200ms cold-start with FlashBoot

Lightning-fast scaling with sub-200ms cold-starts.

Persistent data storage

Run full AI pipelines—data ingestion to deployment—without egress fees on our S3 compatible storage.

Case Studies

Loved by developers.

But don’t just take it from us.

Impact

Get more done for every dollar.

More throughput, faster scaling, and higher efficiency—with Runpod, every dollar works harder.

This graphic shows tokens per dollar

>500 million

Serverless requests monthly

57%

Average reduction in setup time

Unlimited

Data processed with zero ingress/egress fees

Enterprise

Enterprise-grade from day one.

Built for scale, secured for trust, and designed to meet your most demanding needs.

99.9% uptime

Run critical workloads with confidence, backed by industry-leading reliability.

Secure by default

We are in the process of obtaining SOC2, HIPAA and GDPR certifications.

Scale to hundreds of GPUs

Adapt instantly to demand with infrastructure that grows with you.
