We're excited to announce that our partner DeepCogito has just released Cogito v2, a groundbreaking collection of hybrid reasoning, multimodal models that represents a fundamental shift in how we approach AI intelligence improvements. This isn't just another model release—it's a proof of concept for scalable superintelligence.

While most recent advances in reasoning models have focused on scaling up thinking tokens, essentially making models "think longer" to solve problems, Cogito v2 takes a radically different approach. Instead of brute-force searching through longer reasoning chains, these models develop better intuition about which reasoning paths to take.

The results speak for themselves: Cogito models achieve equivalent performance to leading models while using 60% shorter reasoning chains. This isn't just an efficiency gain; it represents a fundamental breakthrough in how we build more intelligent systems. There has been a deep focus across the field on making models smarter, but not necessarily faster or more usable. Larger models are great, but compute time definitely becomes an issue, especially with dense models. For that reason, it shouldn’t come as a surprise that so many large model releases are strictly MoE. What DeepCogito has accomplished is a model that not only provides the quality of answer that has become expected in the field, but does it faster and in fewer tokens than comparably sized models, resulting in direct cost savings when using time-based billing (such as serverless).
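To make the billing argument concrete, here is a back-of-the-envelope sketch of how a 60% shorter reasoning chain could show up on a time-billed invoice. Only the 60% figure comes from the text above. The token counts, throughput, and per-second price are made-up illustrative values, and the model assumes generation time scales linearly with output tokens (it ignores prompt processing and cold starts).

```python
def generation_cost(num_tokens: int, tokens_per_second: float, price_per_second: float) -> float:
    """Cost of generating num_tokens when billed by wall-clock time."""
    seconds = num_tokens / tokens_per_second
    return seconds * price_per_second


# Hypothetical numbers for illustration only, not measurements.
BASELINE_REASONING_TOKENS = 5_000  # thinking tokens used by a comparison model
ANSWER_TOKENS = 500                # final answer tokens, assumed identical for both
TOKENS_PER_SECOND = 50.0           # assumed decode throughput
PRICE_PER_SECOND = 0.002           # assumed serverless price in dollars

# "60% shorter reasoning chains" applied to the baseline reasoning length.
shorter_reasoning_tokens = round(BASELINE_REASONING_TOKENS * (1 - 0.60))

baseline_cost = generation_cost(
    BASELINE_REASONING_TOKENS + ANSWER_TOKENS, TOKENS_PER_SECOND, PRICE_PER_SECOND
)
shorter_cost = generation_cost(
    shorter_reasoning_tokens + ANSWER_TOKENS, TOKENS_PER_SECOND, PRICE_PER_SECOND
)
savings = 1 - shorter_cost / baseline_cost

print(f"baseline: ${baseline_cost:.2f}, shorter chain: ${shorter_cost:.2f}, savings: {savings:.0%}")
```

Under these assumptions the overall savings come out somewhat below 60%, because the final answer tokens are not shortened; the longer the reasoning is relative to the answer, the closer the savings get to the full 60%.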

What makes this particularly valuable is that you get the same quality of reasoning with less computational cost. This means:

The Cogito v2 release includes four models designed to meet different computational needs, all released under an open license:

Small Models: 70B Dense and 109B MoE

Large Models: 405B Dense and 671B MoE

All models can answer directly or apply reasoning before answering.

The secret behind Cogito v2's success lies in its approach to iterative policy improvement. Rather than simply scaling inference-time reasoning, the models use a two-step process inspired by successful narrow AI systems like AlphaGo:

This creates a virtuous cycle where each iteration makes the model's base intelligence stronger, rather than just making it search longer. (After all, there are diminishing returns to giving more tokens to the thought process; you aren’t going to have the model find the Unified Field Theory of physics just because you gave it a million-token thinking budget.) Because of this cycle, the model develops better "intuition" about which reasoning trajectories are most promising, leading to more efficient and effective problem-solving.

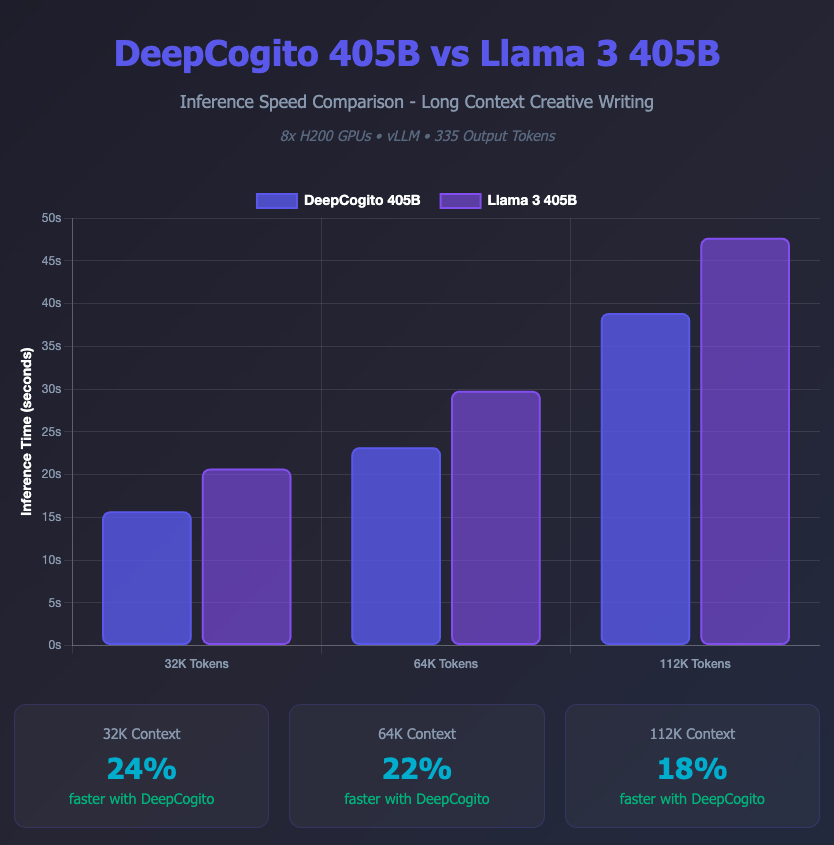

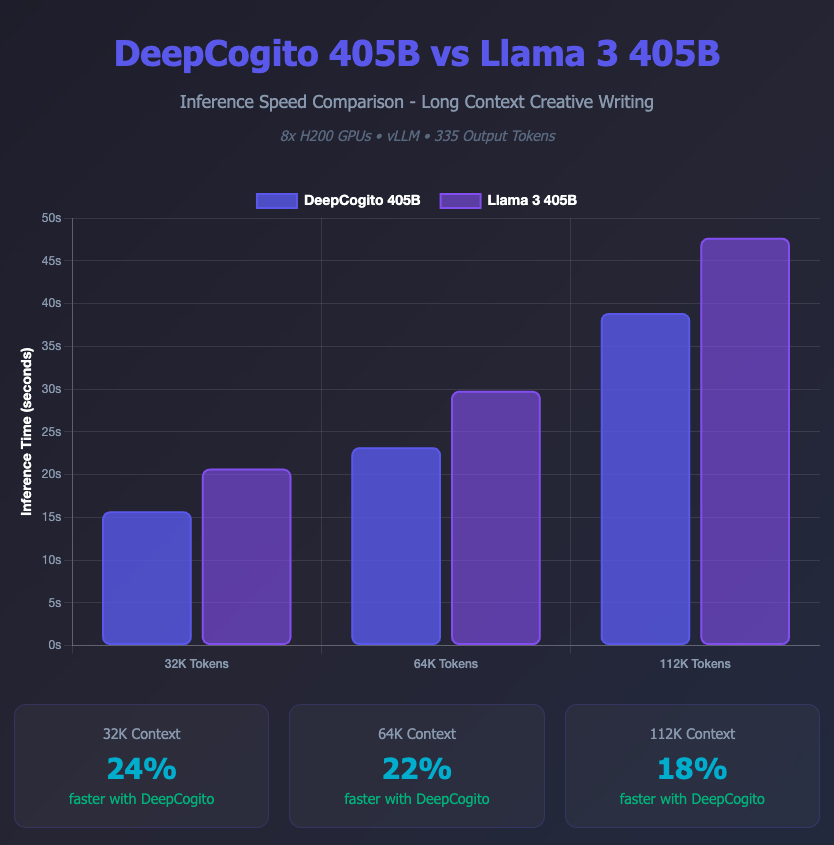

To put this to the test, we pitted DeepCogito 405b against Llama-3 405b on some very long-context creative writing tasks, using the exact same setup for both (8xH200s, served on vLLM with the exact same configuration). DeepCogito’s model demonstrated significant inference speed improvements, all other things being equal. As stated, this would translate into direct and proportional cost savings over an equally sized dense model in a serverless architecture.

Getting DeepCogito models up and running on Runpod is straightforward since they're built on the standard transformers architecture. This means all your existing inference engines and deployment workflows will work seamlessly with these new models. We have several templates and package deployment options such as vLLM, SGLang, and text-generation-webui; all you need to do is plug in the model you want from the Deep Cogito Hugging Face page and you are good to go.
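Once a vLLM-based deployment is running, it can be queried through vLLM's OpenAI-compatible chat completions route. The sketch below only builds the HTTP request using the Python standard library. The base URL and model ID are placeholders (assumptions, not values from this post), so substitute your own endpoint address and the exact model name from the Deep Cogito Hugging Face page.

```python
import json
import urllib.request


def build_chat_request(
    base_url: str, model: str, prompt: str, max_tokens: int = 512
) -> urllib.request.Request:
    """Build a POST request for an OpenAI-compatible /v1/chat/completions route."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": max_tokens,
    }
    return urllib.request.Request(
        url=f"{base_url.rstrip('/')}/v1/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def send(request: urllib.request.Request) -> str:
    """Send the request and return the assistant's reply text."""
    with urllib.request.urlopen(request) as response:
        body = json.load(response)
    return body["choices"][0]["message"]["content"]


# Placeholder values: replace with your endpoint and the model ID you deployed.
BASE_URL = "http://localhost:8000"       # assumed local vLLM server address
MODEL_ID = "your-org/your-cogito-model"  # hypothetical name, not a real model ID

request = build_chat_request(BASE_URL, MODEL_ID, "Summarize the plot of Hamlet in two sentences.")
# reply = send(request)  # uncomment once the server is reachable
```

A hosted endpoint will typically also require an authorization header; how that is supplied depends on the deployment, so it is left out of this sketch.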

Here are the minimum (8k context max) and recommended (longer context) GPU specs for the suite of models.

For 70B Dense model:

For 109B MoE model:

For 405B Dense model:

For 671B MoE model:
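As a rough sanity check on GPU sizing, the memory needed just to hold the weights can be estimated as parameter count times bytes per parameter. The sketch below is an estimate under stated assumptions, not the spec list for these models: it assumes 16-bit weights (2 bytes per parameter), uses 141 GB as the per-GPU memory of an H200, and ignores KV cache, activations, and framework overhead, all of which grow with context length. For MoE models the total parameter count is used, since all experts need to be resident in memory.

```python
import math

# Parameter counts in billions, taken from the model names above.
MODEL_PARAMS_BILLIONS = {
    "70B Dense": 70,
    "109B MoE": 109,
    "405B Dense": 405,
    "671B MoE": 671,
}

H200_MEMORY_GB = 141  # assumed per-GPU memory; adjust for the GPU you rent


def weight_memory_gb(params_billions: float, bytes_per_param: float = 2.0) -> float:
    """Memory for weights alone: 1e9 params * bytes per param / 1e9 bytes per GB."""
    return params_billions * bytes_per_param


def min_gpus_for_weights(
    params_billions: float, gpu_memory_gb: float, bytes_per_param: float = 2.0
) -> int:
    """Lower bound on GPU count: enough total memory to hold the weights, nothing more."""
    return math.ceil(weight_memory_gb(params_billions, bytes_per_param) / gpu_memory_gb)


for name, params in MODEL_PARAMS_BILLIONS.items():
    gb = weight_memory_gb(params)
    gpus = min_gpus_for_weights(params, H200_MEMORY_GB)
    print(f"{name}: ~{gb:.0f} GB of 16-bit weights, at least {gpus} GPU(s) for weights alone")
```

Treat the result as a floor rather than a recommendation: real deployments need headroom on top of the weights, and longer contexts need more of it.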

Cogito v2 represents more than just another model release—it's a proof of concept that scalable self-improvement in AI systems is not just possible, but practical. By focusing on improving model intelligence rather than just scaling search, this approach could pave the way for the next generation of AI systems.

As these models become available on Runpod's platform, we're excited to see what the community will build with them. The combination of frontier performance, efficient reasoning, and open accessibility creates unprecedented opportunities for innovation.

The path to superintelligence may be closer than we think, and it might be more elegant than we imagined—sometimes the best solution isn't to think longer, but to think better.

Ready to experience DeepCogito's breakthrough reasoning capabilities? Try out Cogito v2 through our public endpoints and see the future of efficient AI in action. Visit our template marketplace to get started in minutes.
