
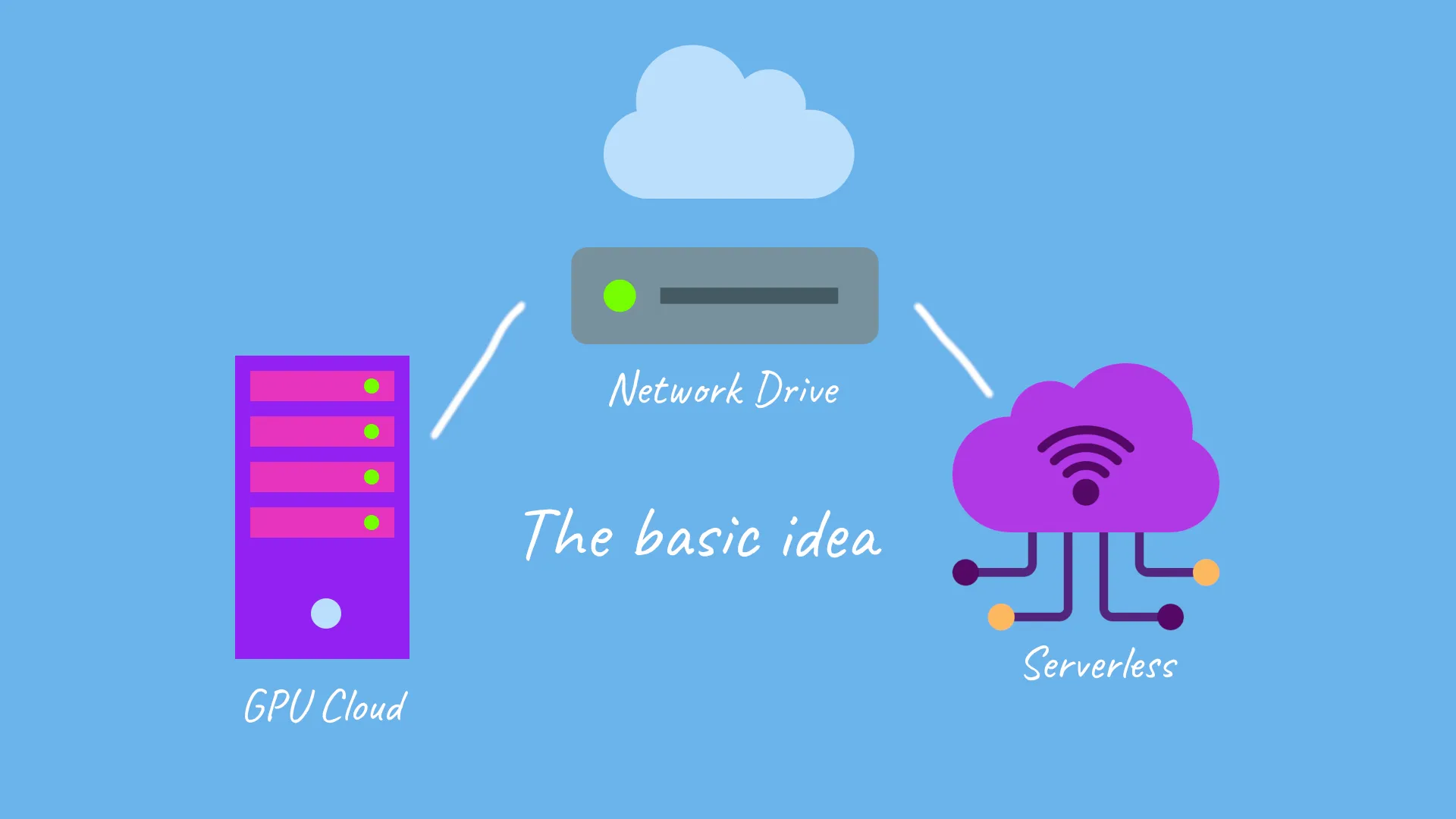

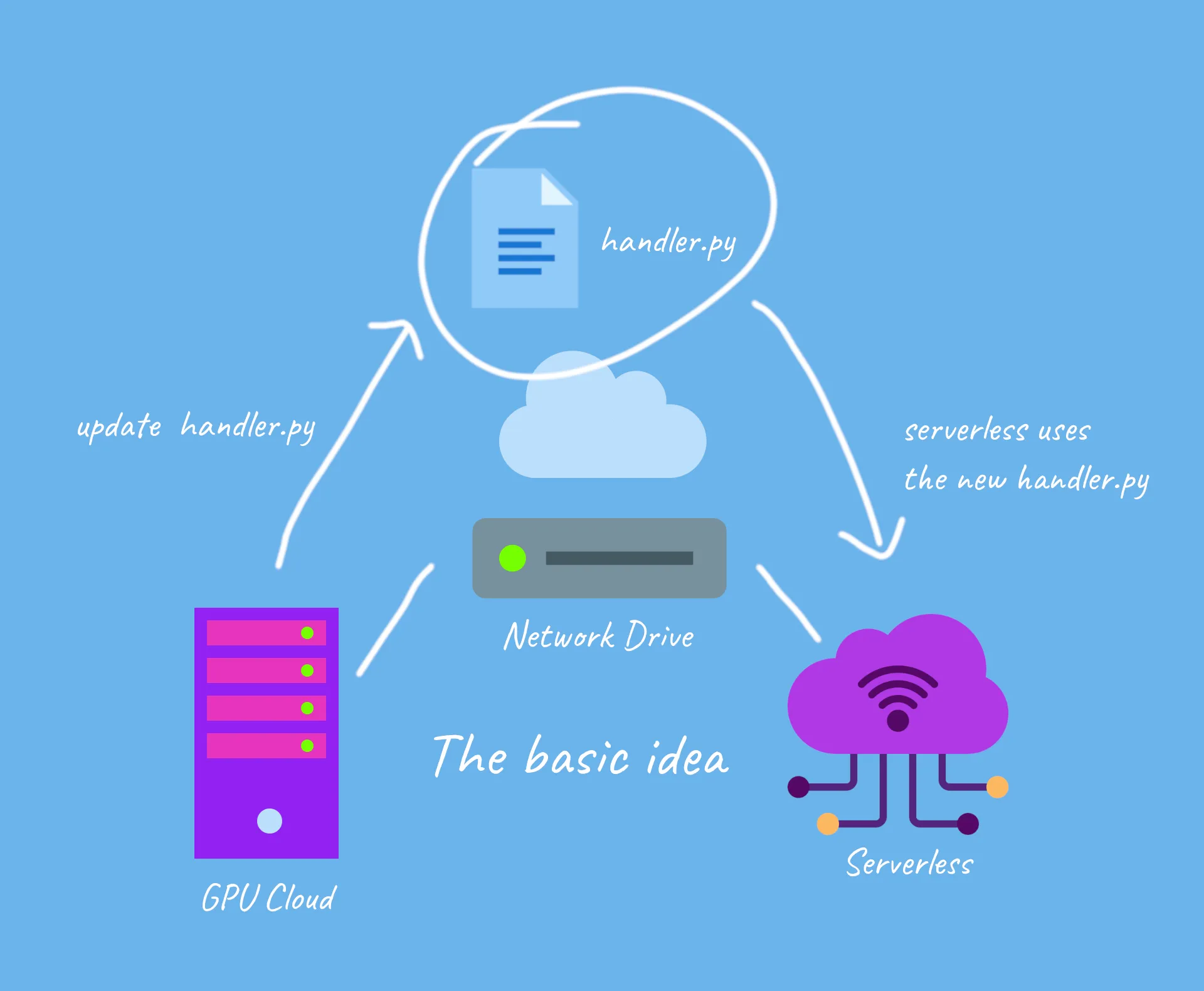

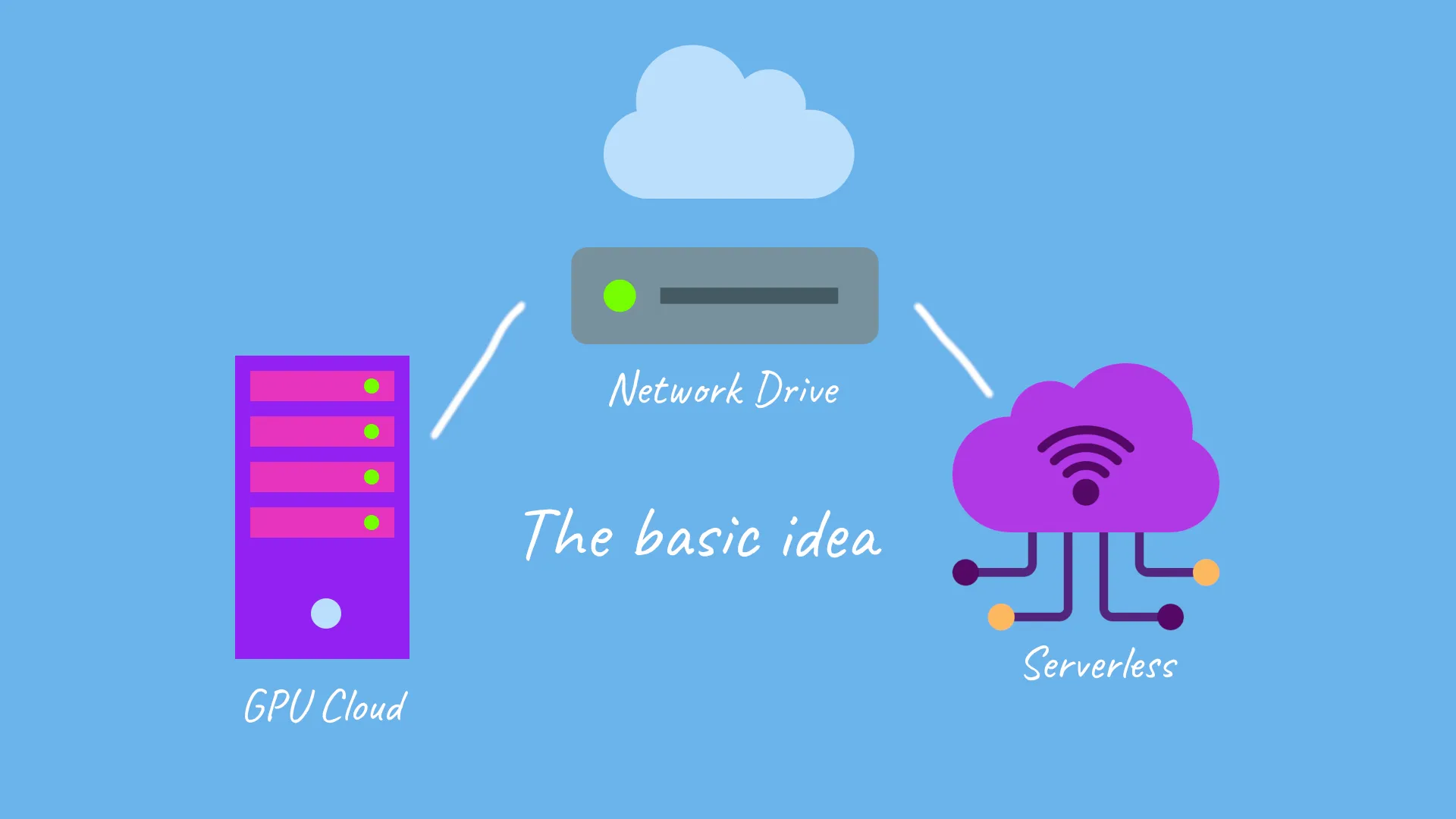

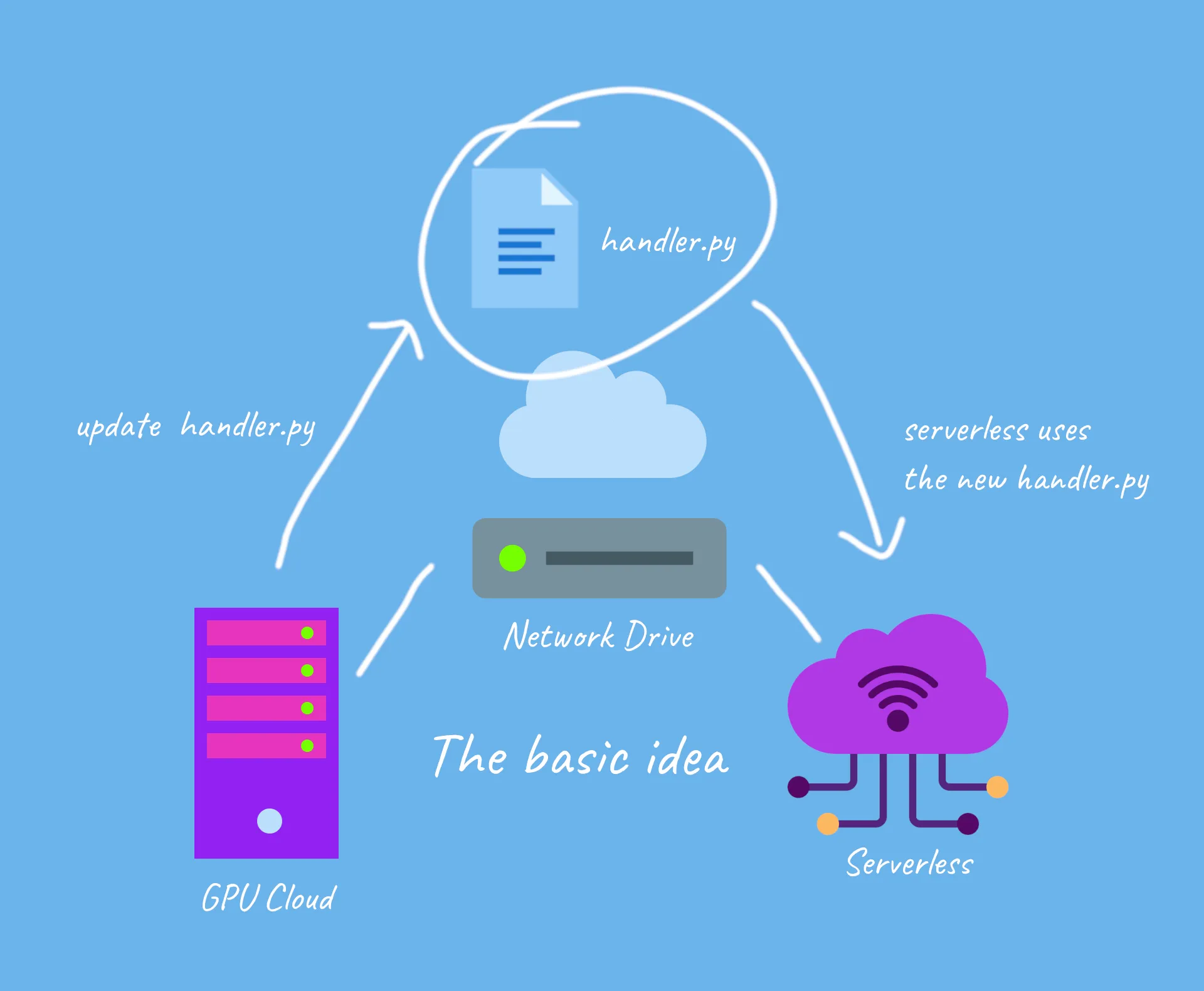

What if I told you that you can now deploy pure Python machine learning models on Runpod with zero stress? Fair warning: this is a bit of a hacky workflow at the moment. We'll be providing better abstractions in the future!

To keep this tutorial simple, I'm going to do everything in the Jupyter interface (for editing Python files). You can follow the same steps in VS Code if that's the coding environment you're more comfortable with.

and for updates

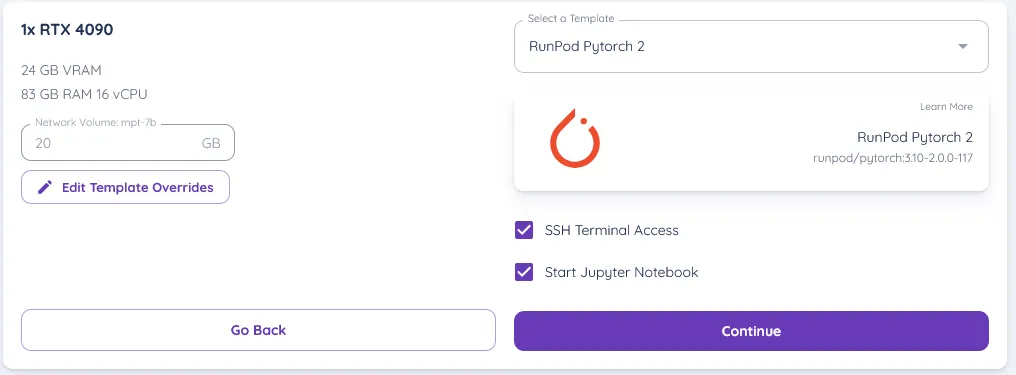

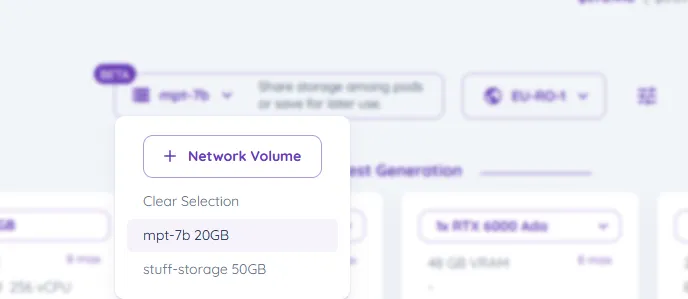

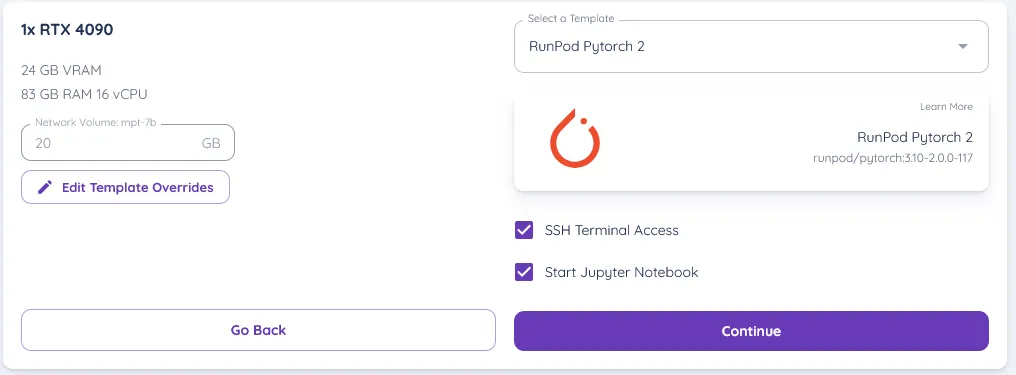

2. Let's start a pod from the Runpod PyTorch 2 template (you can use any runtime container you like) by selecting the pod you want and choosing the template.

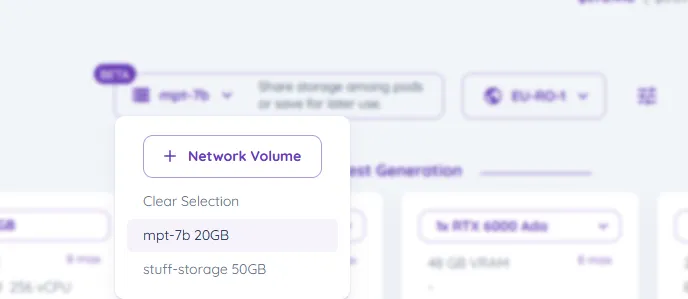

(Make sure your network volume is selected on the pod.)

3. Start the pod, open the Jupyter Lab interface, and then open a terminal.

4. Now, in the terminal, create a Python virtual environment (make sure your current directory is /workspace).

Update Ubuntu with

Then create a virtual environment

This should create a virtual environment in /workspace.

Next, activate the virtual environment with

5. For this tutorial, I'm going to develop and deploy Bark, a text-to-speech engine that produces realistic-sounding audio.

Let's install the package from the terminal, along with runpod and scipy.

6. Let's create a Python file named "handler.py", save it at /workspace/handler.py, and type the following code into it.

The Python code for running Bark

Code explanation:

This basically returns the generated audio as a file.
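As a rough illustration of what such a handler could look like, here is a minimal sketch rather than the exact code from this tutorial. It assumes the job input carries the text under a `prompt` key and that the audio is returned as base64-encoded WAV under an `audio_base64` key; both key names, and the `encode_audio` and `main` helpers, are hypothetical. The Bark, scipy, and runpod imports sit inside the functions so the file can be imported even where those packages are not installed.

```python
import base64
import io


def encode_audio(wav_bytes):
    """Wrap raw WAV bytes in a JSON-friendly dict (the key name is an assumption)."""
    return {"audio_base64": base64.b64encode(wav_bytes).decode("ascii")}


def handler(job):
    """Generate speech for job["input"]["prompt"] and return it as base64 WAV."""
    # Imported lazily: these packages only exist inside the venv on the worker.
    from bark import SAMPLE_RATE, generate_audio
    from scipy.io.wavfile import write as write_wav

    prompt = job["input"]["prompt"]  # "prompt" is an assumed input field
    audio = generate_audio(prompt)   # NumPy array of audio samples

    # Write the WAV into memory instead of a file on disk.
    buffer = io.BytesIO()
    write_wav(buffer, SAMPLE_RATE, audio)
    return encode_audio(buffer.getvalue())


def main():
    """Load the Bark models once, then hand control to the Runpod worker loop."""
    import runpod
    from bark import preload_models

    preload_models()
    runpod.serverless.start({"handler": handler})
```

To use this as handler.py, finish the file with a call to `main()`. Returning base64 text keeps the response JSON-serializable; a larger setup might upload the file somewhere and return a link instead.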

7. Let's write a startup file. This will be the code used to start Docker up with serverless. We'll save it as "/workspace/pod-startup.sh".

1. Deactivate the venv we're currently in by typing deactivate in the terminal.

2. Run sh pod-startup.sh to test it out.

This should show the generation process, and we should be able to see the process running!

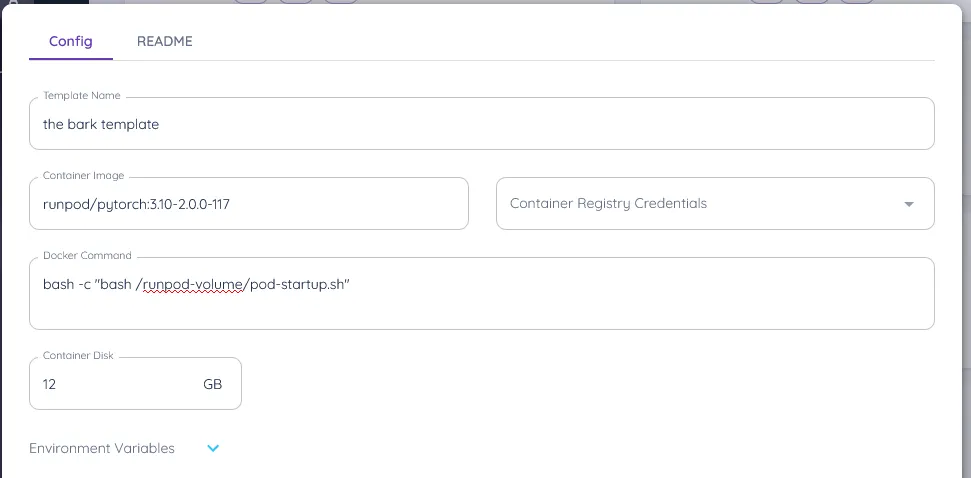

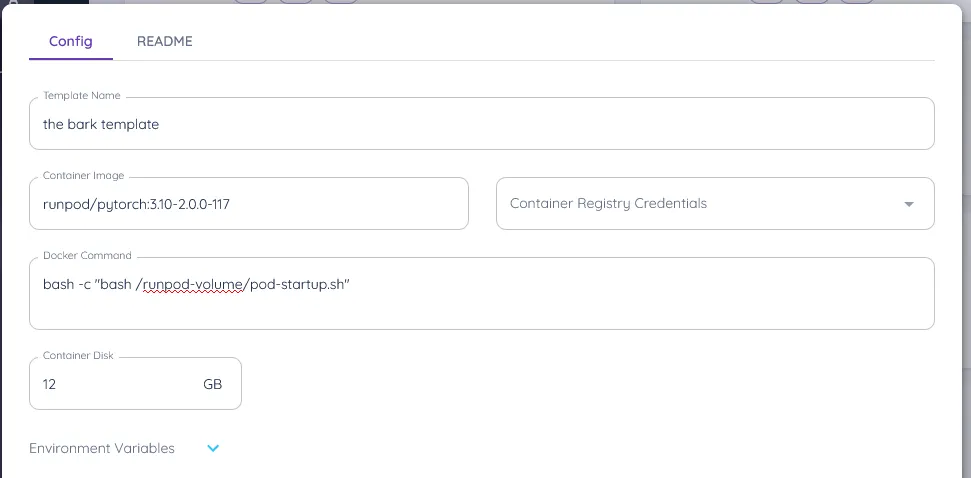

Go to https://www.console.runpod.io/serverless/user/templates and create a new template.

2. Set its variables to the following.

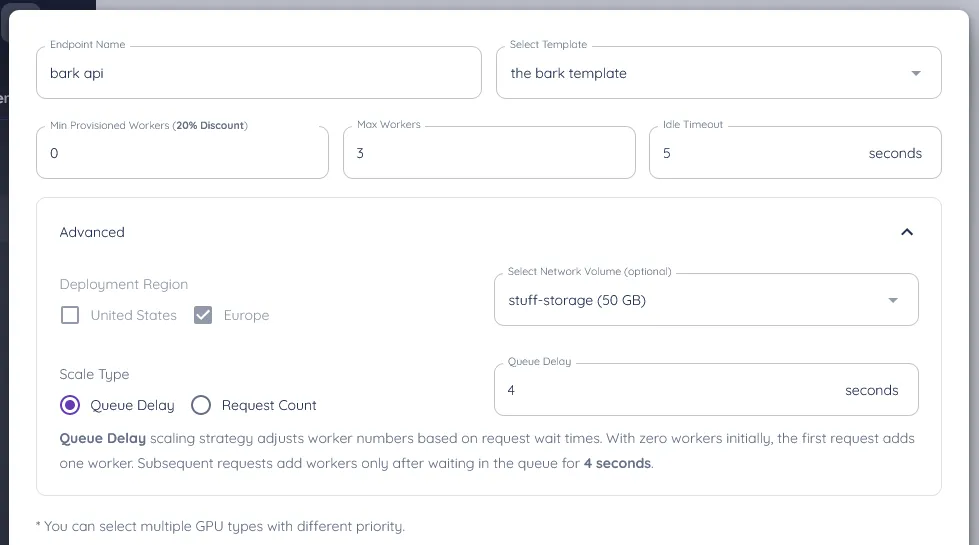

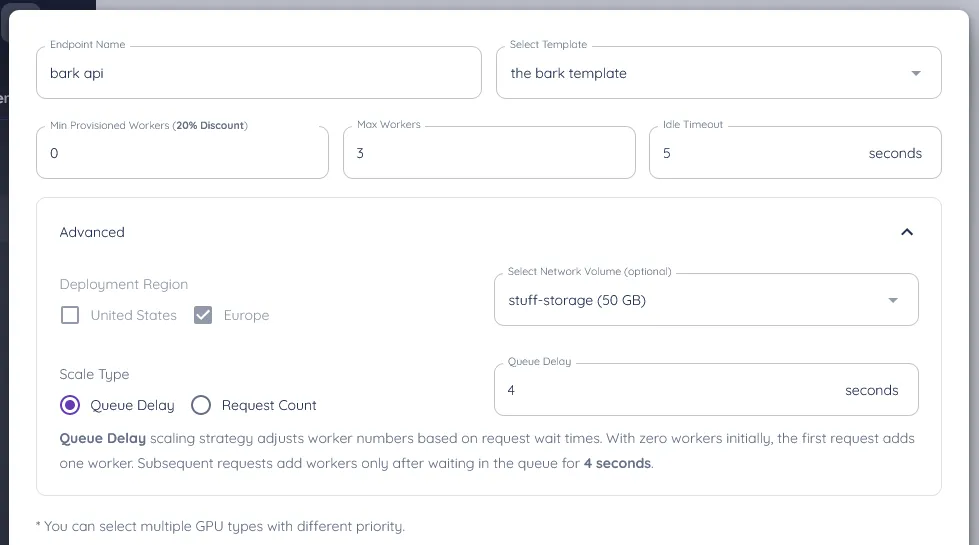

3. Now set up a Runpod serverless API using the template, with your network volume connected.

Make sure you connect it to both your network volume and your template.

And ta-da, you've set up your API!

You can now make requests to it. Here's some sample code to make a request and download a file (make sure you install the requests library first with pip install requests).
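A client along these lines could look like the sketch below. It is an illustration under assumptions, not the tutorial's original sample: it assumes the handler accepts a `prompt` input field and returns base64-encoded WAV data under an `audio_base64` key (both names are hypothetical), and it calls the endpoint's synchronous `runsync` route. The helper names `build_request`, `save_audio`, and `generate_speech` are made up for this example.

```python
import base64
from pathlib import Path


def build_request(endpoint_id, api_key, prompt):
    """Return (url, headers, payload) for a synchronous serverless call."""
    url = f"https://api.runpod.ai/v2/{endpoint_id}/runsync"
    headers = {
        "Authorization": f"Bearer {api_key}",
        "Content-Type": "application/json",
    }
    payload = {"input": {"prompt": prompt}}  # "prompt" is an assumed field
    return url, headers, payload


def save_audio(output, path):
    """Decode the base64 audio from the handler output and write it to path."""
    audio_bytes = base64.b64decode(output["audio_base64"])  # assumed key
    Path(path).write_bytes(audio_bytes)
    return len(audio_bytes)


def generate_speech(endpoint_id, api_key, prompt, path="output.wav"):
    """Send the prompt to the endpoint and save the returned audio locally."""
    import requests  # third-party: pip install requests

    url, headers, payload = build_request(endpoint_id, api_key, prompt)
    response = requests.post(url, headers=headers, json=payload, timeout=600)
    response.raise_for_status()
    return save_audio(response.json()["output"], path)
```

Calling `generate_speech("<your endpoint id>", "<your API key>", "Hello from Bark!")` would write `output.wav` in the current directory. For long generations, the asynchronous `run` route followed by polling `status` may be a better fit than `runsync`.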

Learn how to deploy Python machine learning models on Runpod without touching Docker. This guide walks you through using virtual environments, network volumes, and Runpod’s serverless API system to serve custom models like Bark TTS in minutes.

