Orchestration is the automation layer that makes compute, data, and runs dependable and cost-effective. In practice that means you declare what you want (which GPU, which image, what data) and an automation plane provisions resources, mounts volumes, runs jobs, collects metrics, and tears things down when work is done.

Good orchestration reduces cognitive overhead, speeds iteration, and directly lowers cloud spend by avoiding manual “just-in-case” provisioning and by enabling policies that prevent waste.

How this space compares to familiar tools:

ML teams benefit from orchestration that is GPU-aware, developer-friendly, and policy-driven, so they can iterate quickly without paying for avoidable waste.

dstack is an open-source, lightweight alternative to Kubernetes and Slurm — easier to operate day-to-day and built with a GPU-native design. It natively integrates with modern neo-clouds, so you can manage infrastructure on Runpod, other providers, or on-prem clusters from a single control plane.

It exposes three first-class primitives you declare in .dstack.yml: dev environments, tasks, and services.

dstack is declarative and CLI-first: you describe the desired state in a YAML file, and dstack apply makes it real (create, update, monitor).

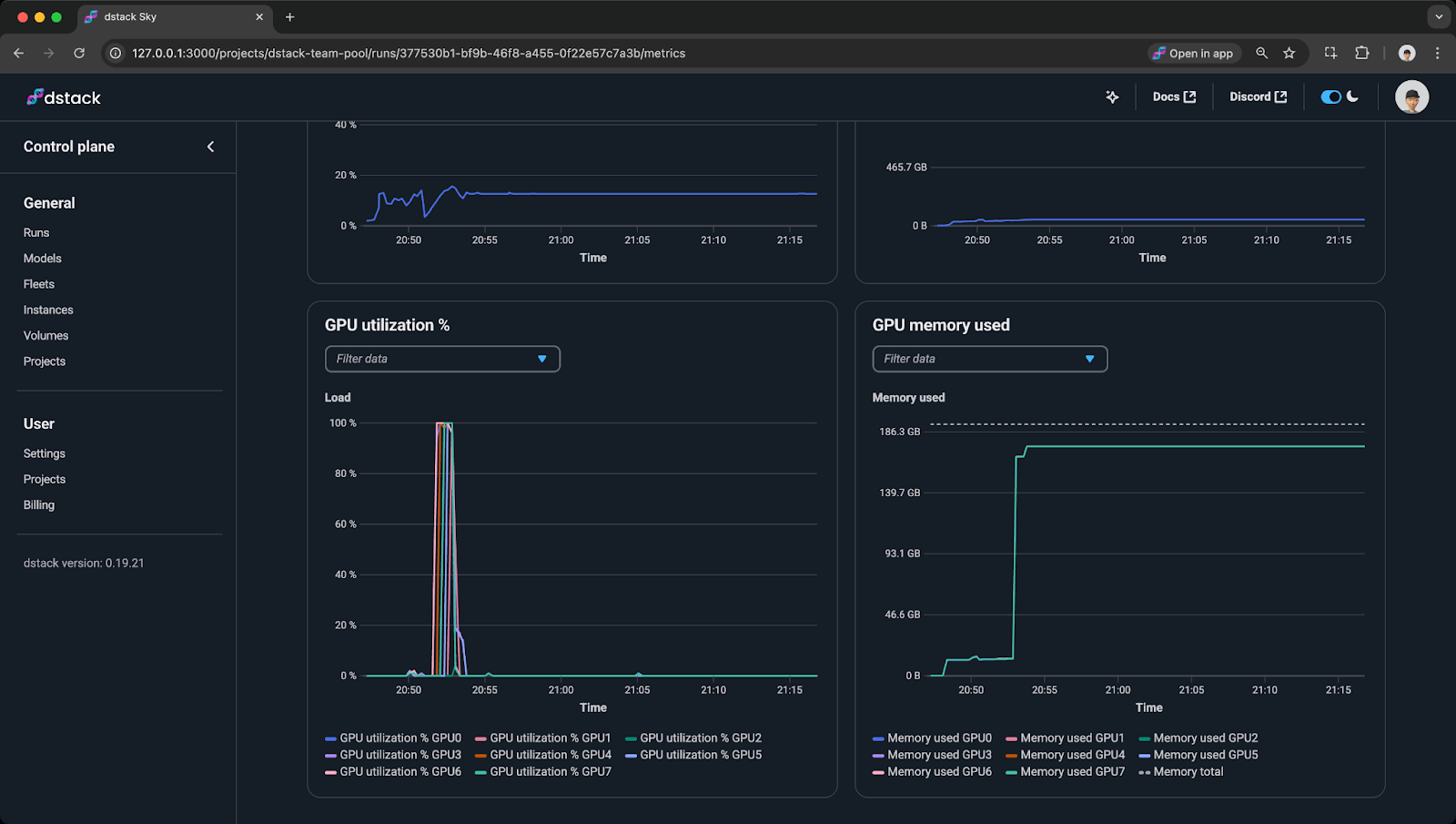

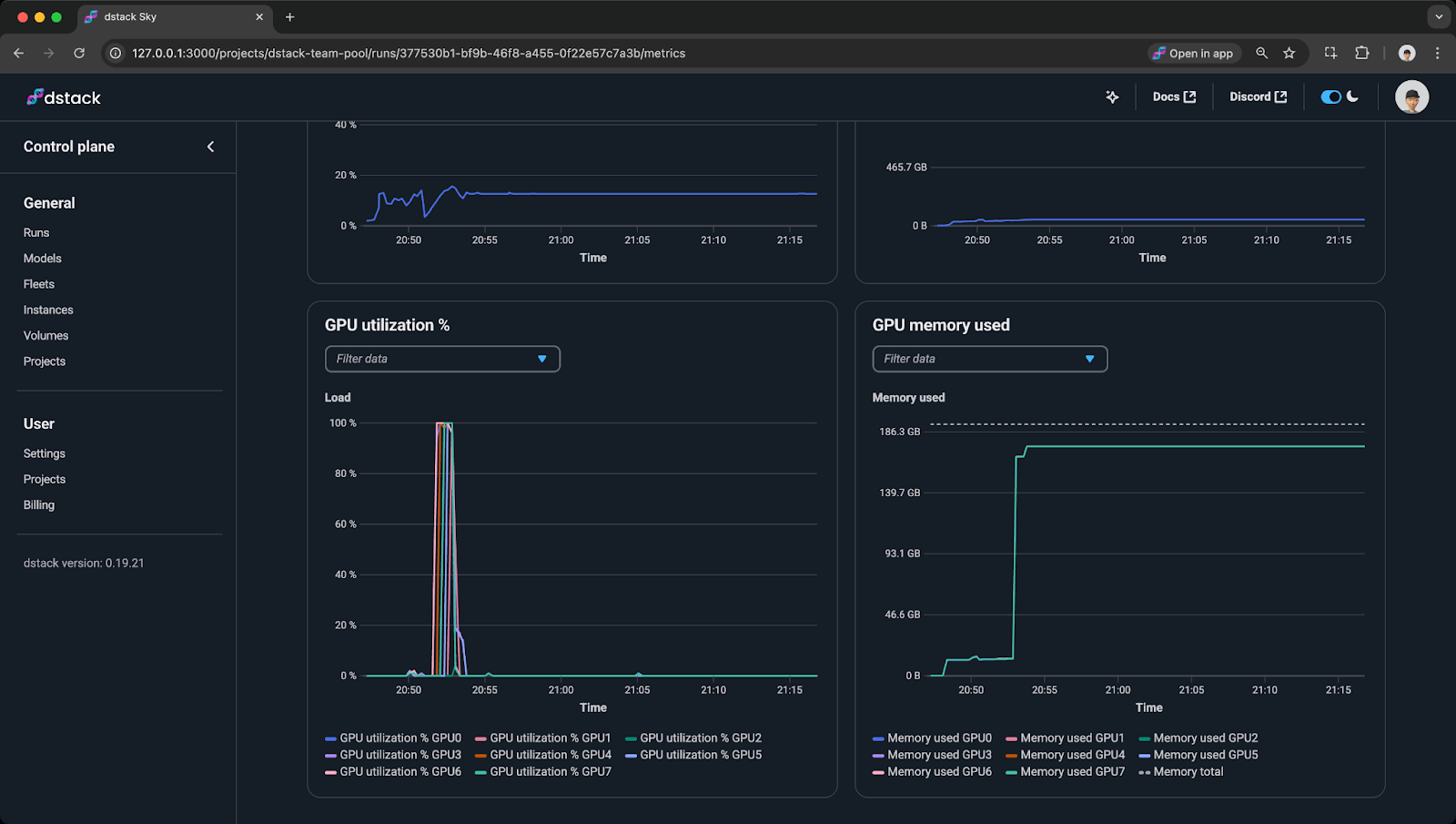

It functions both as an orchestrator (scheduling & provisioning) and as a team control plane (policies, metrics, and reusable project defaults), optimized for dev workflows while supporting production tasks and services.

Poor automation, forgotten sessions and low GPU utilization multiply your effective cost per useful GPU-hour. Two compact scenarios show how this happens.

Scenario A — ~3.5x

Scenario B — 7x

Even small idle padding plus mediocre utilization compounds quickly. The fix is simple in principle: reduce paid hours (auto-shutdown), increase utilization (better pipelines / profiling), and use policies (utilization-based termination, spot strategies, team defaults).
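To make the compounding concrete, here is a minimal sketch of the arithmetic. The function name and the input numbers are hypothetical and are not the figures behind Scenario A or B. It assumes the multiplier is simply paid hours divided by useful hours, where useful hours are the active hours scaled by average GPU utilization.

```python
def effective_cost_multiplier(paid_hours: float, active_hours: float, utilization: float) -> float:
    """Return how many times the list price you pay per *useful* GPU-hour.

    paid_hours   -- hours the instance was billed for (including idle padding)
    active_hours -- hours a job or user was actually running on it
    utilization  -- average GPU utilization during active hours, in (0, 1]
    """
    if paid_hours <= 0 or active_hours <= 0:
        raise ValueError("paid_hours and active_hours must be positive")
    if active_hours > paid_hours:
        raise ValueError("active_hours cannot exceed paid_hours")
    if not 0 < utilization <= 1:
        raise ValueError("utilization must be in (0, 1]")
    useful_hours = active_hours * utilization
    return paid_hours / useful_hours


# Hypothetical example: 12 billed hours, 9 of them active, 50% average utilization.
multiplier = effective_cost_multiplier(paid_hours=12, active_hours=9, utilization=0.5)
print(f"{multiplier:.2f}x")  # prints 2.67x
```

Under this model, both idle padding (paid hours above active hours) and low utilization enlarge the ratio, and because they multiply, modest values of each can add up to a large overall factor.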

EA case study: Electronic Arts uses dstack to streamline provisioning, improve utilization, and cut GPU costs.

"dstack provisions compute on demand and automatically shuts it down when no longer needed. That alone saves you over three times in cost."

-- Wah Loon Keng, Sr. AI Engineer, Electronic Arts

dstack provides general automation — declarative provisioning, unified logs/metrics, and managed lifecycle — and layered on top are focused policy primitives you can apply per-resource or as project defaults to cut waste.

For interactive work, set inactivity_duration so dev environments stop after a period with no attached user. Example:

```yaml
type: dev-environment
name: vscode
ide: vscode

# Stop if inactive for 2 hours
inactivity_duration: 2h

resources:
  gpu: H100:8
```

For dev environments and tasks, use utilization_policy to terminate tasks when GPUs stay under a utilization threshold for a time window:

```yaml
type: task
name: train

repos:
  - .

python: 3.12

commands:
  - uv pip install -r requirements.txt
  - python train.py

resources:
  gpu: H100:8

utilization_policy:
  min_gpu_utilization: 10
  time_window: 1h
```

To reduce hourly spend, prefer spot instances with spot_policy and a price cap; combine with checkpointing and retries for resilience:

```yaml
type: service
name: llama-2-7b-service

python: 3.12

env:
  - HF_TOKEN
  - MODEL=NousResearch/Llama-2-7b-chat-hf

commands:
  - uv pip install vllm
  - |
    python -m vllm.entrypoints.openai.api_server \
      --model $MODEL \
      --port 8000

port: 8000

resources:
  gpu: 24GB

# Use spot instances if available
spot_policy: auto
```

Last but not least, dstack’s multi-cloud and hybrid support lets you route jobs to the cheapest or closest backend without changing definitions.
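As a rough illustration of what "route to the cheapest backend" combined with a spot policy and a price cap might look like, here is a small sketch. It is not dstack's actual scheduling logic: the Offer class, the pick_offer function, the offer prices, and the max_price parameter are all hypothetical, and the "spot" and "on-demand" policy values are assumed alongside the "auto" value used in the service example.

```python
from dataclasses import dataclass
from typing import Iterable, Optional


@dataclass(frozen=True)
class Offer:
    """A hypothetical priced instance offer from one backend."""
    backend: str
    gpu: str
    price_per_hour: float
    spot: bool


def pick_offer(
    offers: Iterable[Offer],
    spot_policy: str = "auto",
    max_price: Optional[float] = None,
) -> Optional[Offer]:
    """Pick the cheapest offer allowed by the spot policy and price cap.

    spot_policy: "spot" (spot only), "on-demand" (on-demand only),
                 or "auto" (either, whichever is cheaper).
    Returns None when no offer qualifies.
    """
    if spot_policy not in {"spot", "on-demand", "auto"}:
        raise ValueError(f"unknown spot_policy: {spot_policy!r}")

    def allowed(offer: Offer) -> bool:
        if max_price is not None and offer.price_per_hour > max_price:
            return False
        if spot_policy == "auto":
            return True
        return offer.spot == (spot_policy == "spot")

    candidates = [offer for offer in offers if allowed(offer)]
    return min(candidates, key=lambda o: o.price_per_hour, default=None)


# Hypothetical offers; backend names and prices are made up for illustration.
offers = [
    Offer("runpod", "24GB", 0.80, spot=False),
    Offer("runpod", "24GB", 0.35, spot=True),
    Offer("on-prem", "24GB", 0.50, spot=False),
]
print(pick_offer(offers, spot_policy="auto"))
print(pick_offer(offers, spot_policy="on-demand", max_price=0.60))
```

The point of the sketch is that the run definition stays the same while the selection step changes with the policy, which is what lets the same configuration land on different backends.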

Runpod is natively supported as a dstack backend. That means your dstack server can request Runpod pods directly, letting you combine dstack ergonomics with Runpod’s GPU portfolio.

Quick steps:

Define your run in .dstack.yml and run dstack apply. Monitor startup with dstack logs.

Example backend snippet (~/.dstack/server/config.yml):

```yaml
projects:
  - name: main
    backends:
      - type: runpod
        creds:
          type: api_key
          api_key: US9XTPDIV8AR42MMINY8TCKRB8S4E7LNRQ6CAUQ9
```

Set various policies in your run configurations to balance cost and resilience on Runpod.
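As one illustration of such a policy, the utilization-based termination described earlier (min_gpu_utilization over a time_window) can be pictured as a sliding-window check. This is a simplified sketch under stated assumptions, not dstack's implementation: the should_terminate function and the sample format are hypothetical, and it tracks a single utilization series rather than per-GPU metrics.

```python
from datetime import datetime, timedelta
from typing import List, Tuple

Sample = Tuple[datetime, float]  # (timestamp, GPU utilization in percent)


def should_terminate(
    samples: List[Sample],
    now: datetime,
    min_gpu_utilization: float,
    time_window: timedelta,
) -> bool:
    """Return True if utilization stayed below the threshold for the whole window."""
    window_start = now - time_window
    # Be conservative: do nothing until the run has been observed for a full window.
    if not any(ts <= window_start for ts, _ in samples):
        return False
    recent = [util for ts, util in samples if window_start <= ts <= now]
    # Terminate only if there is data in the window and every sample is under the threshold.
    return bool(recent) and all(util < min_gpu_utilization for util in recent)


# Hypothetical metrics: one sample every 10 minutes for 2 hours, all at 5% utilization.
start = datetime(2025, 1, 1, 0, 0)
samples = [(start + timedelta(minutes=10 * i), 5.0) for i in range(13)]
now = start + timedelta(hours=2)
print(should_terminate(samples, now, min_gpu_utilization=10, time_window=timedelta(hours=1)))
```

Requiring every sample in the window to fall below the threshold means a single burst of real work resets the decision, which avoids killing jobs that are only briefly idle (for example, while loading data).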

Other useful options to configure and bake into team defaults:

Find even more tips at Protips.

dstack provides a GPU-native control plane that covers the full ML lifecycle — from development to training to inference. Its orchestration combines automation, policy-driven resource management, and utilization monitoring, helping teams eliminate idle and under-used GPUs while speeding iteration.

With Runpod’s flexible GPU options, dstack lets teams focus on building and deploying models, turning orchestration into both efficiency and real cost savings.

dstack is an open-source, GPU-native orchestrator that automates provisioning, scaling, and policies for ML teams, helping eliminate the waste that can inflate GPU costs 3–7× while simplifying dev, training, and inference. With Runpod integration, teams can spin up cost-efficient environments and focus on building models, not managing infrastructure.
