
The video generation landscape has witnessed a significant leap forward with the release of Wan 2.2, marking a substantial upgrade over its predecessor Wan 2.1. For cloud infrastructure providers and developers running video generation workloads, understanding these improvements is crucial for optimizing deployment strategies and resource allocation.

Wan 2.2 introduces a Mixture-of-Experts (MoE) architecture into video diffusion models, incorporates meticulously curated aesthetic data, and achieves complex motion generation through significantly larger training datasets (65.6% more images and 83.2% more videos than Wan 2.1). The MoE design is implemented as a “high noise” model and a “low noise” model that work in tandem, which is a departure from most previous video generation models. The high noise expert handles the early denoising stages, while the low noise expert handles the later stages, refining video textures and details. In ComfyUI, this means you now have two sets of sampler variables (CFG, scheduler, etc.) and can configure the step at which sampling moves from one expert to the next, which adds a great degree of customization to the process, along with some complication.
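To make the hand-off between the two experts concrete, here is a minimal sketch of step-based routing. The function names and the `switch_step` parameter are hypothetical and chosen for illustration; this is not Wan 2.2's or ComfyUI's actual code, only a picture of the idea that early steps go to one expert and later steps to the other.

```python
from typing import List


def expert_for_step(step: int, switch_step: int) -> str:
    """Return which expert denoises a given 0-indexed step (illustrative only)."""
    return "high_noise" if step < switch_step else "low_noise"


def build_schedule(total_steps: int, switch_step: int) -> List[str]:
    """List the expert assigned to each denoising step."""
    if not 0 <= switch_step <= total_steps:
        raise ValueError("switch_step must lie between 0 and total_steps")
    return [expert_for_step(step, switch_step) for step in range(total_steps)]


# Example: 8 steps in total, handing off to the low noise expert at step 4.
schedule = build_schedule(total_steps=8, switch_step=4)
print(schedule)
```

Moving `switch_step` earlier gives the low noise expert more of the run, and moving it later gives the high noise expert more.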

Wan 2.2's performance gains are attributed in large part to its expanded training corpus: 65.6% more images and 83.2% more videos than Wan 2.1 was trained on.

Notably, despite the much larger dataset, compute costs and memory footprint appear to be about the same. In addition, LoRAs trained on Wan 2.1 should work just fine; in my experience they work even better, since the model architecture remains the same while the models themselves are far more capable thanks to the upgraded dataset. If you wish to retrain, diffusion-pipe has already been updated to support Wan 2.2.

The Text-Image-to-Video 5B (TI2V-5B) model represents perhaps the most significant practical breakthrough in Wan 2.2's lineup. This model supports both text-to-video and image-to-video generation at 720P resolution and 24fps, and it can run on consumer-grade graphics cards such as the RTX 4090.

Understanding the TI2V Architecture

TI2V-5B employs a unified architecture that handles both text-only and text-with-image inputs through a single model. Unlike the larger A14B models that use Mixture-of-Experts, TI2V-5B uses a dense transformer architecture optimized for efficiency and consumer hardware deployment. The model's versatility lies in its conditional processing approach: the same model performs text-to-video when given only a prompt and image-to-video when an image is supplied alongside it.

Here’s what you’ll need to do to get started with Wan 2.2 T2V on Runpod in just a few minutes.

Select the GPU of your choice. If you just want to test the model’s maximum capabilities cheaply without worrying about running out of memory (OOM) at a high resolution or frame rate, an A100 is a good choice; cards with 48GB or less are more economical if you’re not shooting for the moon on video size. H100s and H200s provide the fastest inference of all, but at a higher price.

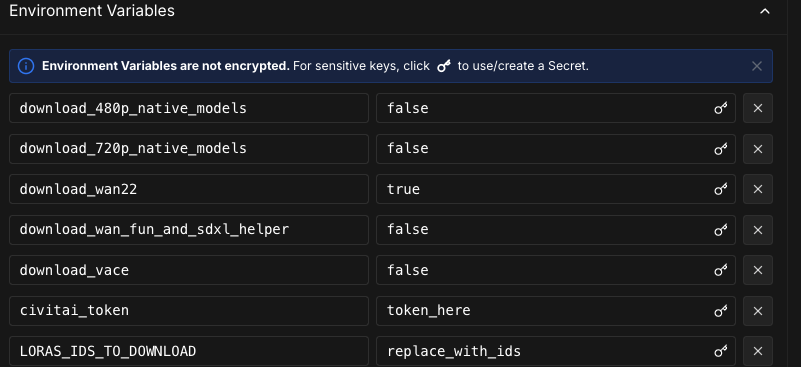

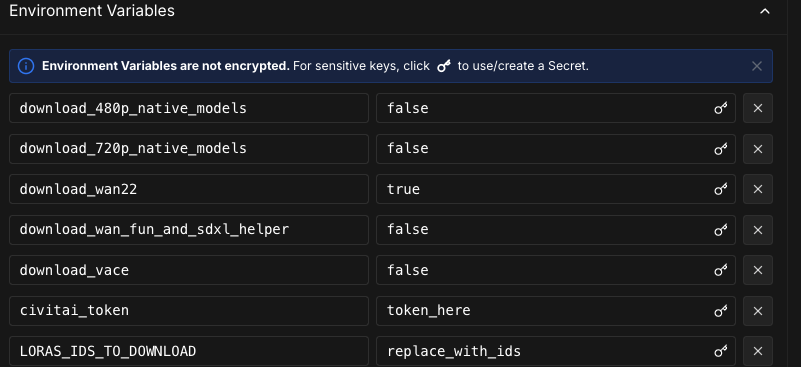

We’ll use the Wan 2.1/2.2 template from Hearmeman; make sure that you edit the environment variables to download 2.2.

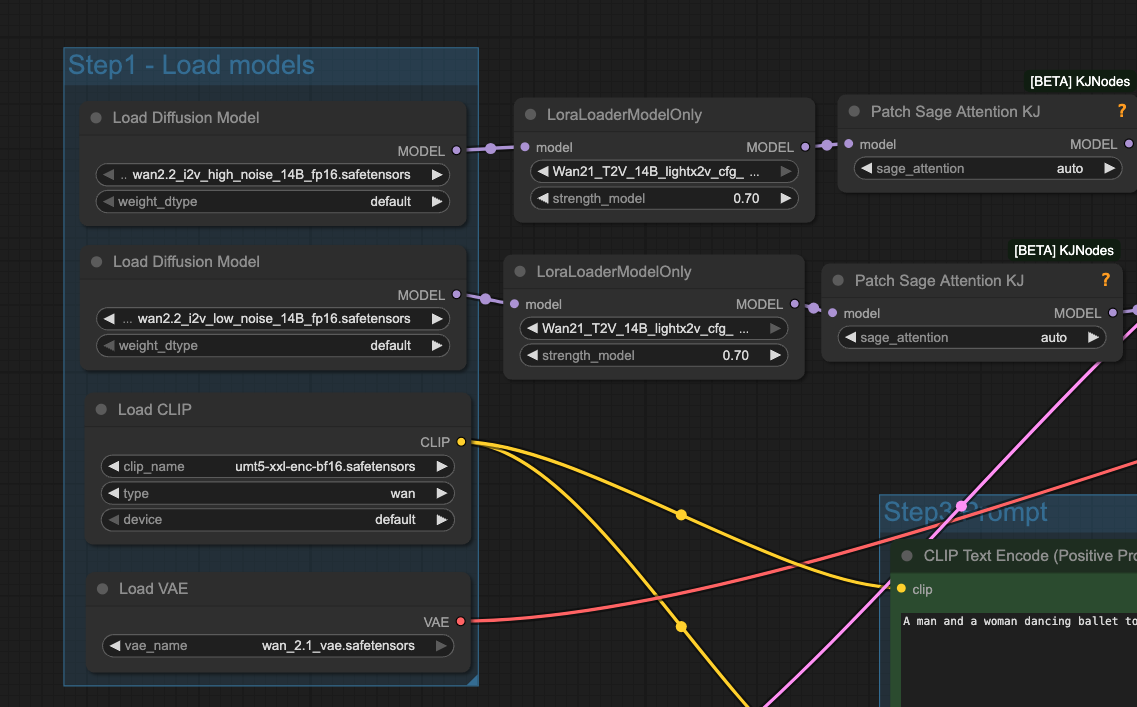

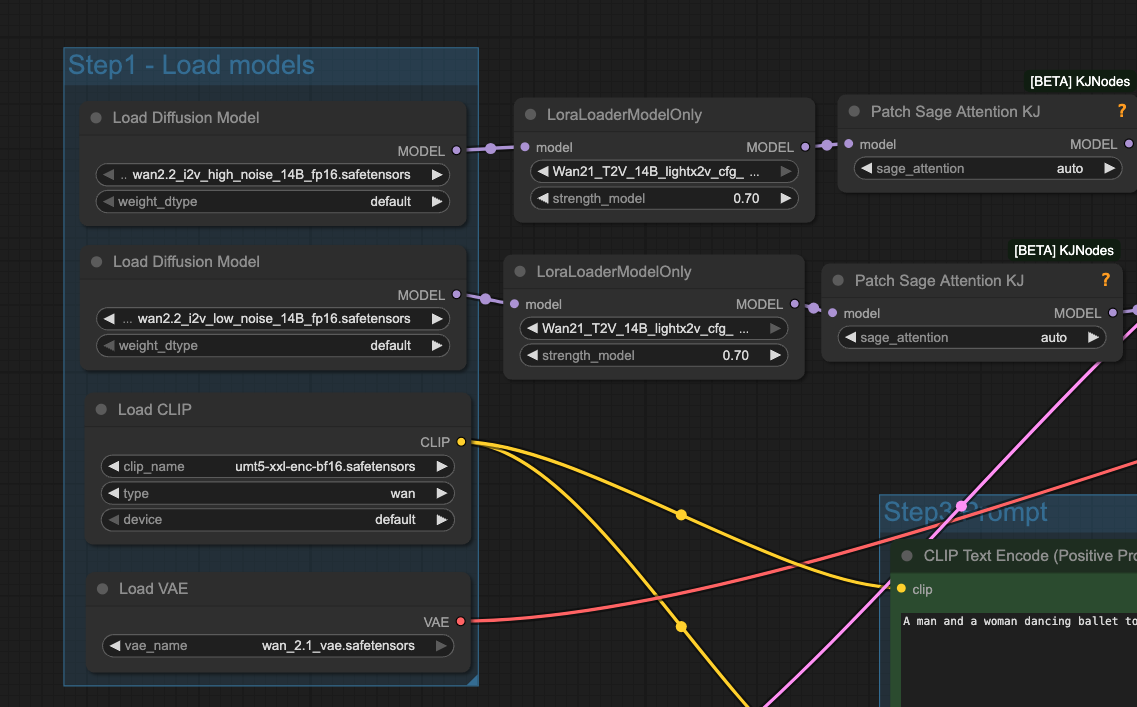

This Reddit post provides some great starter workflows for all modalities; we’ll start by dragging the T2V workflow into our pod’s ComfyUI. Here’s what’s changed in the latest iteration.

You now have two models that work in tandem, as described above. Each model can have LoRAs applied to it independently, at different strengths. However, both models still share a single prompt.
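As a rough illustration of what "different strengths" means, the sketch below applies the standard low-rank LoRA update, `W + strength * (B @ A)`, to toy weight matrices for the two experts. Everything here is an assumption for illustration: the matrices are made up, the helper names are hypothetical, and real loaders also apply rank and alpha scaling that is omitted here.

```python
from typing import List

Matrix = List[List[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Plain-Python matrix product, enough for a toy example."""
    return [
        [sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
        for i in range(len(a))
    ]


def apply_lora(weight: Matrix, lora_b: Matrix, lora_a: Matrix, strength: float) -> Matrix:
    """Return weight + strength * (lora_b @ lora_a), the basic LoRA update."""
    delta = matmul(lora_b, lora_a)
    return [
        [w + strength * d for w, d in zip(w_row, d_row)]
        for w_row, d_row in zip(weight, delta)
    ]


# Toy stand-in for one weight matrix (each real expert has its own weights).
base = [[1.0, 0.0], [0.0, 1.0]]
lora_b = [[1.0], [2.0]]   # shape 2x1
lora_a = [[0.5, 0.0]]     # shape 1x2

high_noise_weight = apply_lora(base, lora_b, lora_a, strength=1.0)
low_noise_weight = apply_lora(base, lora_b, lora_a, strength=0.5)
print(high_noise_weight)
print(low_noise_weight)
```

The same LoRA nudges the two experts by different amounts, which is the lever the separate strength settings expose.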

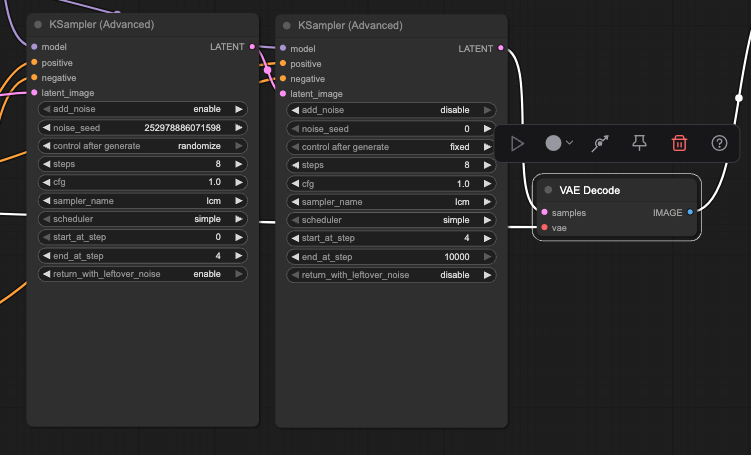

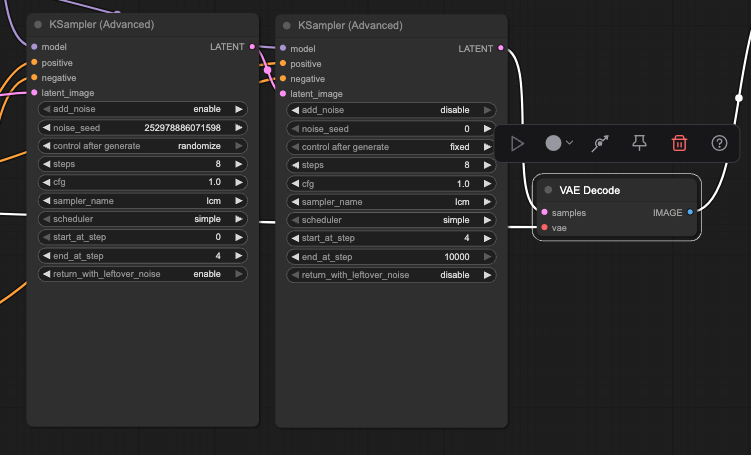

You also have two sets of samplers, one for each model. Note that the default CFG is now very low; the typical setting of 6 will very easily ‘cook’ outputs under this new paradigm. Each expert (high-noise and low-noise) has learned specialized representations, and when CFG scaling is applied, it amplifies the confidence of both experts simultaneously. Remember that the ‘high’ model is the scene director and the ‘low’ model is the refiner, so a high CFG on the first model will blow out the output faster than a high CFG on the second.
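For intuition on why a large CFG value overshoots, here is a small sketch of the standard classifier-free guidance combination, `uncond + scale * (cond - uncond)`. The numbers are toy values and the function name is hypothetical; real samplers apply this to full noise-prediction tensors at every step.

```python
from typing import List


def apply_cfg(uncond: List[float], cond: List[float], cfg_scale: float) -> List[float]:
    """Combine unconditional and conditional predictions with a guidance scale."""
    return [u + cfg_scale * (c - u) for u, c in zip(uncond, cond)]


# Toy predictions standing in for a model's noise estimates.
uncond = [0.0, 1.0]
cond = [0.5, 2.0]

print(apply_cfg(uncond, cond, cfg_scale=1.0))  # equals the conditional prediction
print(apply_cfg(uncond, cond, cfg_scale=6.0))  # the difference is amplified six-fold
```

At a scale of 1 the result is simply the conditional prediction; at 6 the gap between the two predictions is multiplied six times over, which is the kind of overshoot that shows up as ‘cooked’ output.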

However, you can also specify what proportion of the steps is allocated to each model. This can help mitigate oversaturation while still allowing a high CFG, provided the share of generation time spent at high CFG is kept suitably small.
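One way to reason about the split is to compute the two step ranges from a single fraction. The sketch below is illustrative only: `split_steps` is a hypothetical helper, the CFG values are placeholders rather than recommended settings, and the `start_at_step` / `end_at_step` keys assume the workflow uses ComfyUI's advanced sampler node, which exposes inputs with those names.

```python
from typing import Dict, Tuple


def split_steps(
    total_steps: int, high_fraction: float, high_cfg: float, low_cfg: float
) -> Tuple[Dict[str, float], Dict[str, float]]:
    """Build per-expert sampler settings from the share of steps given to the high noise model."""
    if not 0.0 <= high_fraction <= 1.0:
        raise ValueError("high_fraction must be between 0 and 1")
    switch = round(total_steps * high_fraction)
    high = {"steps": total_steps, "cfg": high_cfg, "start_at_step": 0, "end_at_step": switch}
    low = {"steps": total_steps, "cfg": low_cfg, "start_at_step": switch, "end_at_step": total_steps}
    return high, low


# Placeholder values: a quarter of 20 steps at a higher CFG, the rest at a low CFG.
high_sampler, low_sampler = split_steps(total_steps=20, high_fraction=0.25, high_cfg=3.5, low_cfg=1.0)
print(high_sampler)
print(low_sampler)
```

Shrinking `high_fraction` shortens the time spent under the higher CFG, which is the trade-off the paragraph above describes.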

Long story short, you have many, many more levers to pull, each of which interacts with the others in different ways, but together they allow a great deal of fine-tuning that wasn’t possible in previous models.

Wan 2.2 represents a significant architectural and performance leap over Wan 2.1, introducing MoE efficiency, enhanced training data, and consumer GPU accessibility. The combination of technical innovation and practical deployment considerations makes it a compelling upgrade for organizations operating video generation infrastructure, while its gains in performance and prompt understanding will please hobbyists as well.

For deployment support and infrastructure optimization for Wan 2.2 on Runpod's GPU cloud platform, check out our Discord for customized solutions and scaling strategies.

