New Public Endpoints, Load Balancing Serverless, Billing Updates, Deploy Hub Listings as Pods

New Public Endpoints

We’ve got a plethora of new Public Endpoints for you to try out, and we’re continually adding more as time goes on! Here’s a complete list of new endpoints we’ve added:

- Pruna image edit/t2i: P-Image is focused on lightning-quick inference; a 768 × 1344 image often generates in under three seconds. Excellent for rapid prototyping and fast iteration.

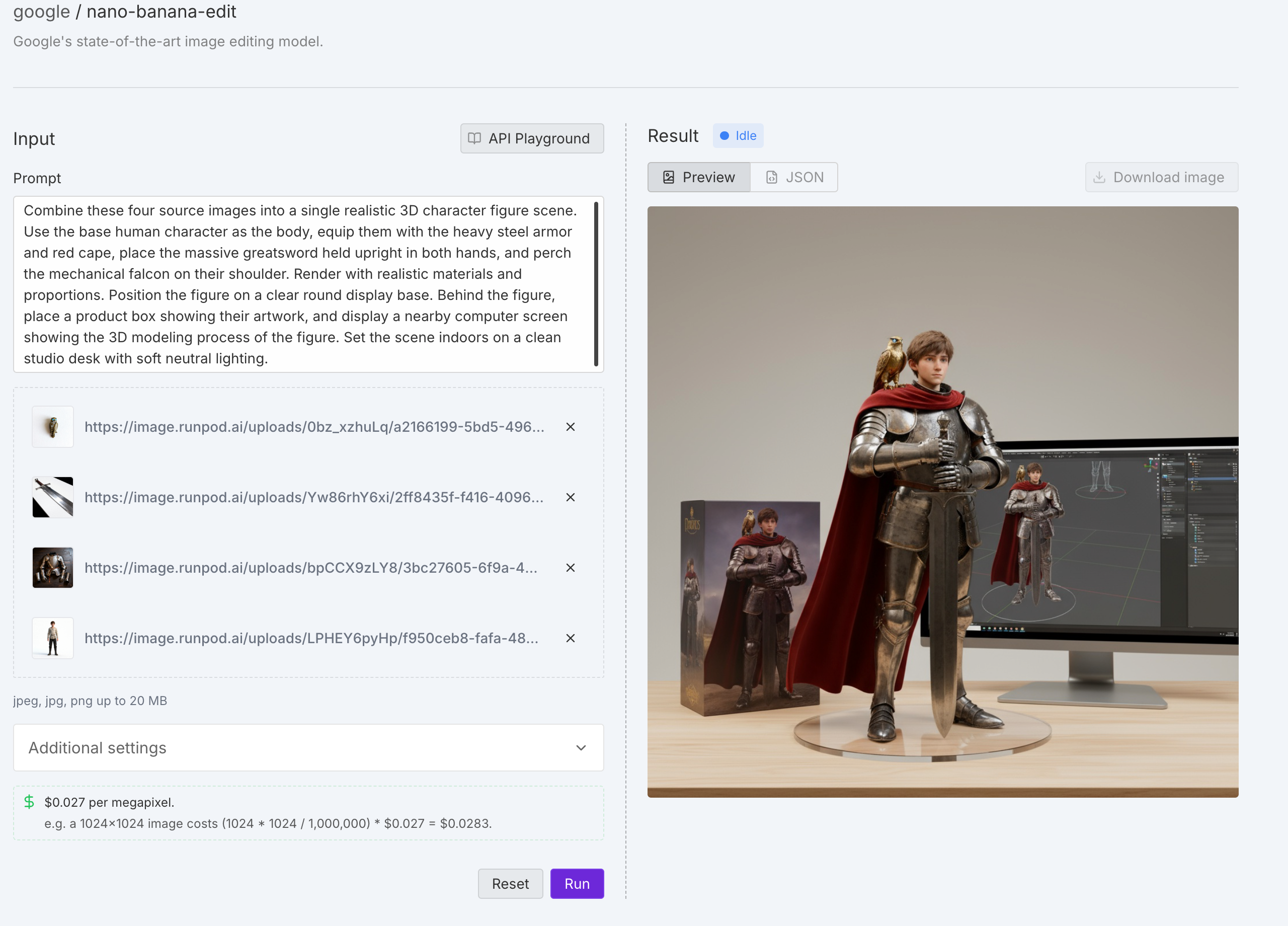

- Nano Banana Pro: Google’s new iteration of its image editing model. Supports resolutions of up to 2K (with upscaling to 4K), with enhanced research capabilities to drive its editing process.

- Sora 2 Pro I2V: OpenAI’s video generation model. It accurately models complex movements, generates synchronized audio, and supports 1792×1024 and 1024×1792 resolutions. Generate up to 12 seconds of video through the endpoint.

- InfiniteTalk: Synchronizes not only lips but also head movements, body posture, and facial expressions with audio input, creating more natural and comprehensive video animations.

- Granite 4.0: A 32B MoE model from IBM with 9B active parameters. Its hybrid architecture focuses on fast throughput and efficiency, and the small active parameter count makes it really quick.

- Deep Cogito 2.1 671B: An MoE model that matches or exceeds DeepSeek R1 performance while using fewer tokens, resulting in both cheaper and faster inference at the same quality point. The older version has been removed to make way for the new model.

Private endpoints: Our Public Endpoints have additional configuration options and can be forked into a private version if you have special needs or use cases. What can be done differs from endpoint to endpoint, but click Fork Private Endpoint in the UI for any Public Endpoint to send our team a message, and we will see what we can do for you.

Load balancing serverless endpoints: Provide direct HTTP access to workers, bypassing the traditional queue infrastructure for lower latency responses. Unlike queue-based endpoints that use standardized handlers and fixed /run or /runsync paths, load balancing endpoints allow you to build custom REST APIs with any HTTP framework (FastAPI, Flask, Express.js) and define your own URL paths, methods, and contracts.
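To make the "define your own URL paths" idea concrete, here is a minimal sketch of a worker that serves custom routes. It uses only Python's standard library in place of FastAPI or Flask so it runs anywhere. The paths (`/health`, `/v1/echo`), the port, and the `route`/`serve` helpers are illustrative assumptions, not anything Runpod requires.

```python
import json
from http.server import BaseHTTPRequestHandler, HTTPServer


def route(method, path, payload):
    """Map a request to (status_code, response_dict).

    The paths below are hypothetical; a real worker defines its own contract.
    """
    if method == "GET" and path == "/health":
        return 200, {"status": "ok"}
    if method == "POST" and path == "/v1/echo":
        return 200, {"echo": payload}
    return 404, {"error": f"no route for {method} {path}"}


class Handler(BaseHTTPRequestHandler):
    def _respond(self, method):
        # Read and decode an optional JSON request body.
        length = int(self.headers.get("Content-Length") or 0)
        raw = self.rfile.read(length) if length else b""
        payload = json.loads(raw) if raw else None

        status, body = route(method, self.path, payload)
        data = json.dumps(body).encode("utf-8")

        self.send_response(status)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(data)))
        self.end_headers()
        self.wfile.write(data)

    def do_GET(self):
        self._respond("GET")

    def do_POST(self):
        self._respond("POST")


def serve(port=8000):
    """Start the server (blocks). The port is an assumption for this sketch."""
    HTTPServer(("0.0.0.0", port), Handler).serve_forever()
```

Calling `serve()` starts the server. Keeping the routing logic in a plain function like `route` makes the contract easy to unit test without opening a socket.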

Deploy Hub Listings as Pods: You can now deploy listings from the Hub as a Pod in addition to a serverless endpoint. This is perfect for troubleshooting, testing, and development because it removes a layer of complexity: you can focus on the actual task handling and testing before you decide to move to a more economical serverless format.

Backup payment methods: You can now add a backup payment method that gets automatically charged should your first one fail. This can help prevent data loss due to an unfunded account having its stopped pods or network volumes terminated.

Slurm Instant Clusters, Model Store in Beta, and New Public Endpoints

Slurm Instant Clusters Live

After months in beta and incredible feedback from our community, Slurm Instant Clusters are now fully live on Runpod. What used to take hours or days to set up now happens in seconds.

- Turnkey HPC Infrastructure. Launch production-ready clusters in just a few clicks.

- True Multi-Node Performance. Built for distributed training and large-scale simulations.

- Simple Web Management. Create, scale, and manage your entire cluster from one interface.

- Pay-As-You-Go. No idle cluster costs, just pure compute as you need it.

Model Store in Beta

We're launching Model Store in beta, a caching system that eliminates model download times when starting workers.

When deploying large models, you're stuck choosing between embedding them in Docker images and downloading them at startup. Both mean longer waits and charges during model loading.

Model Store places models on host machines before workers start. When you specify a model from Hugging Face, our scheduler prioritizes hosts that already have your model cached. If the model is cached, workers start instantly. If not, the system downloads it before billing for your worker starts.
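The cache-aware placement described above can be pictured with a small sketch. This is not Runpod's scheduler; the `Host` fields, the tie-breaking rule (most free GPUs), and the example model IDs are all assumptions made for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class Host:
    # All fields are hypothetical; a real scheduler tracks far more state.
    name: str
    free_gpus: int = 0
    cached_models: set = field(default_factory=set)


def pick_host(hosts, model_id):
    """Return (host, cache_hit), preferring hosts that already cache model_id.

    Returns (None, False) when no host has a free GPU.
    """
    candidates = [h for h in hosts if h.free_gpus > 0]
    if not candidates:
        return None, False
    # Tuples compare element by element: a cached host (True) always beats an
    # uncached one (False); free GPU count only breaks ties.
    best = max(candidates, key=lambda h: (model_id in h.cached_models, h.free_gpus))
    return best, model_id in best.cached_models


hosts = [
    Host("host-a", free_gpus=4),
    Host("host-b", free_gpus=1, cached_models={"org/model-x"}),
]
```

With these hosts, `pick_host(hosts, "org/model-x")` selects `host-b` as a cache hit even though `host-a` has more free GPUs. A model that no host caches falls back to `host-a` with `cache_hit` set to `False`, which corresponds to the download-before-billing path.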

New Public Endpoints

Hub Revenue Share for Maintainers + New Pods UX

Runpod Hub - Revenue Share Model

Publish to the Runpod Hub and earn credits when others deploy your repo: up to 7% of compute revenue, paid through monthly tiers (1/3/5/7%). Credits are auto-deposited into your account. Learn more here.

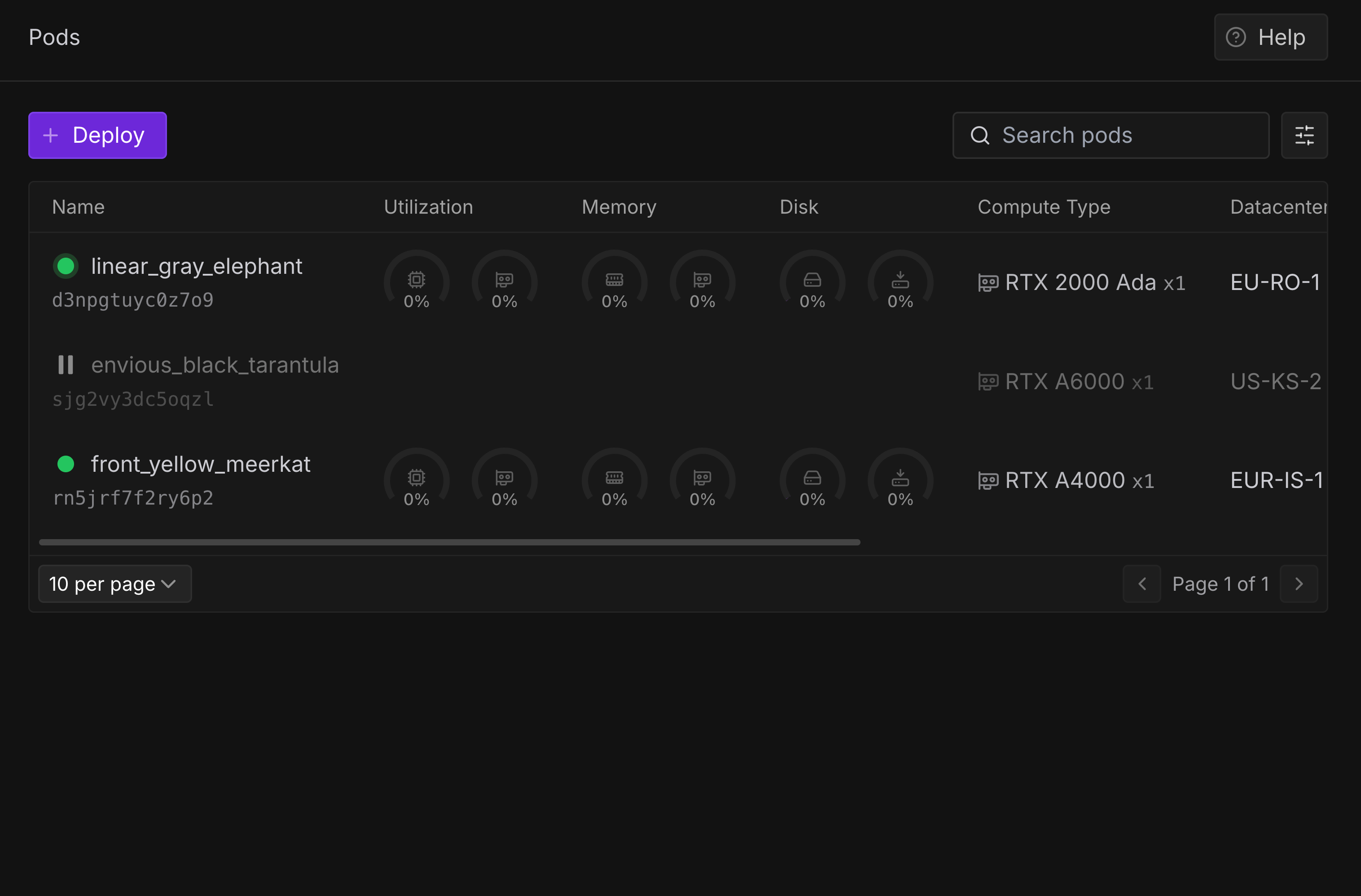
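As a quick worked example of the tier percentages, the sketch below computes the credits for a month at a given tier. The revenue figures are made up, and how a repo qualifies for each tier is not covered here; only the 1/3/5/7% rates come from the announcement.

```python
from decimal import Decimal

# Tier rates from the announcement, expressed as whole percentages.
TIER_PERCENTS = (1, 3, 5, 7)


def monthly_credits(compute_revenue, tier_percent):
    """Credits earned for one month, rounded to cents.

    compute_revenue: compute revenue generated by deployments of your repo.
    tier_percent: one of 1, 3, 5, 7.
    """
    if tier_percent not in TIER_PERCENTS:
        raise ValueError(f"unknown tier: {tier_percent}%")
    rate = Decimal(tier_percent) / Decimal(100)
    return (Decimal(str(compute_revenue)) * rate).quantize(Decimal("0.01"))
```

For instance, $2,000 of compute revenue at the 5% tier yields `monthly_credits(2000, 5)`, which is `Decimal("100.00")`.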

Pods UX Update

An updated, modern interface that makes it easier to interact with Runpod Pods.

Public Models via API + One-Click Slurm Clusters

Public Endpoints

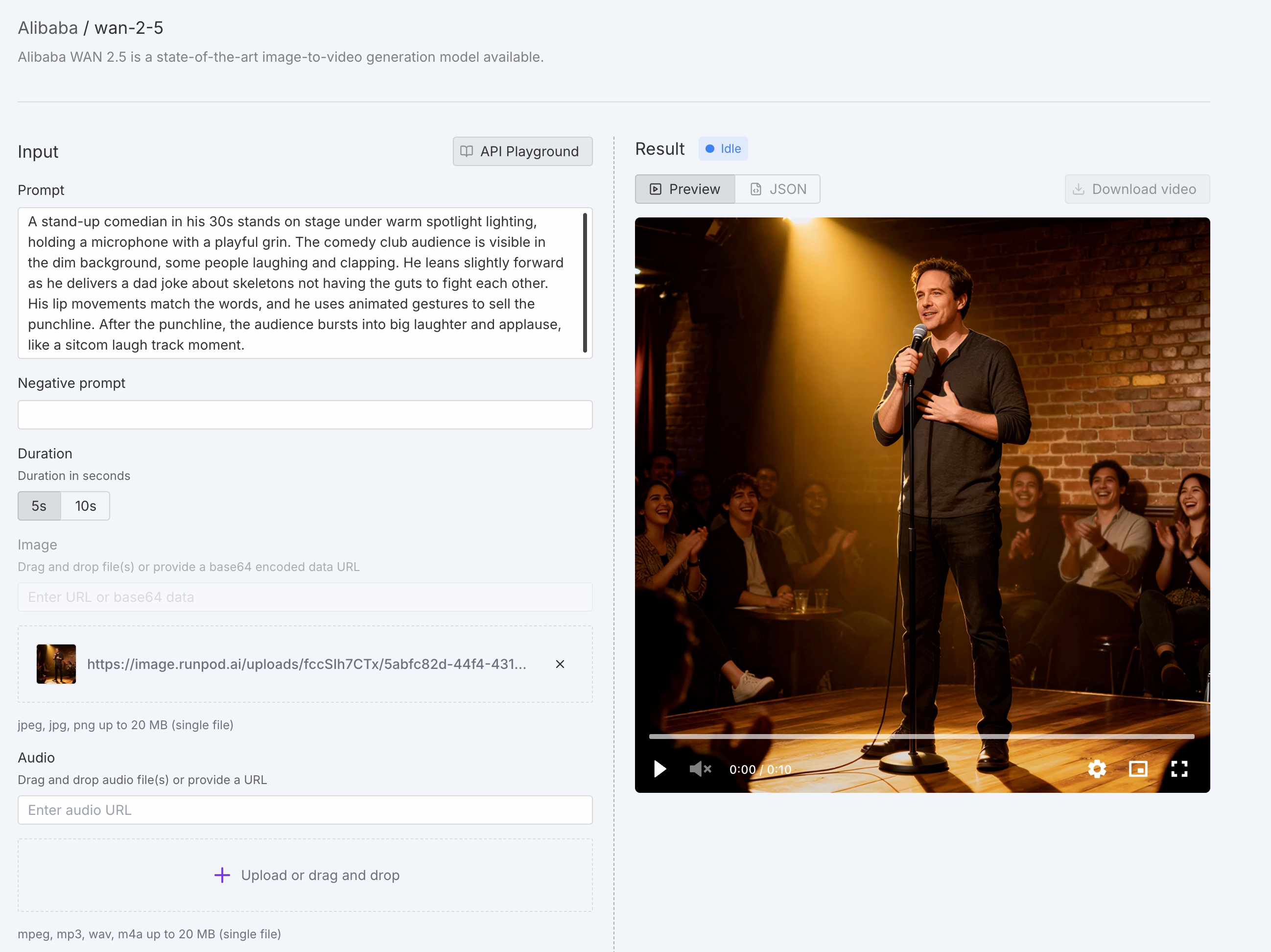

Instant access to state-of-the-art AI models through simple API calls, with an API playground available through the Runpod Hub. Available public endpoints include Whisper-V3-Large, Seedance 1.0 pro, Seedream 3.0, Qwen Image Edit, FLUX.1 Kontext, Deep Cogito v2 Llama 70B, Minimax Speech, and others.

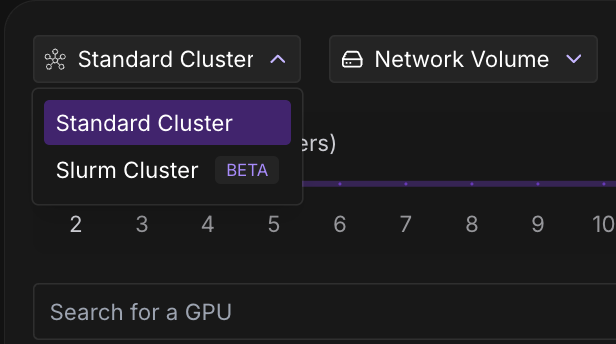

Instant Clusters v2 (Slurm beta)

Create on-demand multi-node clusters instantly, with full Slurm scheduling support available in beta.

Upload & Retrieve Files without Compute and Updated Referral Program

S3 API

Upload & retrieve files without compute. Use the AWS CLI or Boto3 with zero-config ease. Integrate Runpod storage into any AI pipeline with no rewrites or friction. Manage data with clean, object-level control for teams, models, and apps.

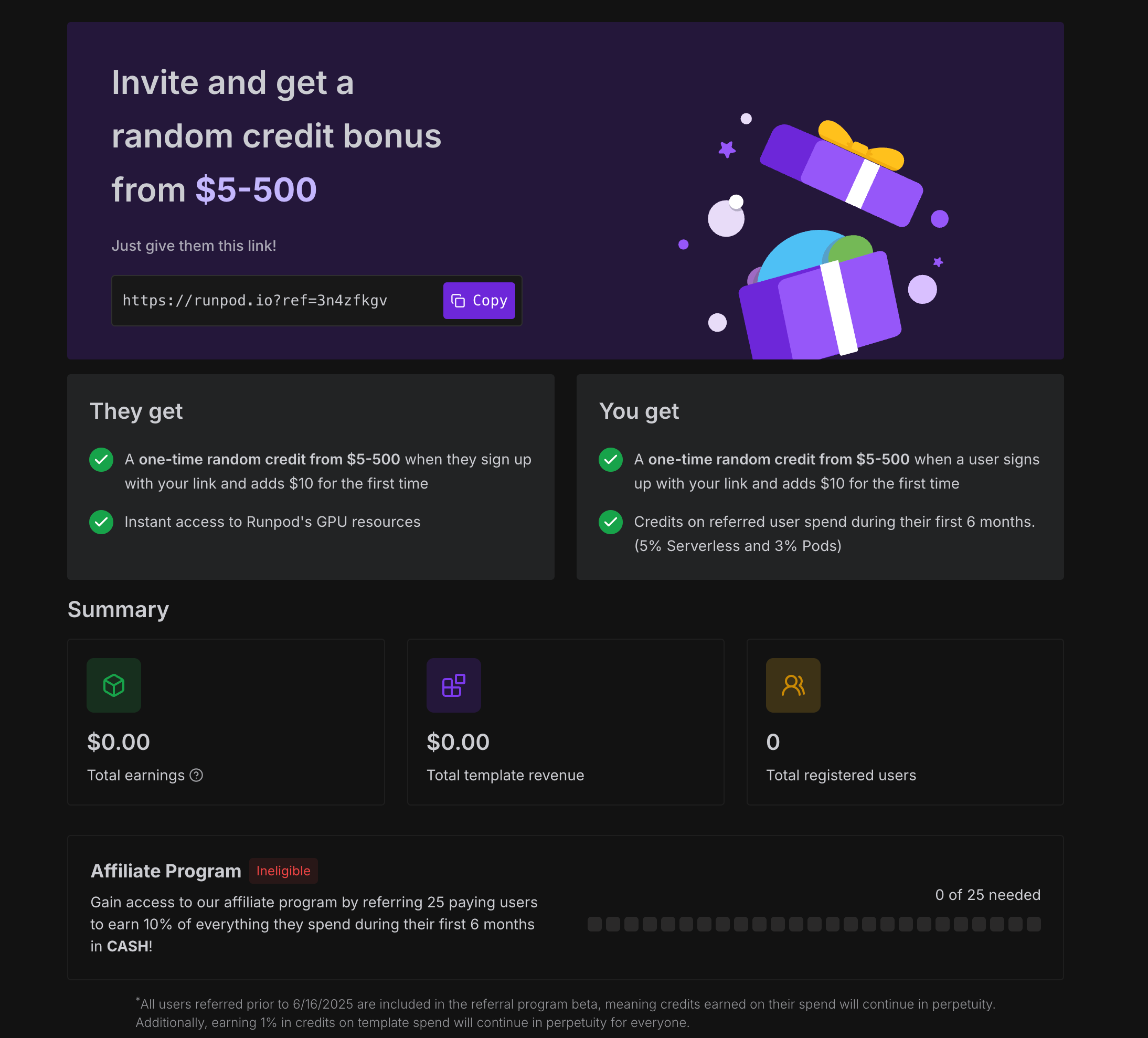
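Here is a rough sketch of what uploading a file with Boto3 could look like against an S3-compatible endpoint. The endpoint URL, region, bucket name, and environment variable names are placeholders, not real Runpod values; check the S3 API docs for the ones that apply to your account. Boto3 is a third-party package (`pip install boto3`).

```python
import os


def s3_client_kwargs(endpoint_url, region):
    """Build keyword arguments for boto3.client().

    The environment variable names are assumptions for this sketch. When they
    are unset, None is passed and Boto3 falls back to its default credential
    lookup.
    """
    return {
        "service_name": "s3",
        "endpoint_url": endpoint_url,
        "region_name": region,
        "aws_access_key_id": os.environ.get("S3_ACCESS_KEY_ID"),
        "aws_secret_access_key": os.environ.get("S3_SECRET_ACCESS_KEY"),
    }


def upload(local_path, bucket, key, endpoint_url, region):
    """Upload one local file as an object; no Pod or worker is involved."""
    import boto3  # imported lazily so the helper above works without it

    client = boto3.client(**s3_client_kwargs(endpoint_url, region))
    client.upload_file(local_path, bucket, key)


def download(bucket, key, local_path, endpoint_url, region):
    """Fetch one object back to local disk."""
    import boto3

    client = boto3.client(**s3_client_kwargs(endpoint_url, region))
    client.download_file(bucket, key, local_path)
```

A call would look like `upload("weights.bin", "my-bucket", "models/weights.bin", "https://s3.example.invalid", "us-east-1")`, with every argument swapped for your own values.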

Referrals v2

Updated rewards/tiers with clearer dashboards to track performance.

UX polish, further price relief, and a marketplace + new Python library

Port Labeling

Name exposed ports in UI/API for clearer collaboration (e.g., “Jupyter,” “TensorBoard”).

Price Drops (again!)

Additional reductions on popular SKUs to improve $/hr economics.

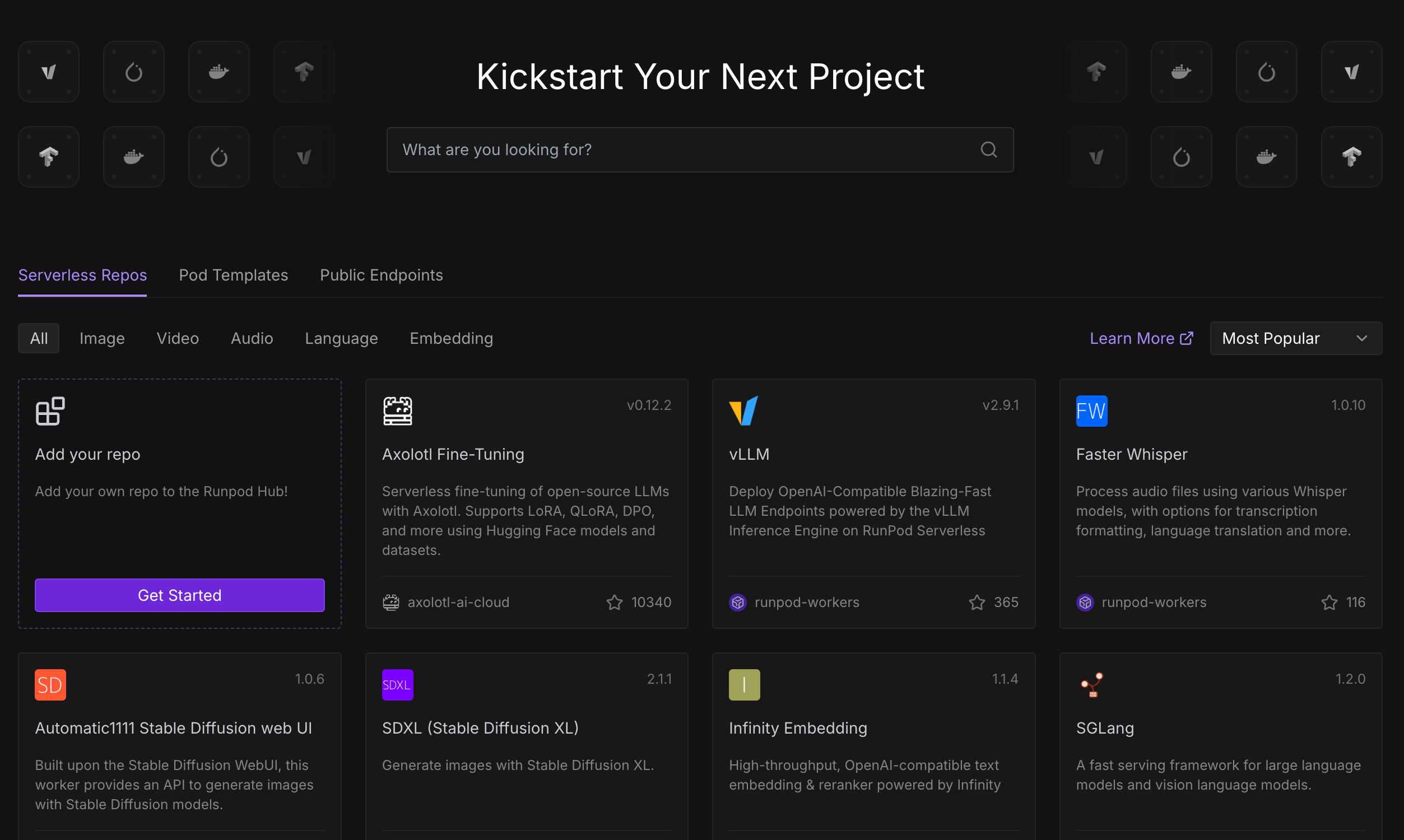

RunPod Serverless Hub

Curated one-click endpoints/templates to fork and deploy community projects quickly.

Tetra Beta Test

A Python library that makes it super easy to run parts of your code on a GPU using RunPod. With Tetra, you can write normal Python code and just add a simple @remote() decorator to the functions that need GPU power. Tetra takes care of the rest, offloading the work to RunPod while the rest of your code runs locally. Find the repo here and examples here.
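To show the general shape of the decorator pattern, here is a stand-in that is explicitly not Tetra's API. The `fake_remote` decorator below is hypothetical and simply hands the call to a local thread pool; the real `@remote()` sends the work to a GPU on RunPod and may take arguments this sketch does not model.

```python
import functools
from concurrent.futures import ThreadPoolExecutor

# Local stand-in for "somewhere else to run the work".
_executor = ThreadPoolExecutor(max_workers=2)


def fake_remote():
    """Hypothetical decorator factory mimicking the look of @remote()."""

    def decorator(func):
        @functools.wraps(func)
        def wrapper(*args, **kwargs):
            # Hand the call to the executor and wait for its result. From the
            # caller's point of view it is still an ordinary function call.
            future = _executor.submit(func, *args, **kwargs)
            return future.result()

        return wrapper

    return decorator


@fake_remote()
def sum_of_squares(values):
    # Imagine this is the GPU-heavy part of the program.
    return sum(v * v for v in values)


def main():
    # Everything outside the decorated function runs locally as usual.
    data = [1, 2, 3]
    return sum_of_squares(data)
```

The point of the pattern is that the calling code in `main` does not change: only the decorated function is marked for offloading.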

SSO convenience and wider global mesh.

Login with GitHub

OAuth sign-in/linking for faster onboarding and repo-driven workflows.

5090s on Runpod

RTX 5090 availability for high perf/cost-efficiency on training and inference.

Global Networking Expansion

Rollout to many additional DCs approaching full global coverage.

Enterprise features: compliance, APIs, clusters, bare metal, and APAC expansion.

CPU Pods get Network Storage Access

GA support for Network Volumes on CPU Pods for persistent, shareable storage.

SOC 2 Type I certification

Independent attestation of security controls for enterprise readiness.

REST API Release

REST API GA with broad resource coverage for full IaC workflows.

Instant Clusters ⚡

Spin up multi-node GPU clusters in minutes with private interconnect and per-second billing.

Bare Metal

Reserve dedicated GPU servers for maximum control, performance, and long-term savings.

AP-JP-1

New Fukushima region for low-latency APAC access and in-country data residency.

Modern API surface in beta and stronger community investment.

REST API Beta Test

RESTful endpoints for pods/endpoints/volumes for simpler automation than GraphQL.

Full-time Community Manager hire!

Dedicated programs, content, and faster community response.

Serverless GitHub Integration Release

GA for GitHub-based serverless deploys with production-ready stability.

New silicon options and LLM-centric serverless upgrades.

CPU Pods v2

Docker runtime parity with GPU pods for faster starts; adds Network Volume support.

H200s on Runpod

NVIDIA H200 GPUs available for larger models and higher memory bandwidth.

Serverless Upgrades

Higher GPU counts per worker, new quick-deploy runtimes, and simpler model selection.

Global Networking rollout continues and GitHub deploys arrive in beta.

Global Networking added to CA-MTL-3, US-GA-1, US-GA-2, US-KS-2

Expanded DC coverage for the private mesh.

Serverless GitHub Integration Beta Test

Deploy endpoints directly from GitHub repos with automatic builds.

Stronger auth for humans and machines.

Scoped API Keys

Least-privilege tokens with fine-grained scopes and expirations for safer automation.
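A least-privilege check boils down to two questions: has the key expired, and does it carry the scope the action needs? The sketch below illustrates that logic only. The `ApiKey` shape and scope names such as `endpoints:read` are invented for this example and are not Runpod's actual scope identifiers.

```python
from dataclasses import dataclass
from datetime import datetime, timezone


@dataclass(frozen=True)
class ApiKey:
    # Hypothetical representation of a scoped key.
    scopes: frozenset
    expires_at: datetime


def is_allowed(key, required_scope, now=None):
    """Return True only if the key is unexpired and holds required_scope."""
    now = now or datetime.now(timezone.utc)
    if now >= key.expires_at:
        return False
    return required_scope in key.scopes


# A CI key that may read endpoints but nothing else, valid through 2030.
ci_key = ApiKey(
    scopes=frozenset({"endpoints:read"}),
    expires_at=datetime(2030, 1, 1, tzinfo=timezone.utc),
)
```

With this key, a read check passes while `is_allowed(ci_key, "pods:write")` is rejected, and every check fails once the expiration time has passed.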

Passkey Auth

Passwordless WebAuthn sign-in for phishing-resistant account access.

More storage coverage and private cross-DC connectivity.

US-GA-2 added to Network Storage

Enable Network Volumes in US-GA-2.

Global Networking!

Private cross-data-center networking with internal DNS for secure service-to-service traffic.

Storage coverage grows, major price cuts, and revamped referrals.

US-TX-3 and EUR-IS-1 added to Network Storage

Network Volumes available in more regions for local persistence.

RunPod Slashes GPU Prices: Powering Your AI Applications for Less

Broad GPU price reductions to lower training/inference TCO.

Referral Program revamp

Updated commissions/bonuses and an affiliate tier with improved tracking.

$20M seed round, community event, and broader serverless/accelerator options.

$20M Seed by Intel Capital and Dell Technologies Capital, thank you!

Funds infra expansion and product acceleration.

First in-person Hackathon

Community projects, workshops, and real-world feedback.

Serverless CPU Pods

Scale-to-zero CPU endpoints for services that don’t need a GPU.

AMD GPUs

Adds AMD ROCm-compatible GPU SKUs as cost/perf alternatives to NVIDIA.

Compute beyond GPUs and first-class automation tooling.

CPU Pods

CPU-only instances with the same networking/storage primitives for cheaper non-GPU stages.

runpodctl

Official CLI for pods/endpoints/volumes to enable scripting and CI/CD workflows.

Console navigation overhaul and documentation refresh.

New Navigational Changes To RunPod UI

Consolidated menus, consistent action placement, and fewer clicks for common tasks.

Docs revamp

New IA, improved search, and more runnable examples/quickstarts.

Zhen AMA

Roadmap Q&A and community feedback session.

New regions and investment in community/support.

US-OR-1

Additional US region for lower latency and more capacity in the Pacific Northwest.

CA-MTL-1

New Canadian region to improve latency and in-country data needs.

Our first Community Manager hire!

Dedicated community programs and faster feedback loops.

Building out The Support Team

Expanded coverage and expertise for complex issues.

Faster starts from templates and better multi-region hygiene.

Serverless Quick Deploy

One-click deploy of curated model templates with sensible defaults.

EU Domain for Serverless (rip)

EU-specific domain briefly offered for data residency; superseded by other region controls.

Data-Center filter for Serverless

Filter and manage endpoints by region for multi-region fleets.

Self-service upgrades, clearer metrics, new pricing model, and cost visibility.

Self-service worker upgrade

Rebuild/roll workers from the dashboard without support tickets.

Edit template from endpoint page

Inline edit and redeploy the underlying template directly from the endpoint view.

Improved serverless metrics page

Refinements to charts/filters for quicker root-cause analysis.

Flex and Active Workers

Discounted always-on “Active” capacity for baseline load; burst with on-demand “Flex” workers.
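To see how the two worker types combine on a bill, here is a small cost sketch. The per-second rate, the 25% discount, and the 30-day month are made-up numbers for illustration; actual pricing depends on the GPU type and current rates.

```python
SECONDS_PER_MONTH = 30 * 24 * 3600  # assumes a 30-day month


def monthly_cost(active_workers, flex_worker_seconds, flex_rate, active_discount):
    """Estimate a month's spend for a baseline-plus-burst setup.

    active_workers: always-on workers covering baseline load.
    flex_worker_seconds: total seconds of on-demand burst capacity used.
    flex_rate: on-demand price per worker-second (hypothetical).
    active_discount: fractional discount on always-on workers (hypothetical).
    """
    active_rate = flex_rate * (1 - active_discount)
    active_cost = active_workers * SECONDS_PER_MONTH * active_rate
    flex_cost = flex_worker_seconds * flex_rate
    return active_cost + flex_cost


# One always-on worker plus 10,000 seconds of burst at $0.0004/s, 25% discount.
estimate = monthly_cost(1, 10_000, 0.0004, 0.25)
```

Under these assumed numbers the estimate comes to roughly $781.60: about $777.60 for the always-on worker and $4.00 for the burst usage.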

Billing Explorer

Inspect costs by resource/region/time to identify optimization opportunities.