If you’ve landed here, chances are you’ve experienced some of the common challenges that come with using cloud-based GPU rental platforms like Vast AI.

From fluctuating hardware availability and budget pressures to interfaces that can feel less than user-friendly—it’s a familiar story for many developers, researchers, and small teams.

While platforms like Vast AI have helped pave the way for flexible GPU access, ongoing pain points have led some users to explore other options that better align with their evolving needs.

The good news? 2025 has brought a wave of new GPU rental services focused on improving reliability, cost-effectiveness, and overall usability. In this guide, we’ll explore 8 solid alternatives gaining traction this year, so you can find the one that fits your workflow, goals, and budget.

What to Look for in a Vast AI Alternative?

Vast AI offers many valuable features that users appreciate, but if you're seeking greater scalability, consider these key factors when evaluating alternative platforms:

- Pricing transparency with predictable costs and no hidden fees to avoid budget surprises when scaling.

- Robust GPU availability across various NVIDIA models (A100s, H100s, etc.) with minimal wait times during high-demand periods.

- Intuitive user interface with straightforward setup process for both beginners and experienced ML engineers.

- Comprehensive security features including encryption, isolated environments, and compliance certifications relevant to your industry.

- Reliable technical support with quick response times and access to knowledgeable staff who understand ML workflows.

- Flexible scheduling options that allow for both on-demand computing and reserved instances based on project needs.

- Simple integration capabilities with popular ML frameworks and tools in your existing development pipeline.

The 8 Best Vast AI Alternatives


1. Runpod.io

Via Runpod.io

Runpod.io is a powerful cloud computing platform specifically tailored to deliver scalable and cost-effective GPU resources ideal for AI and machine learning workloads.

It offers unparalleled flexibility, allowing users to quickly deploy GPU and CPU instances via intuitive Pods and Serverless computing options, perfect for those who need resources that adapt instantly to varying workloads.

Users gain access to an impressive range of NVIDIA GPUs, including high-performance models like the H100 PCIe and A100 PCIe, enabling precise hardware selection to match performance and budget requirements.

With over 50 pre-configured templates for popular environments such as PyTorch and TensorFlow, Runpod significantly reduces setup times, letting you dive straight into development.

Its Serverless computing model features automatic scaling and pay-per-second billing, ensuring optimal resource efficiency—pay only for the compute time you actually use.

Key Features

- Instant Deployment: Launch GPU instances in seconds, minimizing setup time and accelerating project timelines.

- Diverse GPU Options: Access a wide range of GPUs, including NVIDIA H100s and A100s, catering to various computational needs.

- Serverless Endpoints: Deploy AI models with auto-scaling capabilities, ensuring efficient resource utilization during inference tasks.

- Pre-configured Templates: Choose from over 50 ready-to-use environments like PyTorch and TensorFlow, simplifying the setup process.

Runpod.io Limitations:

- Complexity for New Users: The array of configurations and options can be overwhelming for individuals new to cloud computing or AI workflows.

Runpod.io Pricing:

- H100 PCIe: Starting at $1.99/hr for Community Cloud and $2.39/hr for Secure Cloud.

- A100 PCIe: Starting at $1.19/hr for Community Cloud and $1.64/hr for Secure Cloud.

- RTX A6000: Starting at $0.33/hr for Community Cloud and $0.59/hr for Secure Cloud.

Storage is billed at $0.10/GB/month for running pods and $0.20/GB/month for idle volumes.
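To see how these rates combine, here is a minimal Python sketch that estimates the cost of one job from the starting prices listed above. The function name, the 30-day month used to prorate storage, and the example workload are assumptions made for illustration; this is not Runpod's billing logic, and real prices can differ from the starting rates.

```python
# Starting hourly rates quoted above (USD per GPU-hour); actual rates may vary.
RATES = {
    ("H100 PCIe", "community"): 1.99,
    ("H100 PCIe", "secure"): 2.39,
    ("A100 PCIe", "community"): 1.19,
    ("A100 PCIe", "secure"): 1.64,
    ("RTX A6000", "community"): 0.33,
    ("RTX A6000", "secure"): 0.59,
}
RUNNING_STORAGE_PER_GB_MONTH = 0.10  # running-pod storage rate quoted above
HOURS_PER_MONTH = 30 * 24            # assumption: prorate over a 30-day month


def estimate_job_cost(gpu, cloud, hours, storage_gb=0.0, gpu_count=1):
    """Rough cost of one job: GPU time plus prorated running-pod storage."""
    compute = RATES[(gpu, cloud)] * hours * gpu_count
    storage = storage_gb * RUNNING_STORAGE_PER_GB_MONTH * hours / HOURS_PER_MONTH
    return round(compute + storage, 2)


# Hypothetical workload: 10 hours on one Community Cloud A100 with 100 GB of storage.
print(estimate_job_cost("A100 PCIe", "community", hours=10, storage_gb=100))
```

For short jobs the GPU rate dominates; storage mainly matters for volumes that sit idle between runs, which are billed at the higher $0.20/GB/month rate.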

Runpod.io Ratings & Reviews

- On G2, Runpod.io holds a rating of 4.7 out of 5, based on user feedback.

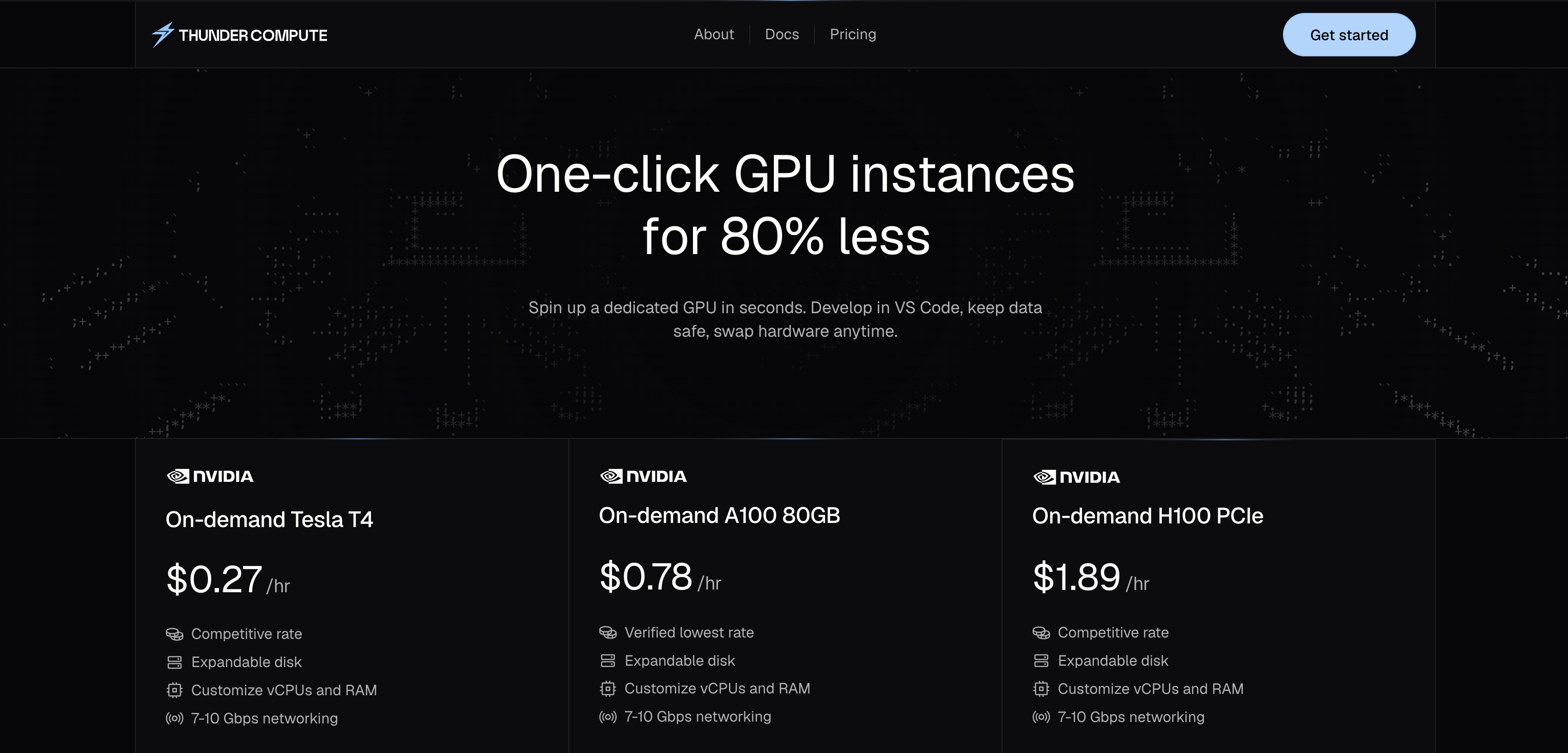

2. Thunder Compute

Via Thunder Compute

Thunder Compute is a cloud GPU platform designed for developers, startups, and research teams who require fast, affordable access to high-performance computing resources. It specializes in delivering GPU-accelerated virtual machines for AI/ML training, fine-tuning, and inference workloads—without the complexity of traditional cloud infrastructure.

Thunder Compute emphasizes simplicity and cost-efficiency, providing instant access to GPUs through a clean, developer-friendly interface. Its architecture is optimized for low overhead and high utilization, allowing users to deploy and manage workloads quickly while maintaining strong performance consistency.

Key Features

- Instant GPU Provisioning: Launch GPU-powered instances in seconds with one-click deployment, enabling rapid iteration for experiments, training, or inference.

- Developer-Focused Tools: Includes a VS Code extension, quick snapshot management, and customizable templates for common frameworks like PyTorch, TensorFlow, and vLLM.

- Transparent Pricing: Offers straightforward hourly rates with up to 80% savings compared to major hyperscalers, minimizing hidden costs and billing complexity.

- Global Accessibility: Provides access to GPUs across multiple regions, allowing users to deploy workloads closer to their teams or end users.

Thunder Compute Limitations

- Smaller Scale Footprint: While suitable for most AI workloads, it may not yet support extremely large multi-node training clusters or HPC-grade networking.

- Early-Stage Ecosystem: As a newer platform, Thunder Compute’s documentation and support network are still maturing.

Thunder Compute Pricing

- Thunder Compute operates on a pay-as-you-go model, charging by the hour for GPU usage with no long-term commitments.

- Pricing varies by GPU model and region; for instance, NVIDIA A100 instances start around $0.66 per hour, while RTX 4090 instances are among the most cost-efficient options for model fine-tuning and inference.

3. Google Compute Engine

Google Compute Engine (GCE) is ideal for organizations seeking scalable and customizable virtual machine (VM) solutions.

It caters to a wide range of workloads, from small-scale applications to large-scale enterprise operations.

GCE's flexibility allows users to select from various machine types, including standard, high-memory, high-CPU, and memory-optimized configurations, ensuring optimal performance for diverse computing needs.

Additionally, its integration with other Google Cloud services facilitates seamless deployment of complex applications, making it a preferred choice for businesses aiming to leverage cloud infrastructure without the burden of managing physical servers.

Key Features

- Custom Machine Types: Create VMs tailored to specific requirements, optimizing resource allocation and cost-efficiency.

- Global Network: Utilize Google's extensive global infrastructure to deploy applications closer to end-users, reducing latency and improving performance.

- Sustained Use Discounts: Benefit from automatic discounts for consistent usage, lowering operational costs without the need for long-term commitments.

Google Compute Engine Limitations:

- Complex Pricing Structure: Navigating GCE's pricing can be challenging due to various machine types, storage options, and additional services, potentially leading to unexpected costs.

- Steeper Learning Curve: Users new to Google Cloud may find GCE's extensive features and configurations overwhelming, requiring time to fully grasp and utilize its capabilities.

- Regional Resource Availability: Certain machine types or configurations might not be available in all regions, limiting deployment options based on geographic requirements.

Google Compute Engine Pricing:

- GCE operates on a pay-as-you-go model, charging per-second usage with a one-minute minimum.

- Pricing varies based on machine type, region, and additional resources like storage and networking.

- For instance, standard machine types start at approximately $0.070 per hour for a single vCPU with 3.75 GB RAM.
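Per-second billing with a one-minute minimum is easy to misread, so here is a small Python sketch of the rule as described above. The function names are hypothetical, the $0.070 hourly rate is the example figure from this section, and the sketch ignores discounts, storage, and networking charges.

```python
import math


def billed_seconds(runtime_seconds, minimum_seconds=60):
    """Seconds charged under per-second billing with a minimum charge."""
    return max(minimum_seconds, math.ceil(runtime_seconds))


def vm_cost(runtime_seconds, hourly_rate=0.070):
    """Approximate VM cost in USD for one run at a flat hourly rate."""
    return billed_seconds(runtime_seconds) * hourly_rate / 3600


# A 20-second run is charged as a full minute; a 90-second run as 90 seconds.
for runtime in (20, 90, 3600):
    print(runtime, billed_seconds(runtime), f"${vm_cost(runtime):.4f}")
```

The practical takeaway is that very short, frequently restarted jobs pay proportionally more than long-running ones.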

4. CoreWeave

Via CoreWeave

CoreWeave specializes in providing high-performance cloud computing services optimized for compute-intensive workloads, such as artificial intelligence (AI) model training, machine learning, visual effects (VFX) rendering, and scientific simulations.

Its infrastructure is designed to deliver exceptional performance, making it particularly suitable for organizations requiring substantial computational power without the need for significant upfront hardware investments.

CoreWeave's ability to offer tailored solutions for industries like entertainment, finance, and research institutions positions it as a valuable partner for businesses aiming to accelerate innovation and reduce time-to-market for their products and services.

Key Features

- Diverse GPU Options: Access to a wide range of NVIDIA GPUs, including the latest H100 Tensor Core GPUs, enabling users to select the most appropriate hardware for their specific workloads.

- Flexible Configurations: Customizable virtual machines and bare-metal servers allow users to optimize resource allocation based on their unique requirements.

- Managed Kubernetes Service: Simplifies container orchestration, facilitating seamless deployment and scaling of applications.

- High-Performance Storage: Offers scalable and secure storage solutions with ultra-fast access, supporting the demands of data-intensive applications.

- Global Data Center Footprint: Operates multiple data centers across North America and Europe, ensuring low-latency access and redundancy.

CoreWeave Limitations:

- NVIDIA-Centric Infrastructure: CoreWeave's focus on NVIDIA GPUs may limit options for users seeking alternative hardware solutions.

- Service Delivery Challenges: There have been reports of delivery issues and missed deadlines, leading to the termination of some contracts with major clients.

CoreWeave Pricing:

- On-demand pricing for an A100 PCIe GPU has been listed at approximately $2.46 per hour.

- CoreWeave offers reserved capacity options, providing discounts of up to 60% over on-demand prices for committed usage.
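As a rough illustration of what the reserved discount means in practice, the Python sketch below applies the maximum 60% discount to the A100 PCIe rate quoted above. The function name is hypothetical, and because the discount is quoted as "up to" 60%, the result is a best case rather than a quoted price.

```python
def discounted_rate(on_demand_rate, discount):
    """Hourly rate after a fractional discount (0.60 means 60% off)."""
    if not 0.0 <= discount <= 1.0:
        raise ValueError("discount must be between 0 and 1")
    return on_demand_rate * (1.0 - discount)


# Best case for the A100 PCIe figure above: 60% off $2.46 per hour.
print(f"${discounted_rate(2.46, 0.60):.2f} per hour")
```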

5. NVIDIA Virtual GPU

Via Nvidia vGPU

NVIDIA's Virtual GPU (vGPU) technology is ideal for organizations seeking to deliver high-performance, graphics-rich virtual workstations and applications to a distributed workforce.

It excels in environments where professionals require access to compute-intensive applications, such as 3D design, data science, and AI workloads, all within a virtualized infrastructure.

This solution is particularly beneficial for industries like architecture, engineering, media and entertainment, and healthcare, where remote collaboration and resource flexibility are key.

Key Features

- High-Performance Virtualization: Delivers GPU performance virtually indistinguishable from physical workstations, enabling seamless handling of demanding applications.

- Resource Allocation Flexibility: Allows provisioning of GPU resources with fractional or multi-GPU virtual machine (VM) instances, optimizing utilization based on workload requirements.

- Advanced Management and Monitoring: Integrates with common data center management tools, supporting features like live migration for continuous operation during maintenance.

- Enhanced Security: Ensures data remains secure within the data center, reducing the risk associated with endpoint devices.

NVIDIA Virtual GPU Limitations:

- Hardware Dependency: Requires compatible NVIDIA GPUs and certified servers, which may necessitate additional investment in specific hardware.

- Licensing Costs: Involves purchasing software licenses per concurrent user or per GPU, potentially increasing operational expenses.

- Complex Implementation: Deploying vGPU solutions can be complex, requiring specialized knowledge for optimal configuration and management.

NVIDIA Virtual GPU Pricing:

- Pricing starts at approximately $20 per concurrent user (CCU) for a perpetual license, with an additional annual Support, Upgrade, and Maintenance (SUMs) fee of $5 per CCU, typically requiring a minimum of four years upfront, totaling $40 per CCU.
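The $40 figure follows from adding four years of SUMs to the perpetual license. A minimal Python sketch of that arithmetic, using only the approximate figures above (the function name and the 50-user example are assumptions, not NVIDIA's price list):

```python
def vgpu_upfront_cost(ccu_count, license_per_ccu=20.0,
                      sums_per_ccu_year=5.0, sums_years=4):
    """Upfront cost in USD: perpetual license plus prepaid SUMs, per CCU."""
    per_ccu = license_per_ccu + sums_per_ccu_year * sums_years
    return per_ccu * ccu_count


print(vgpu_upfront_cost(1))   # one concurrent user: 20 + 5 * 4
print(vgpu_upfront_cost(50))  # hypothetical 50-user deployment
```

This covers software licensing only; the compatible GPUs and certified servers mentioned under Limitations are a separate cost.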

6. Amazon EC2 UltraClusters

Amazon EC2 UltraClusters are designed for organizations requiring massive computational power for complex workloads, such as large-scale machine learning (ML) training, high-performance computing (HPC) applications, and generative AI tasks.

By integrating thousands of accelerated EC2 instances within a single AWS Availability Zone, UltraClusters provide on-demand access to supercomputing capabilities, enabling faster time-to-solution and accelerated innovation.

Key Features

- Massive Scalability: Scale from a few to thousands of NVIDIA A100 Tensor Core GPUs, accommodating the most demanding ML and HPC workloads.

- High-Performance Networking: Utilize Elastic Fabric Adapter (EFA) networking with up to 400 Gbps bandwidth, ensuring low-latency and high-throughput inter-instance communication.

- Integrated Storage Solutions: Access Amazon FSx for Lustre, a fully managed high-performance file system, facilitating rapid data processing with sub-millisecond latencies.

Amazon EC2 UltraClusters Limitations:

- Complexity: Deploying and managing UltraClusters may require specialized expertise in distributed computing and AWS services.

- Cost Considerations: The substantial computational resources come with significant costs, which may not be feasible for smaller organizations or projects with limited budgets.

Amazon EC2 UltraClusters Pricing:

Amazon EC2 UltraClusters operate on a pay-as-you-go model, with pricing varying based on instance types and configurations. For example:

- p4de.24xlarge Instance: Features 96 vCPUs, 1.1 TB RAM, and 8 NVIDIA A100 GPUs, priced at approximately $40.97 per hour.

- p5 Instances: Offer up to 8 NVIDIA H100 GPUs with enhanced performance, delivering up to 4x the performance of previous-generation GPU-based instances.
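Because multi-GPU instances are priced per instance rather than per GPU, it helps to normalize to a per-GPU-hour figure before comparing providers. The Python sketch below does this for the A100 prices quoted in this guide. The helper name is hypothetical, and the comparison is only approximate: the figures cover different A100 variants, regions, and bundled CPU, memory, and networking.

```python
def per_gpu_hour(instance_hourly_price, gpu_count):
    """Normalize an instance price to USD per GPU-hour."""
    return instance_hourly_price / gpu_count


# A100 figures quoted in this guide (approximate starting prices).
a100_prices = {
    "AWS p4de.24xlarge (8 GPUs)": per_gpu_hour(40.97, 8),
    "CoreWeave A100 PCIe": 2.46,
    "Runpod A100 PCIe (Secure Cloud)": 1.64,
    "Thunder Compute A100": 0.66,
}

# List providers from cheapest to most expensive per GPU-hour.
for name, price in sorted(a100_prices.items(), key=lambda item: item[1]):
    print(f"{name}: ${price:.2f} per GPU-hour")
```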

7. IBM GPU Cloud Server

IBM GPU Cloud Servers excel at empowering enterprises that depend heavily on intensive computing tasks such as complex analytics, deep neural network training, and high-performance graphics rendering.

Unlike standard cloud services, IBM's GPU servers offer a potent blend of powerful NVIDIA GPUs and customizable infrastructure, enabling faster processing speeds and improved performance for data-heavy applications.

This makes IBM GPU Cloud Servers especially appealing to industries requiring precision and speed—like financial services, scientific research, medical imaging, and media production. Its unique combination of bare-metal and virtual GPU options provides organizations the flexibility to precisely scale their computing power to their immediate needs.

Key Features

- Flexible Server Configurations: Offers both bare metal and virtual servers equipped with NVIDIA GPUs, allowing customization based on workload requirements.

- High-Performance Networking: Supports up to 40 Gbps networking, ensuring rapid data transfer and low latency, essential for HPC applications.

- Global Data Center Presence: Access to a globally distributed network of data centers, providing redundancy and compliance with regional data regulations.

IBM GPU Cloud Server Limitations:

- Higher Cost: IBM Cloud services are generally perceived as more expensive compared to competitors like AWS, Azure, or Google Cloud, which may impact cost-sensitive projects.

- Complex Setup: The initial setup and configuration can be less intuitive, potentially requiring specialized knowledge to optimize the environment effectively.

IBM GPU Cloud Server Pricing:

- Virtual Servers: Pricing starts at approximately $0.038 per hour or $25 per month for a public node with 1 core and 1 GB of RAM.

- GPU Servers: Servers equipped with an NVIDIA Tesla M60 GPU start at $3.50 per hour or $1,709 per month, catering to HPC, deep learning, and AI use cases.

- Promotional Offers: IBM provides promotional credits, such as $1,500 off for Virtual Server for VPC NVIDIA L40S GPU profiles for three months, using the promo code GPU1500. (Valid till 31st March)

8. Corvex.ai

Via Corvex.ai

Corvex.ai is a high-powered, private AI cloud platform designed specifically for organizations tackling some of the toughest computational challenges today.

If you're building massive generative AI models, training large language models, or running intensive scientific research, Corvex.ai offers exactly the kind of heavy-duty infrastructure you need.

It sets itself apart by providing dedicated environments equipped with cutting-edge NVIDIA GPUs and CPUs, ensuring your workloads run smoothly, quickly, and securely at lower costs than traditional big-name cloud providers.

With ultra-fast GPU connections, generous built-in storage, and easy scaling options, teams can effortlessly manage huge datasets and speed up their AI development process.

Corvex.ai is especially beneficial for industries like finance, healthcare, media, and research organizations—anywhere groundbreaking performance and robust security matter most.

Key Features

- Dedicated AI Supercomputers: Offers NVIDIA GB200 NVL72 configurations with 72 Blackwell GPUs and 36 Grace CPUs, providing exceptional computational power for demanding AI tasks.

- Interconnectivity: Features 130 TB/s NVLink interconnect, facilitating seamless communication between GPUs for efficient parallel processing.

- Secure Hosting: Ensures data protection with SOC2-certified, Tier III U.S. data centers, maintaining high standards of security and reliability.

Corvex.ai Limitations:

- Complexity of Implementation: May require specialized expertise, potentially posing challenges for organizations without dedicated IT resources.

- Cost Considerations: Requires a significant investment, which could be a barrier for smaller enterprises or startups.

Corvex.ai Pricing:

- NVIDIA GB200 NVL72 Servers: Starting at $4.49 per hour, featuring 72 Blackwell GPUs and 36 Grace CPUs.

- NVIDIA B200 Servers: Starting at $3.19 per hour, equipped with 8 NVIDIA Blackwell GPUs per server.

- NVIDIA H200 Servers: Starting at $2.15 per hour, featuring 8 H200 SXM5 GPUs and 2 Xeon 8568Y+ CPUs.

Conclusion: Why Runpod.io Is Still a Leading Choice

We’re biased, but we believe Runpod.io remains a top contender among GPU cloud computing platforms by consistently delivering a powerful combination of speed, flexibility, and affordability.

While the market grows crowded with options, Runpod continues to win user trust through its intuitive user experience, lightning-fast GPU deployment, and responsive support—addressing user concerns proactively.

If you're evaluating AI and machine learning solutions, here are ten standout reasons why Runpod.io still deserves your attention:

- Instant GPU Deployment (pods launch in milliseconds)

- Wide Variety of GPUs (including NVIDIA H100 and A100 GPUs)

- Flexible Serverless Computing (pay-per-second, auto-scaling)

- Global Infrastructure (data centers in 30+ regions)

- Competitive Pricing (cost-effective for teams of all sizes)

- User-Friendly Interface (pre-built templates for rapid setup)

- Robust Security (SOC2-certified infrastructure)

- High Availability (minimal downtime, high reliability)

- Scalable Performance (supports large-scale AI model training and inference)

- Outstanding User Support (consistently positive user testimonials)

Interested in trying out the powerful GPUs by Runpod.io? Get started here.
