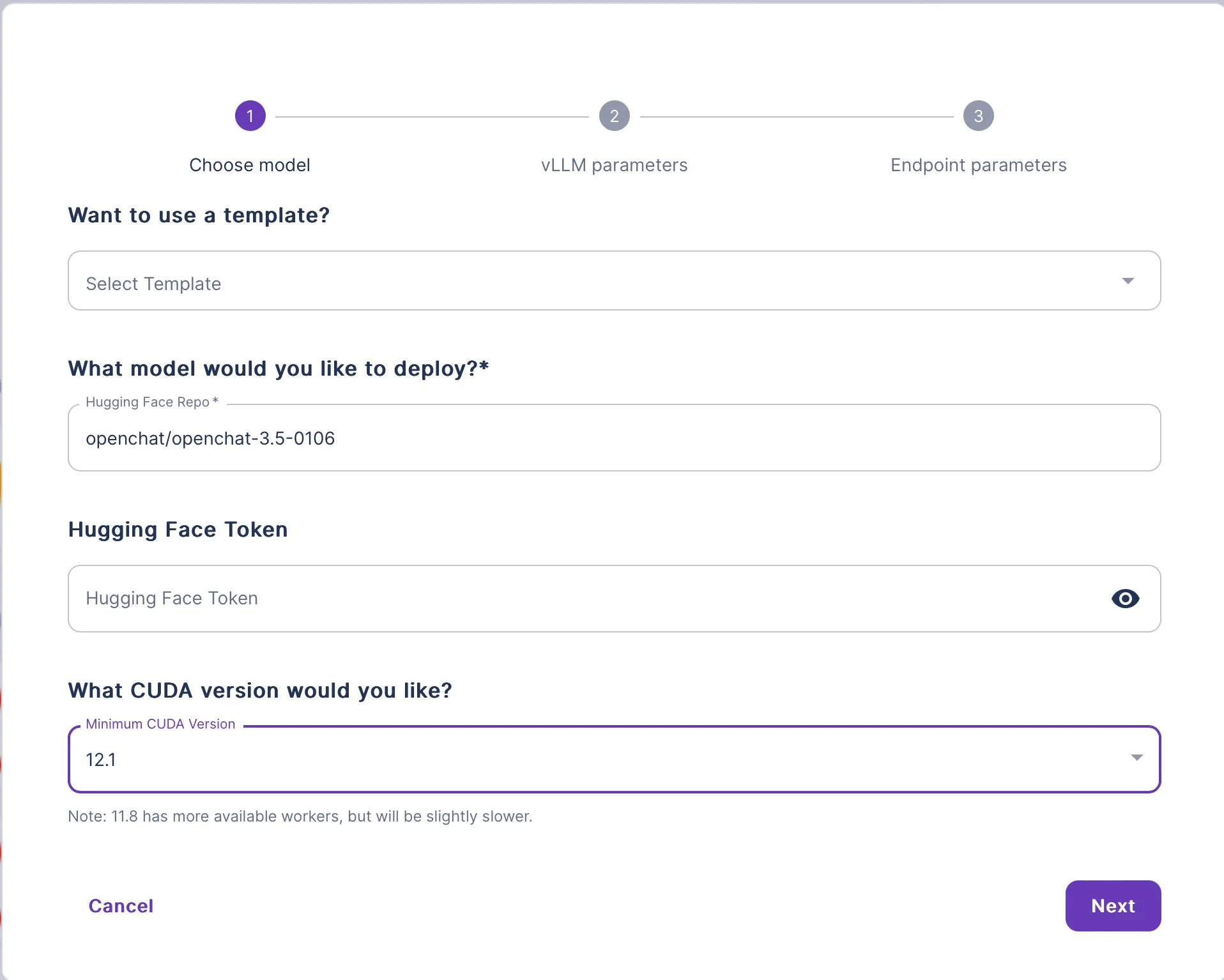

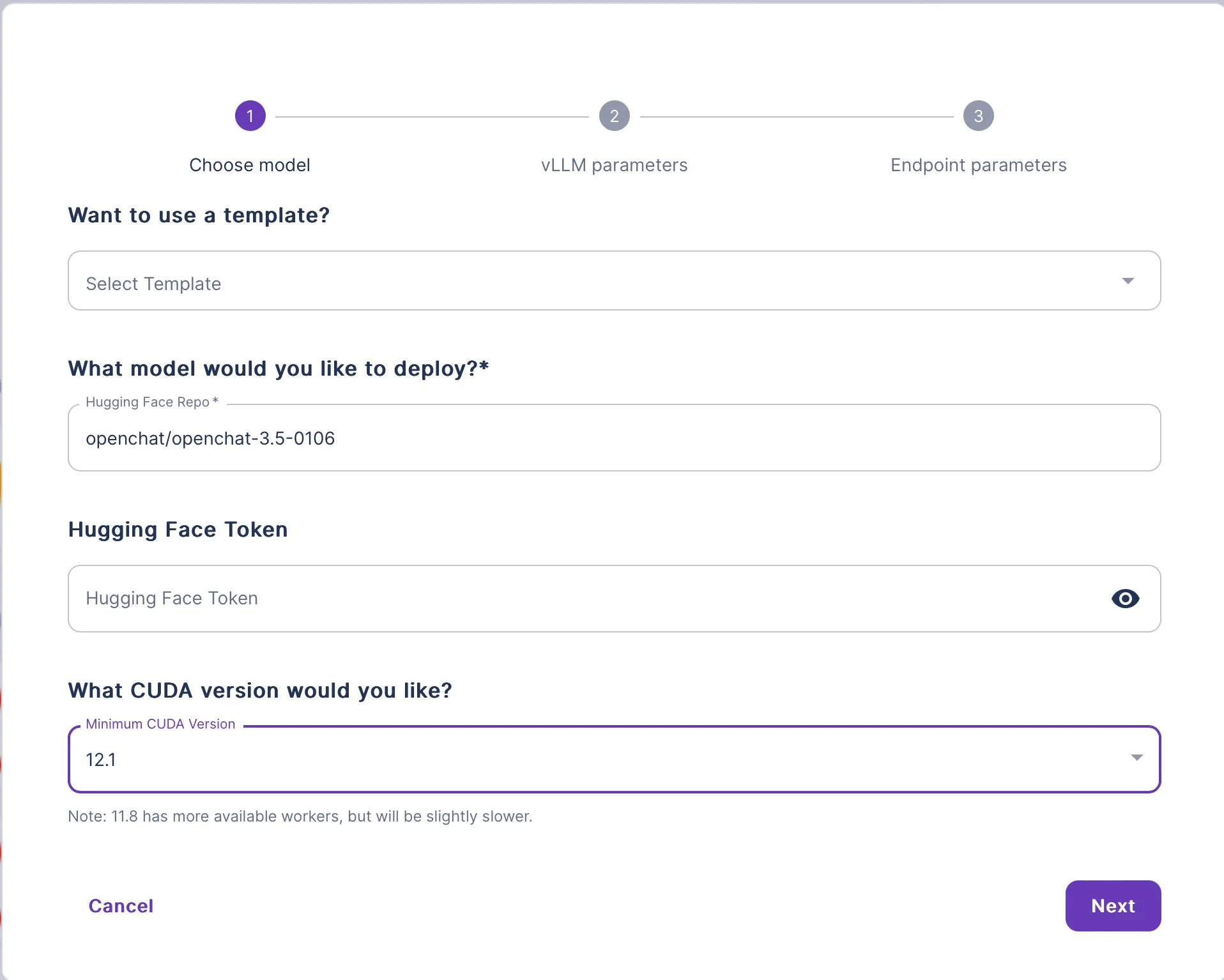

Runpod introduces Configurable Templates, a feature that lets users deploy and run any large language model that vLLM supports.

With this feature, users provide the Hugging Face model name and customize the template parameters to create an endpoint tailored to their needs.

Configurable Templates offer several benefits to users:

Follow these steps to deploy a large language model using Configurable Templates:

Once the deployment is complete, your LLM will be accessible via an Endpoint. You can interact with your model using the provided API.
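As a rough illustration of what calling the deployed Endpoint might look like, here is a minimal Python sketch that uses only the standard library. The URL pattern (`https://api.runpod.ai/v2/<endpoint_id>/runsync`), the bearer-token header, and the `{"input": {"prompt": ...}}` payload shape are assumptions that this post does not state, and the helper names `build_request` and `run_prompt` are hypothetical. Check your endpoint's page for the exact request format before relying on it.

```python
import json
import urllib.request

# Assumed base URL for serverless endpoint requests; confirm it in the console.
API_BASE = "https://api.runpod.ai/v2"


def build_request(endpoint_id: str, api_key: str, prompt: str) -> urllib.request.Request:
    """Build (but do not send) a POST request carrying a single prompt."""
    # Assumed payload shape: the prompt nested under an "input" object.
    payload = {"input": {"prompt": prompt}}
    return urllib.request.Request(
        url=f"{API_BASE}/{endpoint_id}/runsync",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def run_prompt(endpoint_id: str, api_key: str, prompt: str, timeout: float = 120.0) -> dict:
    """Send the request and return the decoded JSON response body."""
    request = build_request(endpoint_id, api_key, prompt)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.loads(response.read().decode("utf-8"))
```

Calling `run_prompt("YOUR_ENDPOINT_ID", "YOUR_API_KEY", "Hello")` would send one prompt and return the parsed response. Building the request in a separate function makes it easy to inspect the URL, headers, and body without making a network call.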

💡 With Configurable Templates, Runpod supports any model architecture that can run on vLLM.

By integrating vLLM into the Configurable Templates feature, Runpod simplifies the process of deploying and running large language models. Users can focus on selecting their desired model and customizing the template parameters, while vLLM takes care of the low-level details of model loading, hardware configuration, and execution.
