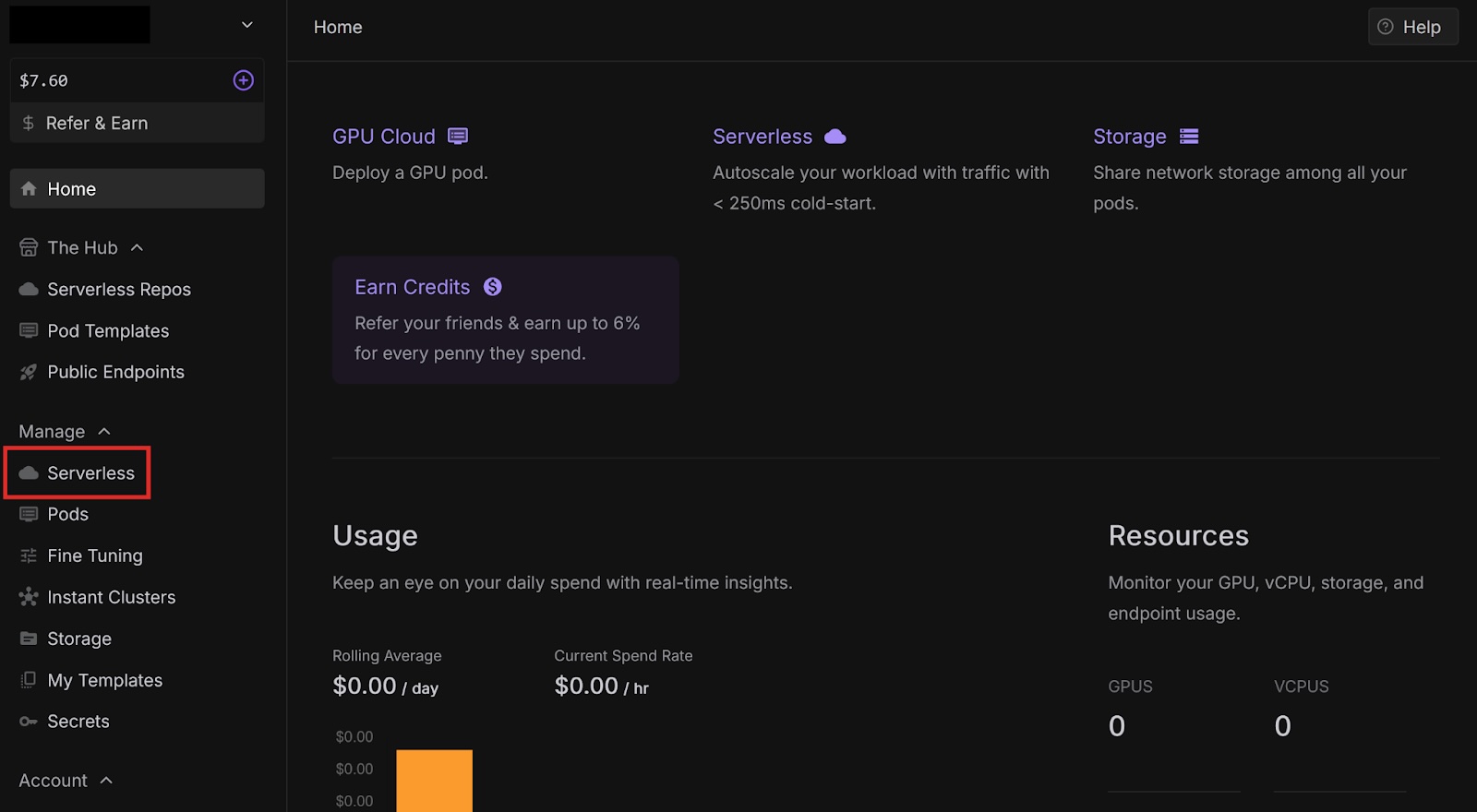

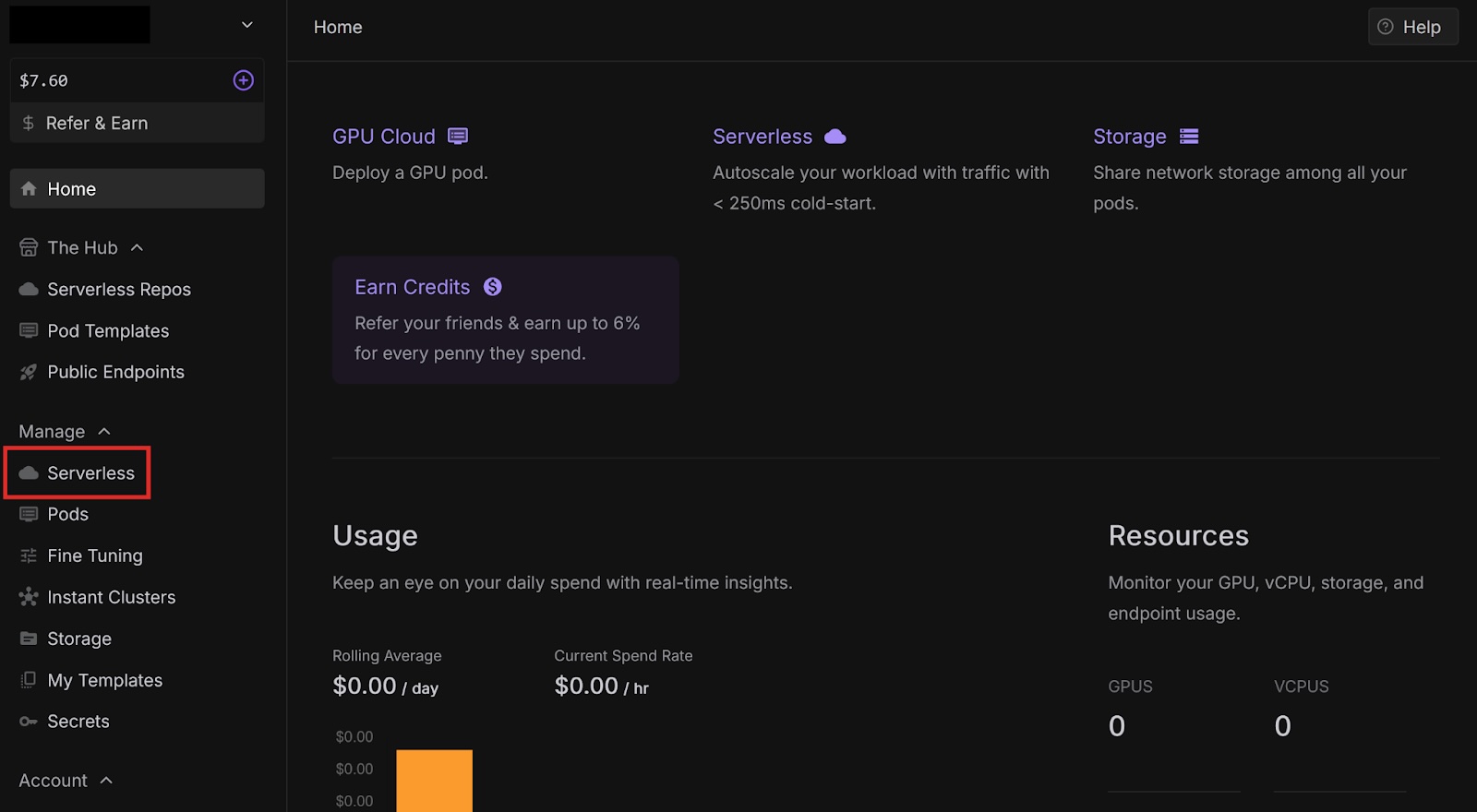

In a previous blog post, we explored Runpod Serverless, a pay-as-you-go cloud computing solution that doesn’t require managing servers to scale and maintain your applications. We deployed some basic code from templates that just printed some text to the console, but now let’s do something more performance-intensive.

ComfyUI is an open-source, node-based application for generative AI workflows. You can deploy ComfyUI as an API endpoint on Runpod Serverless, send workflows via API calls, and receive AI-generated images in response.

In this blog post you’ll learn how to:

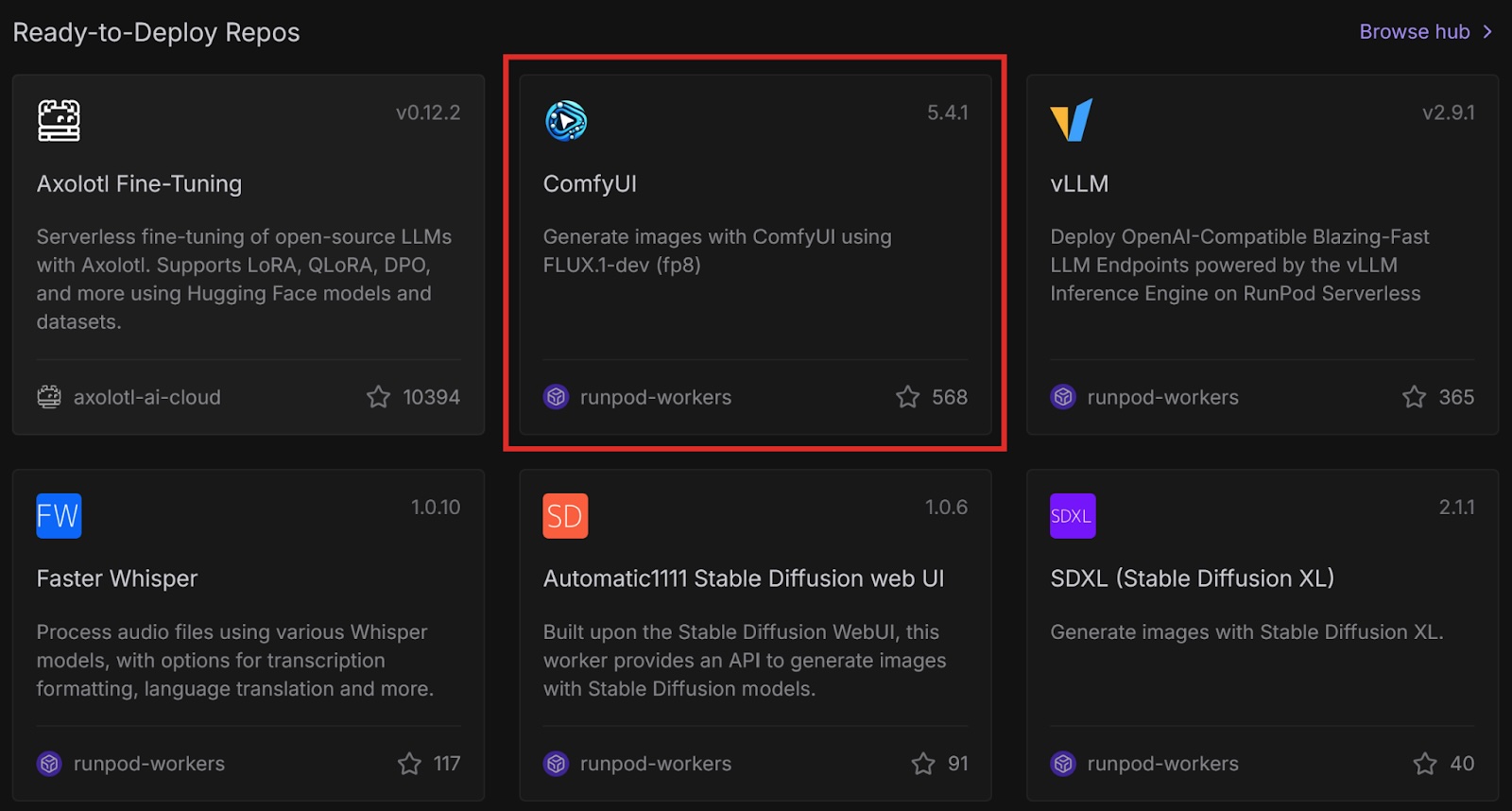

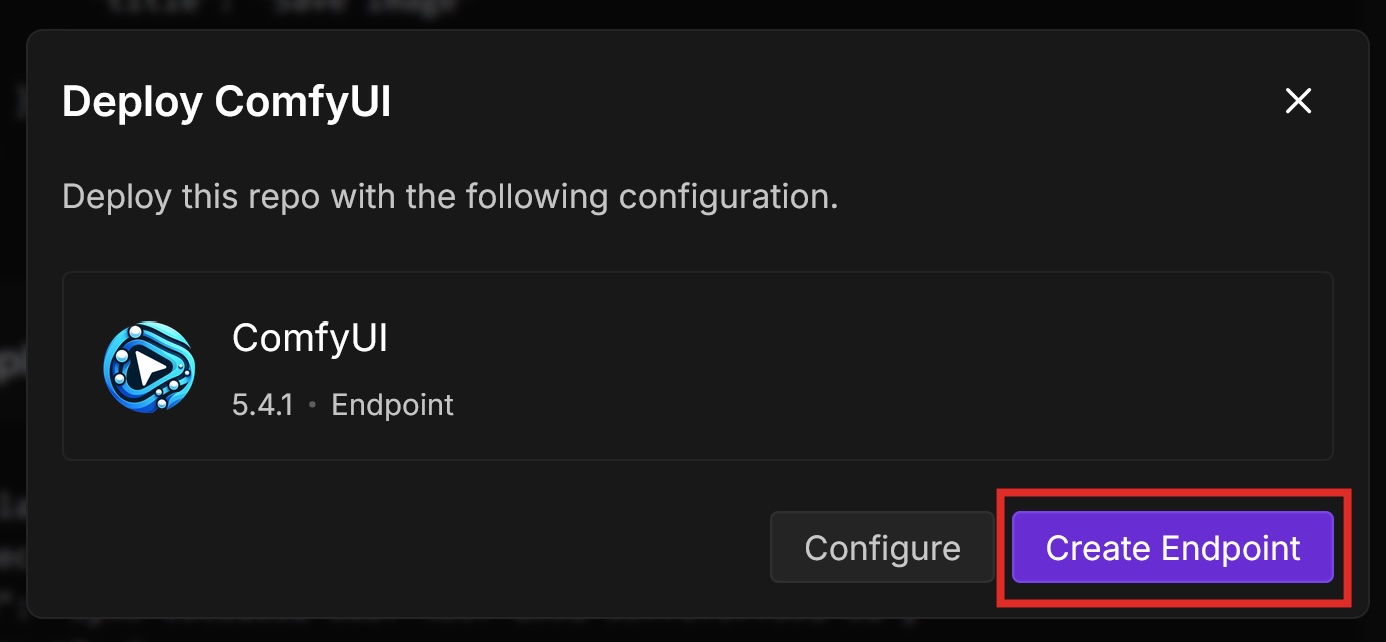

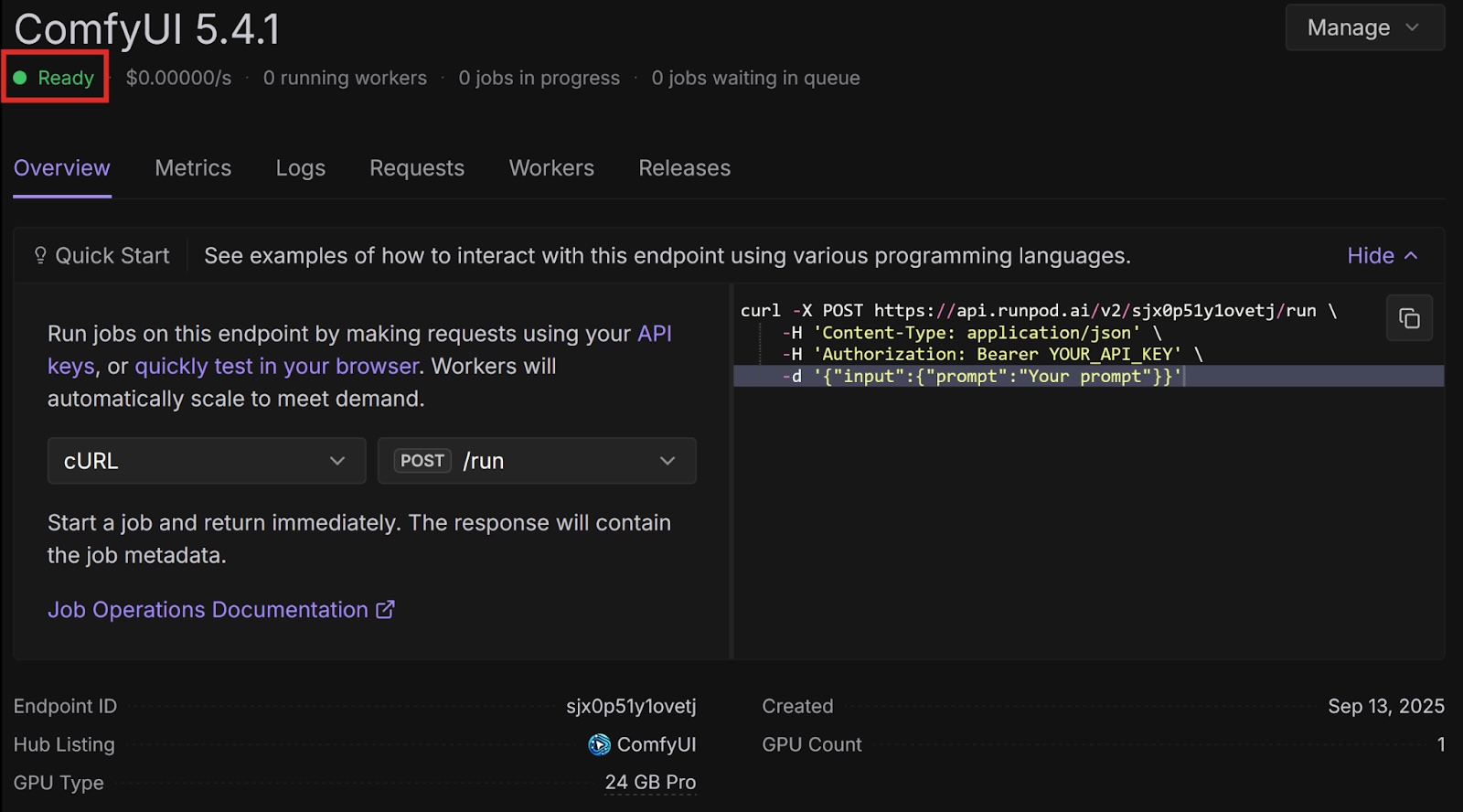

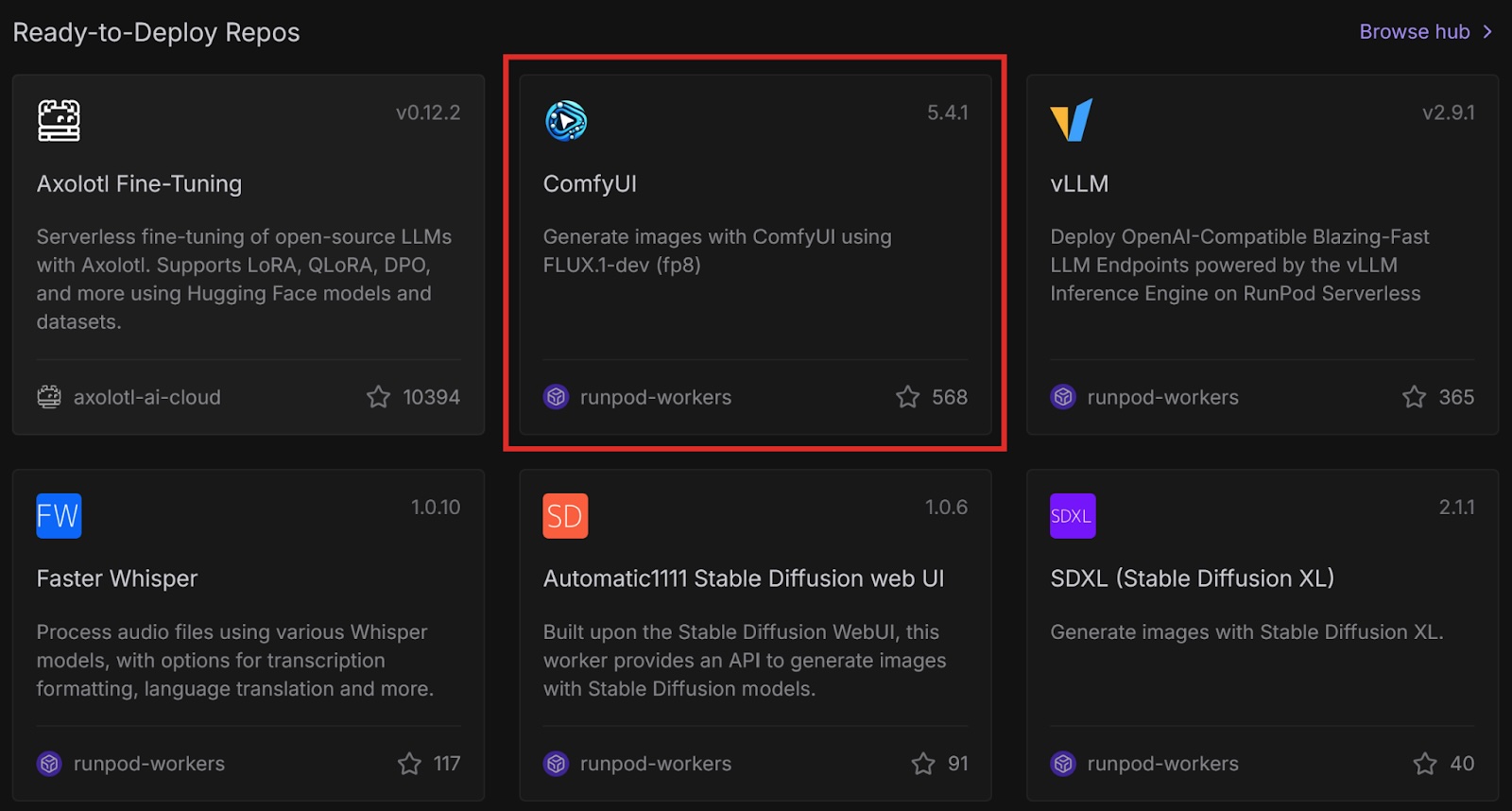

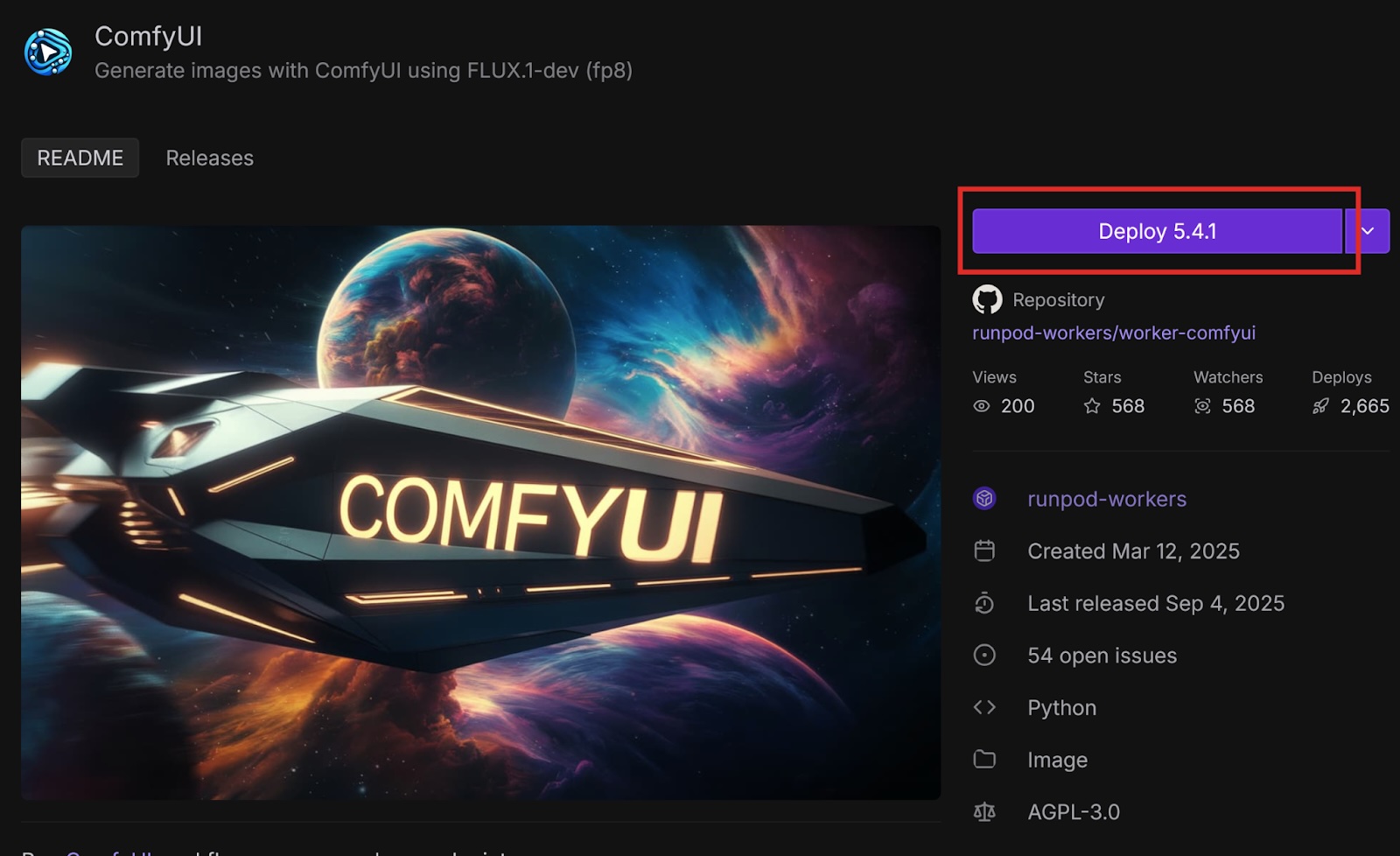

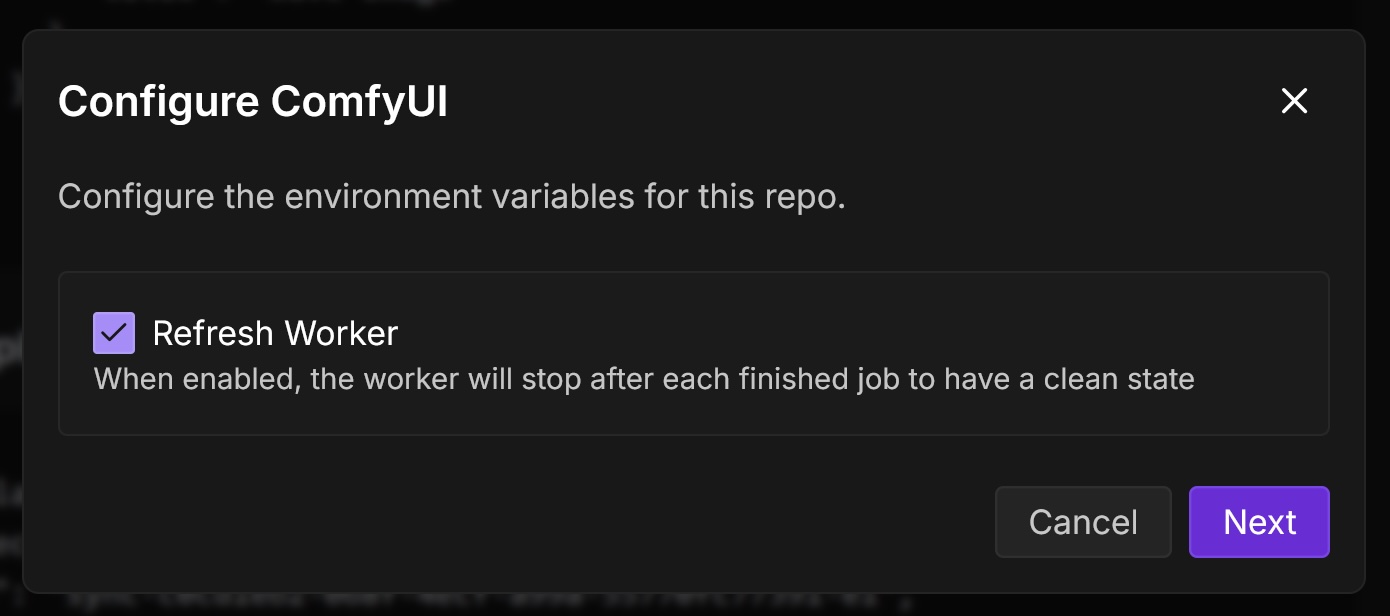

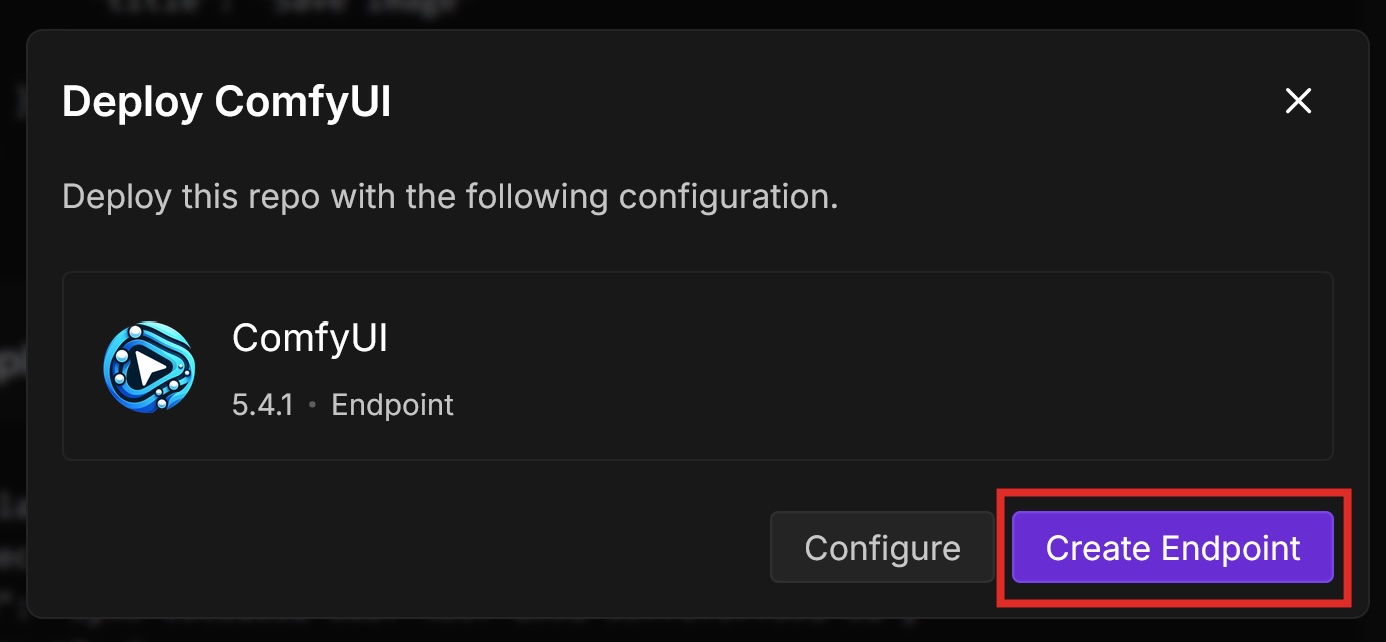

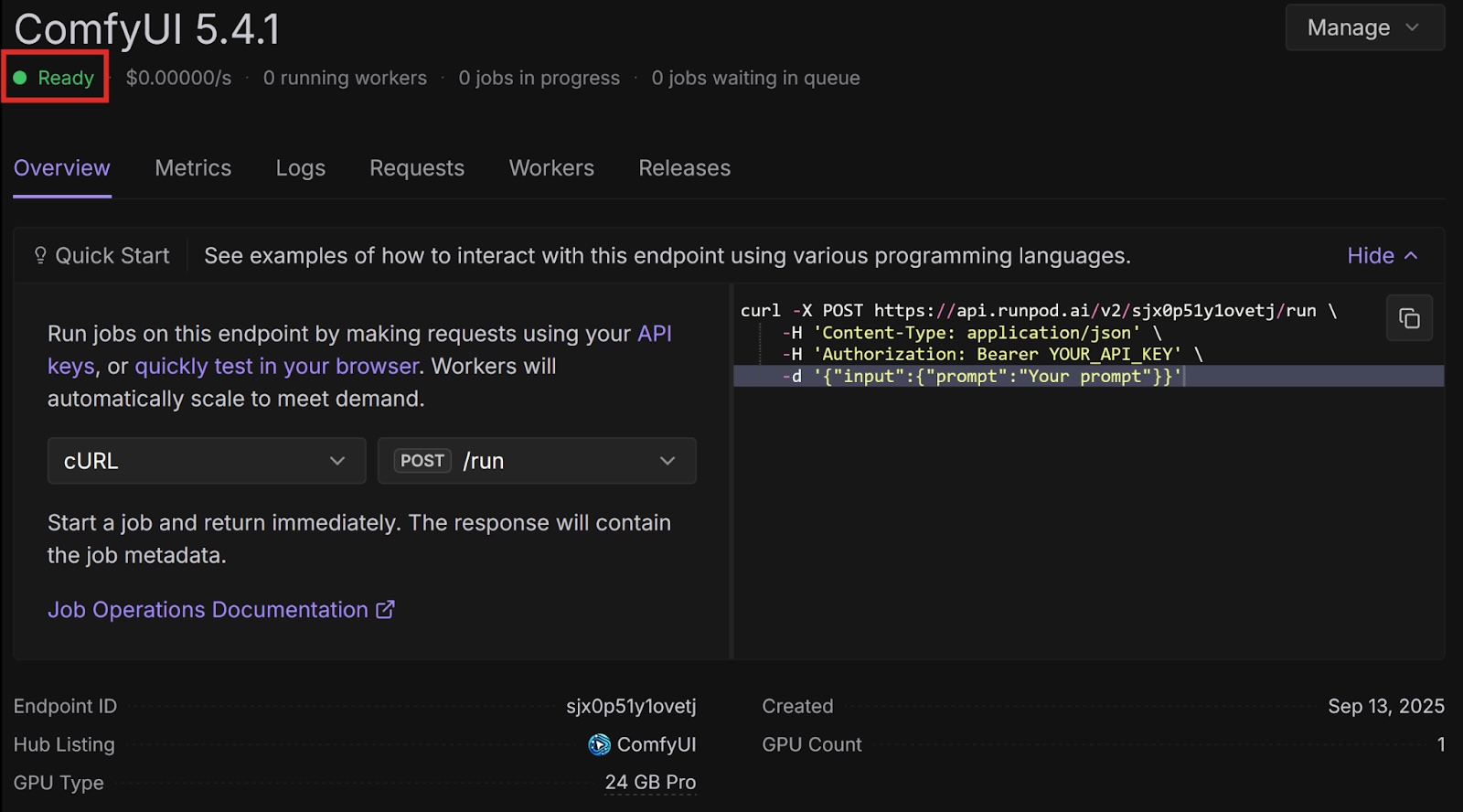

Runpod Hub provides convenient repositories that you can quickly deploy to Runpod Serverless without much setup. Let’s deploy the ComfyUI repo from Runpod Hub to a serverless endpoint, which will allow us to make requests to it from code.

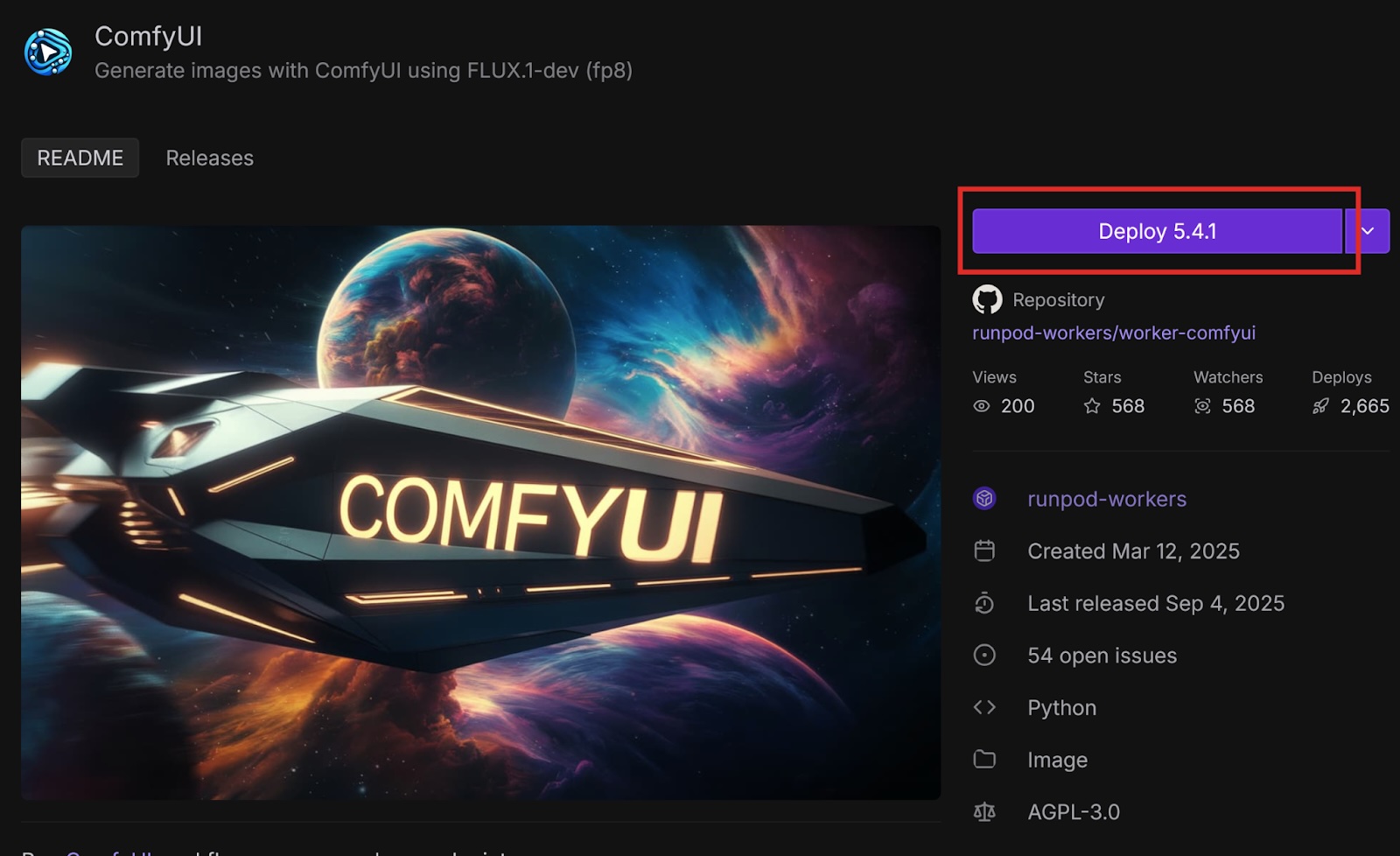

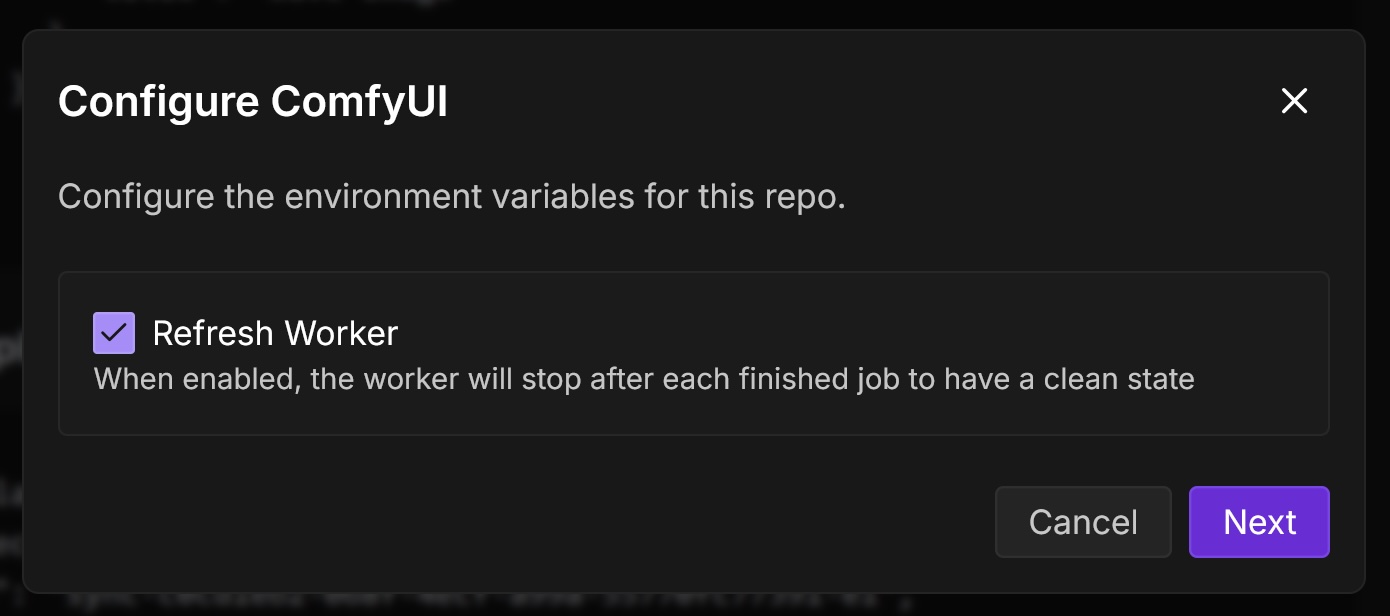

This is a ready-to-deploy template from the Runpod Hub. It uses the FLUX.1-dev-fp8 model and only works with this model. Later in this post, we will deploy this template with other models using Docker.

import base64

import requests

import runpod

Requests to ComfyUI return images as base64 strings by default, so we need the base64 library to decode them.

The requests library helps us send requests to our API endpoint.
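To make the decoding step concrete, here is a minimal sketch of a helper that pulls the first image out of a response body and writes it to disk. It assumes the response layout used later in this post (`output`, then `images`, then `data`). The function name `save_first_image` and the stand-in payload are made up for illustration, and a real response may carry additional fields or an error instead of `output`.

```python
import base64
from pathlib import Path


def save_first_image(result: dict, path: str) -> int:
    """Decode the first base64 image in `result` and write it to `path`.

    Returns the number of bytes written. The output -> images -> data
    layout is an assumption taken from the response handling in this post.
    """
    images = result.get("output", {}).get("images", [])
    if not images:
        raise ValueError("response contains no images")
    img_bytes = base64.b64decode(images[0]["data"])
    Path(path).write_bytes(img_bytes)
    return len(img_bytes)


# Stand-in payload so the sketch runs without calling the endpoint.
fake_result = {
    "output": {
        "images": [
            {"data": base64.b64encode(b"not a real image").decode("ascii")}
        ]
    }
}
```

With a real endpoint you would call it as `save_first_image(response.json(), "image.png")` once the request has returned.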

headers = {

'Content-Type': 'application/json',

'Authorization': 'Bearer <YOUR API KEY>'

}

data = {

"input": {

"workflow": {

"6": {

"inputs": {

"text": "anime cat with massive fluffy fennec ears and a big fluffy tail blonde messy long hair blue eyes wearing a construction outfit placing a fancy black forest cake with candles on top of a dinner table of an old dark Victorian mansion lit by candlelight with a bright window to the foggy forest and very expensive stuff everywhere there are paintings on the walls",

"clip": ["30", 1]

},

"class_type": "CLIPTextEncode",

"_meta": {

"title": "CLIP Text Encode (Positive Prompt)"

}

},

"8": {

"inputs": {

"samples": ["31", 0],

"vae": ["30", 2]

},

"class_type": "VAEDecode",

"_meta": {

"title": "VAE Decode"

}

},

"9": {

"inputs": {

"filename_prefix": "ComfyUI",

"images": ["8", 0]

},

"class_type": "SaveImage",

"_meta": {

"title": "Save Image"

}

},

"27": {

"inputs": {

"width": 512,

"height": 512,

"batch_size": 1

},

"class_type": "EmptySD3LatentImage",

"_meta": {

"title": "EmptySD3LatentImage"

}

},

"30": {

"inputs": {

"ckpt_name": "flux1-dev-fp8.safetensors"

},

"class_type": "CheckpointLoaderSimple",

"_meta": {

"title": "Load Checkpoint"

}

},

"31": {

"inputs": {

"seed": 243057879077961,

"steps": 10,

"cfg": 1,

"sampler_name": "euler",

"scheduler": "simple",

"denoise": 1,

"model": ["30", 0],

"positive": ["35", 0],

"negative": ["33", 0],

"latent_image": ["27", 0]

},

"class_type": "KSampler",

"_meta": {

"title": "KSampler"

}

},

"33": {

"inputs": {

"text": "",

"clip": ["30", 1]

},

"class_type": "CLIPTextEncode",

"_meta": {

"title": "CLIP Text Encode (Negative Prompt)"

}

},

"35": {

"inputs": {

"guidance": 3.5,

"conditioning": ["6", 0]

},

"class_type": "FluxGuidance",

"_meta": {

"title": "FluxGuidance"

}

},

"38": {

"inputs": {

"images": ["8", 0]

},

"class_type": "PreviewImage",

"_meta": {

"title": "Preview Image"

}

},

"40": {

"inputs": {

"filename_prefix": "ComfyUI",

"images": ["8", 0]

},

"class_type": "SaveImage",

"_meta": {

"title": "Save Image"

}

}

}

}

}

response = requests.post(

'<YOUR ENDPOINT URL>',

headers=headers,

json=data)

json = response.json()

base64_string = json['output']['images'][0]['data']

imgdata = base64.b64decode(base64_string)

filename = 'image.png'

with open(filename, 'wb') as f:
    f.write(imgdata)

Putting it all together, the complete script looks like this:

import base64

import requests

import runpod

headers = {

'Content-Type': 'application/json',

'Authorization': 'Bearer <YOUR API KEY>'

}

data = {

"input": {

"workflow": {

"6": {

"inputs": {

"text": "anime cat with massive fluffy fennec ears and a big fluffy tail blonde messy long hair blue eyes wearing a construction outfit placing a fancy black forest cake with candles on top of a dinner table of an old dark Victorian mansion lit by candlelight with a bright window to the foggy forest and very expensive stuff everywhere there are paintings on the walls",

"clip": ["30", 1]

},

"class_type": "CLIPTextEncode",

"_meta": {

"title": "CLIP Text Encode (Positive Prompt)"

}

},

"8": {

"inputs": {

"samples": ["31", 0],

"vae": ["30", 2]

},

"class_type": "VAEDecode",

"_meta": {

"title": "VAE Decode"

}

},

"9": {

"inputs": {

"filename_prefix": "ComfyUI",

"images": ["8", 0]

},

"class_type": "SaveImage",

"_meta": {

"title": "Save Image"

}

},

"27": {

"inputs": {

"width": 512,

"height": 512,

"batch_size": 1

},

"class_type": "EmptySD3LatentImage",

"_meta": {

"title": "EmptySD3LatentImage"

}

},

"30": {

"inputs": {

"ckpt_name": "flux1-dev-fp8.safetensors"

},

"class_type": "CheckpointLoaderSimple",

"_meta": {

"title": "Load Checkpoint"

}

},

"31": {

"inputs": {

"seed": 243057879077961,

"steps": 10,

"cfg": 1,

"sampler_name": "euler",

"scheduler": "simple",

"denoise": 1,

"model": ["30", 0],

"positive": ["35", 0],

"negative": ["33", 0],

"latent_image": ["27", 0]

},

"class_type": "KSampler",

"_meta": {

"title": "KSampler"

}

},

"33": {

"inputs": {

"text": "",

"clip": ["30", 1]

},

"class_type": "CLIPTextEncode",

"_meta": {

"title": "CLIP Text Encode (Negative Prompt)"

}

},

"35": {

"inputs": {

"guidance": 3.5,

"conditioning": ["6", 0]

},

"class_type": "FluxGuidance",

"_meta": {

"title": "FluxGuidance"

}

},

"38": {

"inputs": {

"images": ["8", 0]

},

"class_type": "PreviewImage",

"_meta": {

"title": "Preview Image"

}

},

"40": {

"inputs": {

"filename_prefix": "ComfyUI",

"images": ["8", 0]

},

"class_type": "SaveImage",

"_meta": {

"title": "Save Image"

}

}

}

}

}

response = requests.post('<YOUR ENDPOINT URL>', headers=headers, json=data)

json = response.json()

base64_string = json['output']['images'][0]['data']

imgdata = base64.b64decode(base64_string)

filename = 'image.png'

with open(filename, 'wb') as f:

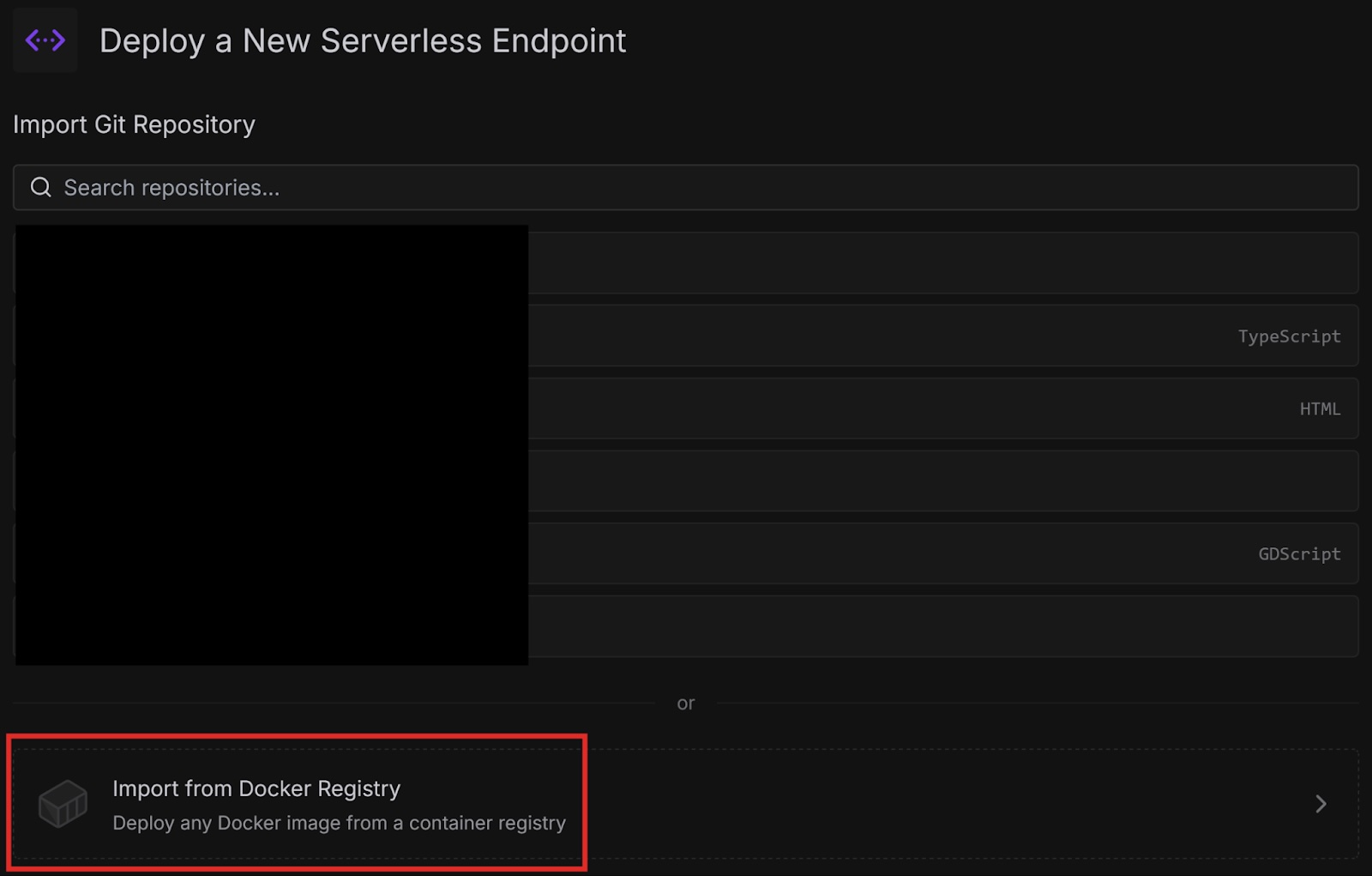

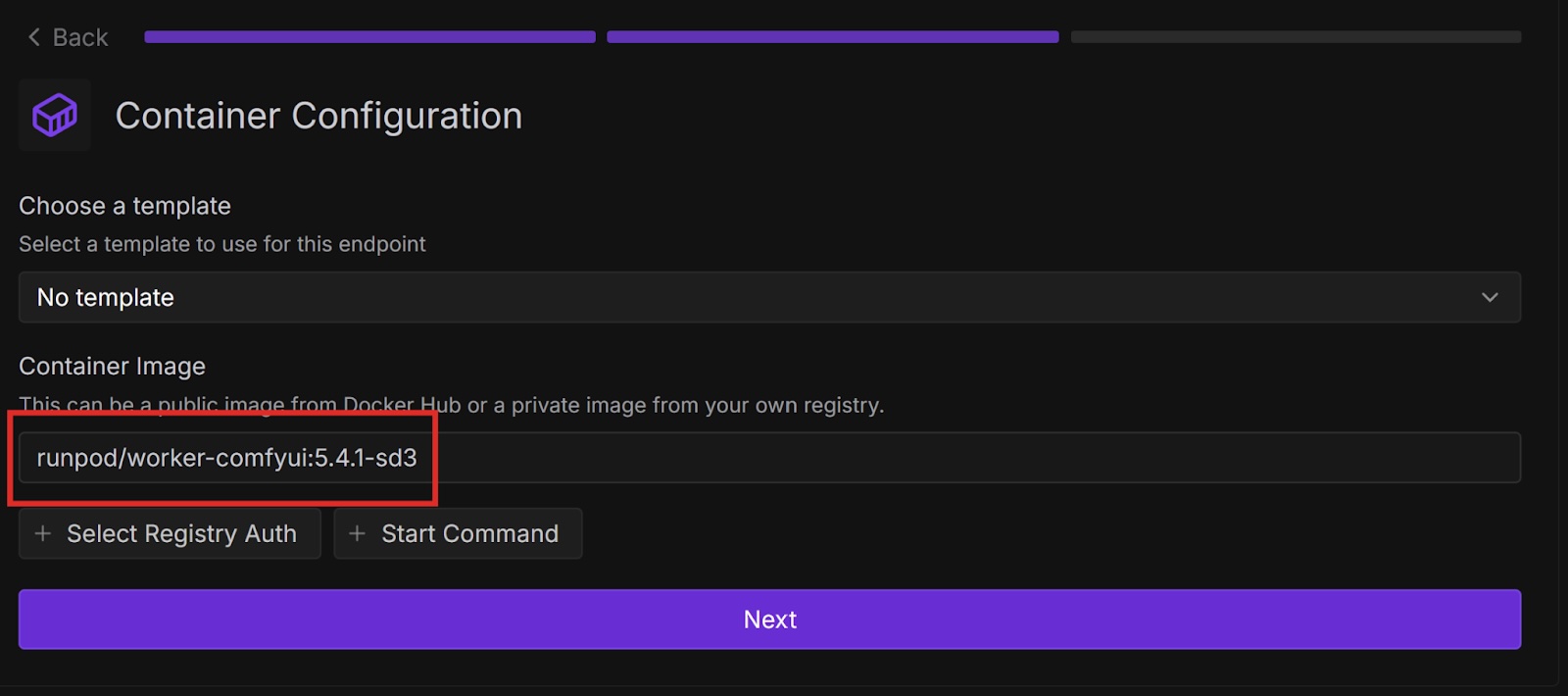

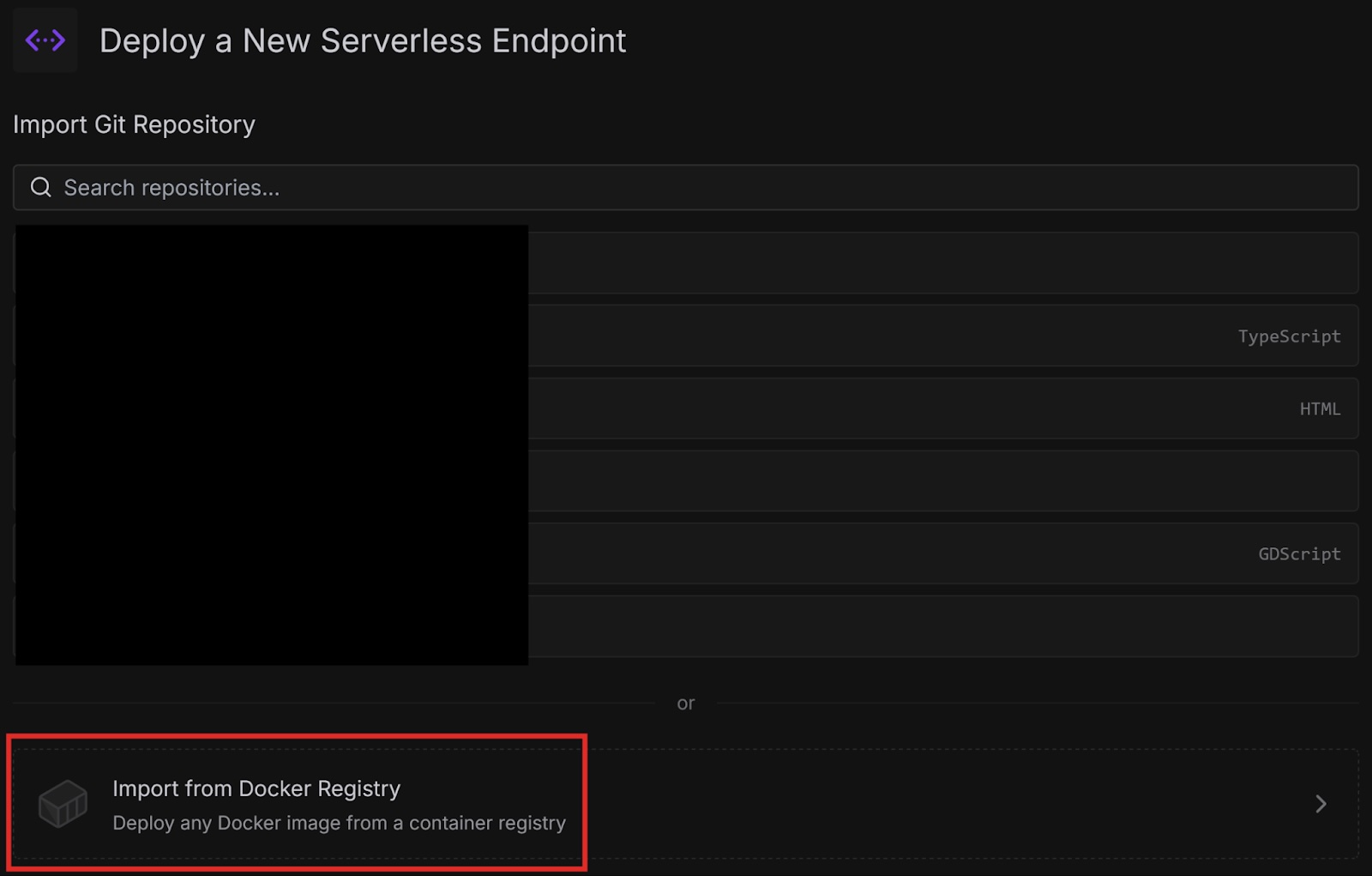

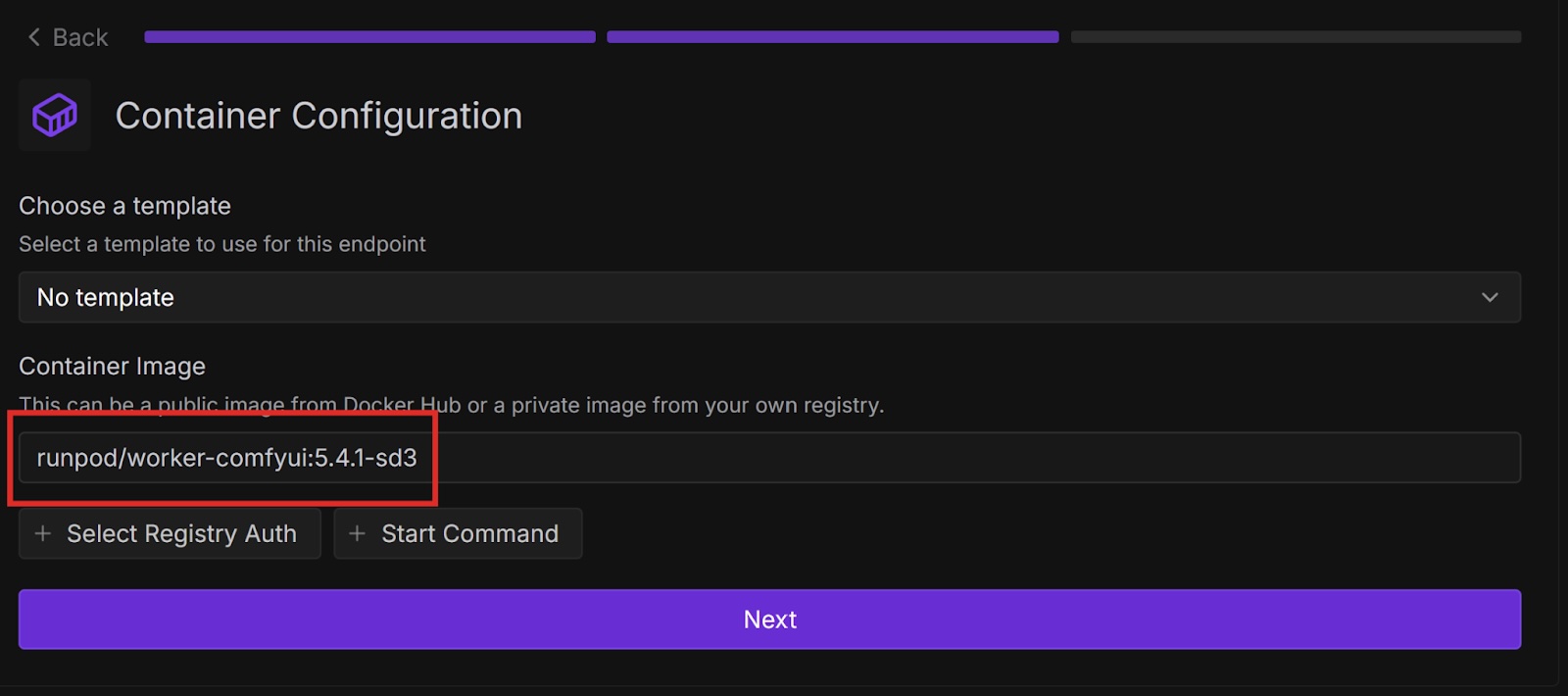

f.write(imgdata)The ComfyUI template on the Runpod Hub makes it easy to deploy as a serverless endpoint, but it is restricted to the FLUX.1-dev-fp8 model. If you want to use a different model, you can use the worker-comfyui repository on GitHub.

Runpod provides official container images on Docker Hub that deploy ComfyUI with various models. In this tutorial, we will use one of these images, but if you want to use a model that Runpod does not have an image for, you can use the latest base image and supply your own model.
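When you switch to an image that ships a different model, the workflow you send has to reference that model's checkpoint file. The following is a minimal sketch, assuming the workflow layout shown earlier in this post (a `CheckpointLoaderSimple` node with a `ckpt_name` input). The helper name `with_checkpoint` and the file name `my-model.safetensors` are hypothetical placeholders, and a different model may need further changes to the workflow beyond the checkpoint name.

```python
import copy


def with_checkpoint(workflow: dict, ckpt_name: str) -> dict:
    """Return a copy of `workflow` whose checkpoint loader nodes use `ckpt_name`."""
    updated = copy.deepcopy(workflow)  # leave the caller's workflow untouched
    found = False
    for node in updated.values():
        if node.get("class_type") == "CheckpointLoaderSimple":
            node["inputs"]["ckpt_name"] = ckpt_name
            found = True
    if not found:
        raise ValueError("workflow has no CheckpointLoaderSimple node")
    return updated


# Trimmed-down workflow containing only the loader node from this post.
flux_workflow = {
    "30": {
        "inputs": {"ckpt_name": "flux1-dev-fp8.safetensors"},
        "class_type": "CheckpointLoaderSimple",
    }
}

# "my-model.safetensors" is a hypothetical file name, not a real image's model.
other_workflow = with_checkpoint(flux_workflow, "my-model.safetensors")
```

Copying the workflow rather than editing it in place keeps the original FLUX workflow available, so the same script can target either endpoint.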

requests.post() call).

Congratulations, you've successfully deployed ComfyUI to a serverless endpoint, both from a Runpod Hub repository and from a Docker image! Runpod provides many ways to quickly start running common AI workloads without much setup.

Is there a particular model that you want to use with ComfyUI but that isn’t in any of Runpod’s Docker images? Try customizing your setup by creating your own Dockerfile, starting from one of the base images and baking the model you want into your image. Then deploy it to Runpod either from Docker or from your own GitHub repository.

Learn how to deploy ComfyUI as a serverless API endpoint on Runpod to run AI image generation workflows at scale. The tutorial covers deploying from Runpod Hub templates or Docker images, integrating with Python for synchronous API calls, and customizing models such as FLUX.1-dev or Stable Diffusion 3. Runpod’s pay-as-you-go Serverless platform provides a simple, cost-efficient way to build, test, and scale ComfyUI for generative AI applications.
