Runpod is excited to announce a significant update to our Command Line Interface (CLI) tool, focusing on Dockerless functionality. This revolutionary feature simplifies the AI development process, allowing you to deploy custom endpoints on our serverless platform without the complexities of Docker.

Runpod has always embraced a Bring-Your-Own-Container model for our GPU Cloud and Serverless offerings. While this flexibility is a strength, it presented hurdles in the development process for our serverless endpoints. To address these, we're introducing Runpod Projects and a new Dockerless Workflow in release 1.11.0 of our CLI tool runpodctl. This approach simplifies project development and deployment, bypassing the need for Docker.

To explore this workflow, ensure you have runpodctl version 1.11.0 or higher. The process involves configuring runpodctl with your API key, creating a new project, starting a development session, and deploying your serverless endpoint – all without the need for Docker.
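The four steps above can be sketched as a short Python helper that only assembles the command lines and does not run anything. The subcommand names (`config --apiKey`, `project create`, `project dev`, `project deploy`) reflect my understanding of runpodctl's project commands and are not spelled out in this post, so treat them as assumptions and confirm them with `runpodctl --help` for your installed version.

```python
import shlex


def workflow_commands(api_key: str) -> list[list[str]]:
    """Return the CLI invocations for the four workflow steps, in order.

    Assumption: subcommand and flag names follow runpodctl's project
    commands as I understand them; verify with `runpodctl --help`.
    """
    return [
        ["runpodctl", "config", "--apiKey", api_key],  # 1. store the API key
        ["runpodctl", "project", "create"],            # 2. create a new project
        ["runpodctl", "project", "dev"],               # 3. start a development session
        ["runpodctl", "project", "deploy"],            # 4. deploy the serverless endpoint
    ]


def describe(commands: list[list[str]]) -> list[str]:
    """Render each argument list as a shell-safe command string."""
    return [shlex.join(command) for command in commands]


if __name__ == "__main__":
    # "<your-api-key>" is a placeholder, not a real key.
    for line in describe(workflow_commands("<your-api-key>")):
        print(line)
```

Keeping the commands as argument lists rather than one string makes it straightforward to hand them to `subprocess.run` later without worrying about shell quoting.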

The Dockerless workflow separates the components of a serverless worker, allowing for independent modification of system dependencies, custom code, code dependencies, and models. This setup streamlines the process of making and deploying changes.

Newly created projects have a specific file structure, and during development, any changes in the local project folder are synced to the project environment on Runpod. The runpod.toml file in your project directory allows you to configure various settings and paths for your project.

We've listened to your feedback and focused our efforts on enhancing the user experience with our CLI tool. Here's what you can expect:

Getting started with Dockerless development on Runpod is straightforward. Here's a quick guide to setting up runpodctl and taking a project from creation to deployment.

Before diving into project creation, ensure runpodctl is installed and up to date (version 1.11.0 or higher). Begin by configuring runpodctl with your Runpod API key:

Create a new project, test-project, by executing the following command. This step will prompt you for project settings and automatically navigate you into the project folder:

Open the project folder in a text editor to view the generated files, which include:

- .runpodignore for specifying files to exclude during deployment.
- builder/requirements.txt for listing pip dependencies.
- runpod.toml for project config, containing deployment settings.
- src/handler.py for your handler source code.
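For orientation, a handler file such as src/handler.py might look roughly like the sketch below. It is a minimal illustration, not the file that runpodctl generates: the `name` field and the greeting logic are made up, and the sketch assumes the Runpod Python SDK's `runpod.serverless.start` entry point and a job dictionary that carries the request under an `input` key.

```python
def handler(job):
    """Hypothetical handler: greet the name passed in the job input."""
    # Assumption: the request payload arrives under the "input" key.
    job_input = job.get("input", {})
    name = job_input.get("name", "World")
    # The return value should be JSON-serializable.
    return {"greeting": f"Hello, {name}!"}


if __name__ == "__main__":
    try:
        # Imported here so the handler can be unit-tested without the SDK.
        import runpod
    except ImportError:
        # Fall back to a local check when the SDK is not installed.
        print("runpod SDK not installed; local check:",
              handler({"input": {"name": "Ada"}}))
    else:
        # Hand the function to the serverless worker loop.
        runpod.serverless.start({"handler": handler})
```

Keeping the handler a plain function with no import-time dependency on the SDK means it can be exercised with ordinary unit tests before a development session is started.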

Initiate a development session on Runpod with:

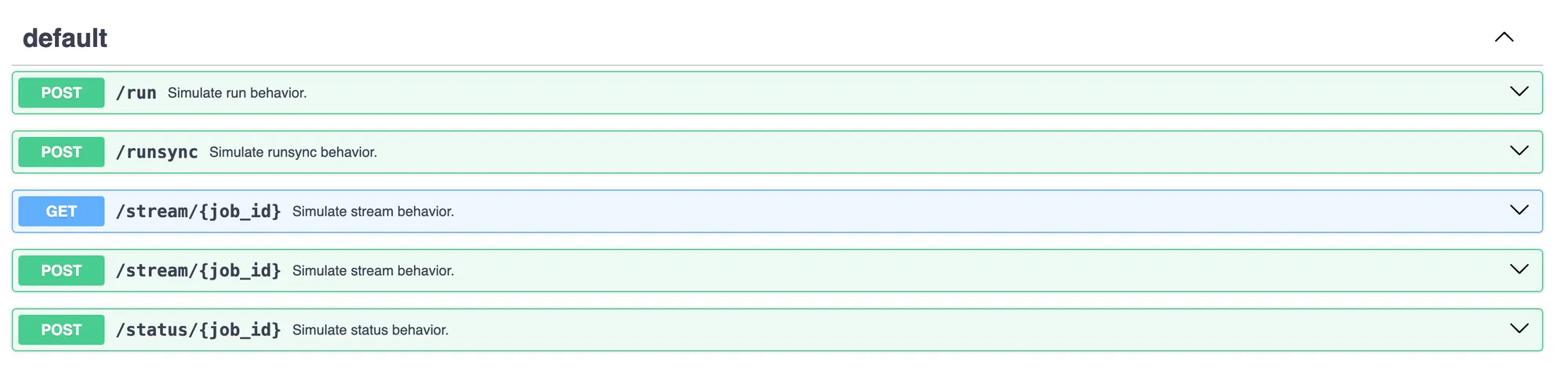

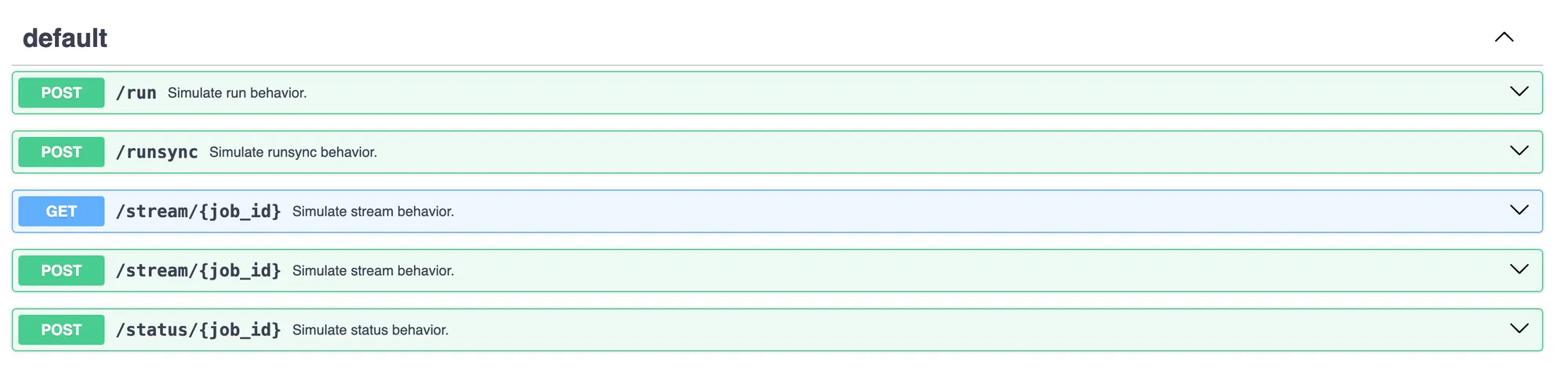

If this is your first session for this project, select or create a network volume. A pod will be created, and its logs will stream to your terminal. Once dependencies are set up, the logs will show a URL for the testing API page. Visit this URL to send requests to the handler and see your code changes take effect in real time.
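Besides the testing page itself, requests can also be prepared from a script. The sketch below only builds a POST request with the standard library and does not send it. The URL and route are placeholders (use whatever the development session prints), the `name` field is a hypothetical payload, and wrapping the payload under an `input` key is an assumption about the request shape the handler expects.

```python
import json
import urllib.request


def build_request(url: str, payload: dict) -> urllib.request.Request:
    """Build a JSON POST request that wraps the payload under "input"."""
    body = json.dumps({"input": payload}).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


if __name__ == "__main__":
    # Placeholder URL: replace with the one shown in your session logs.
    request = build_request("http://localhost:8000/placeholder", {"name": "Ada"})
    print(request.get_method(), request.full_url)
    print(request.data.decode("utf-8"))
    # To actually send it, pass the request to urllib.request.urlopen().
```

Separating request construction from sending keeps the payload easy to inspect and test without a running pod.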

Once satisfied with your code, deploy it as a serverless endpoint:

Congratulations! You've successfully deployed a Runpod serverless endpoint without the complexities of Docker.

The Dockerless workflow simplifies the development process by separating the components of a serverless worker, allowing for quick modifications. This method utilizes a base Docker image filled with common system dependencies, alongside your custom code and any necessary supporting packages or models.

- The base image is specified by base_image in runpod.toml.
- The runpodctl project build command generates a Dockerfile for the project.

Embark on your Dockerless development journey with Runpod and streamline your AI project workflows today.

We're keen to hear your feedback on our Dockerless CLI tool. Please head to our Discord server, create a post in our #feedback channel, and use the tag Dockerless. Your insights are invaluable to our continuous innovation, helping us refine and enhance our tools and services to better meet your needs in AI development.

With your collaboration, we're not just developing tools; we're shaping the future of AI. Explore the new possibilities with Runpod and be a part of this exciting journey.

Have you tried the new Dockerless CLI tool? What has been your experience? Share your thoughts and join the conversation.
