
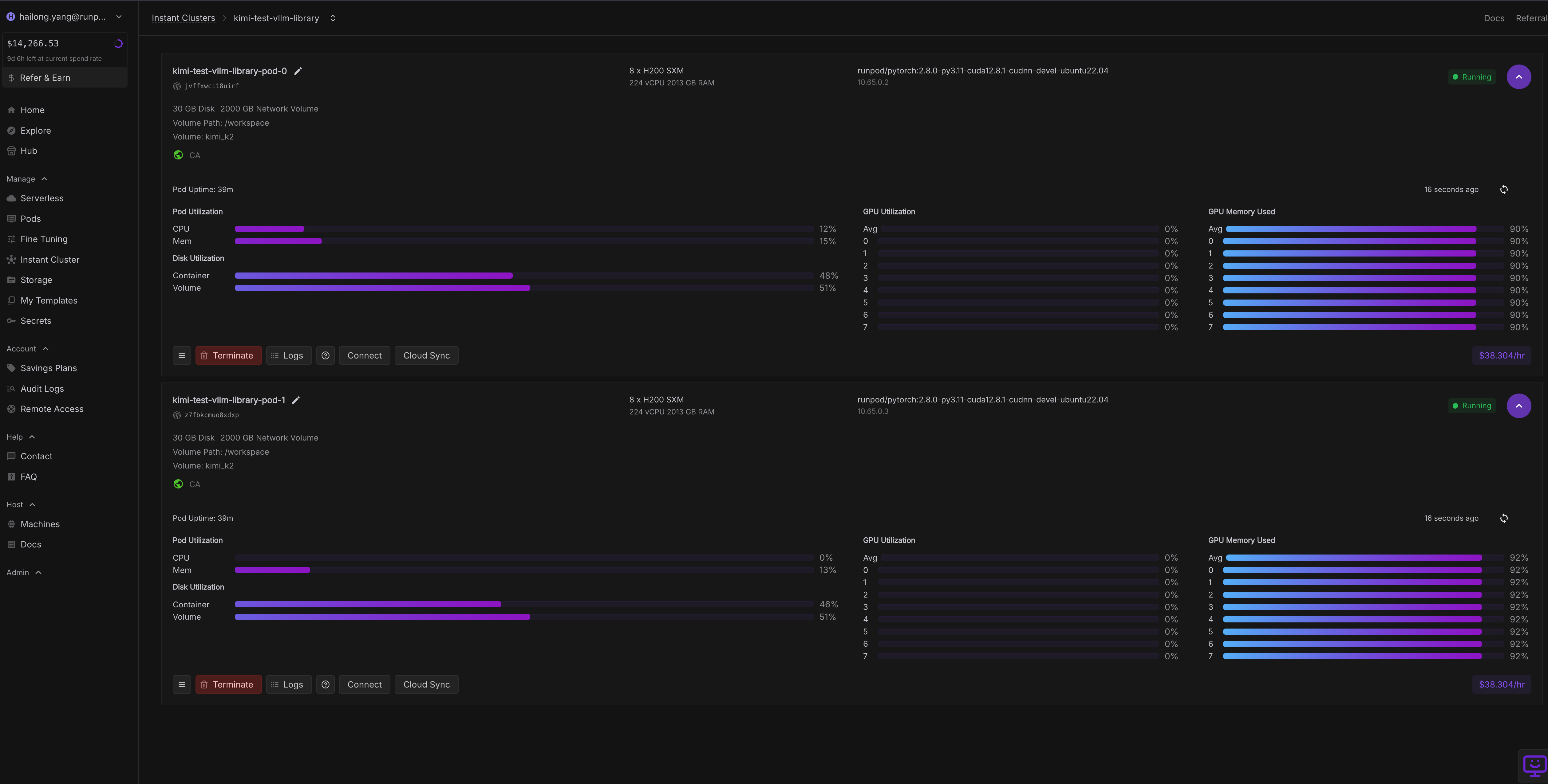

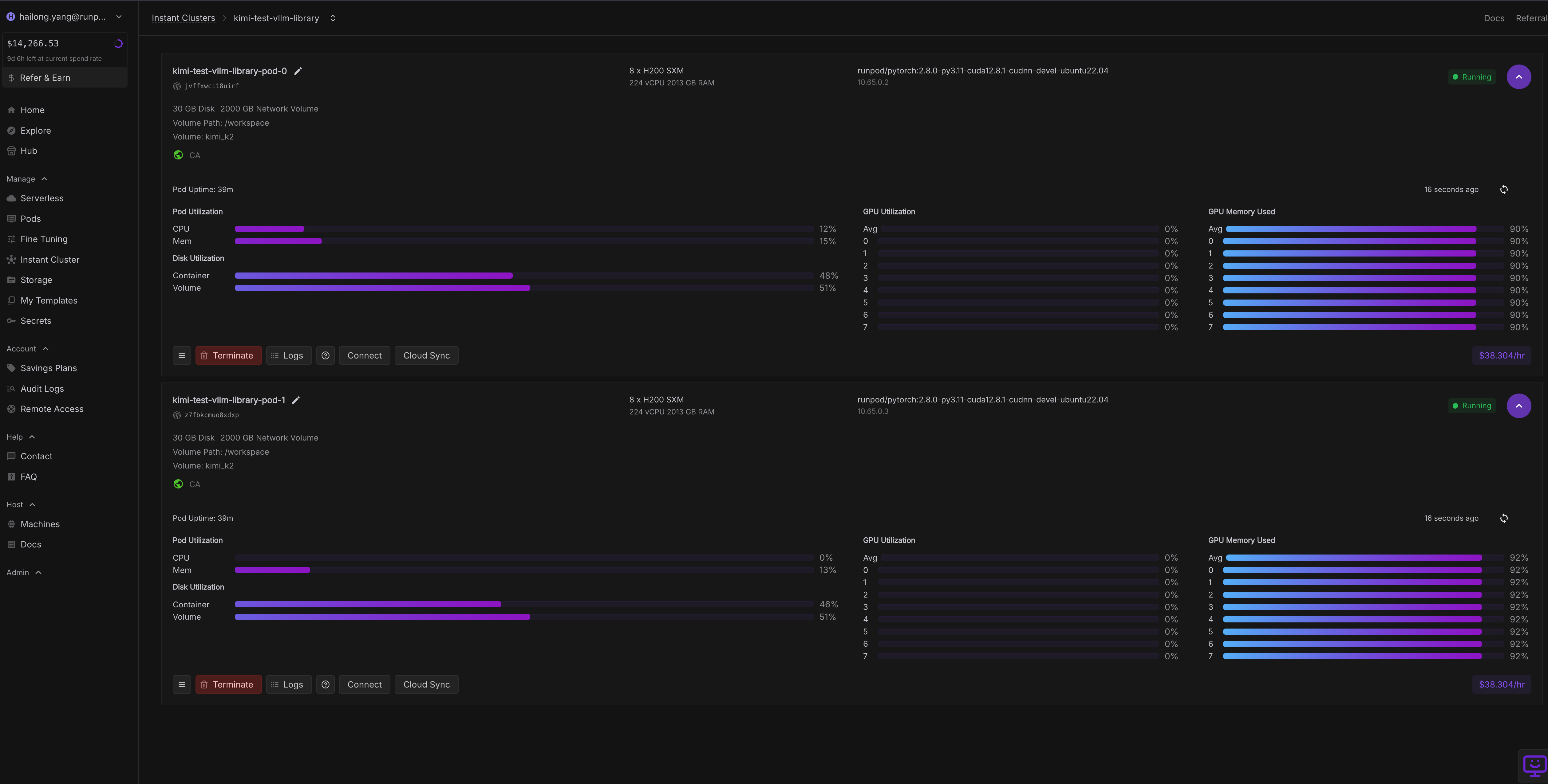

1. Create a network volume (2 TB). Use the CA-MTL-4 region (recommended for now).

2. Spin up a Pod using the official Runpod PyTorch template and mount the network volume you just created. Once the Pod is running, connect to JupyterLab.

3. Download the model:

    pip install huggingface_hub
    huggingface-cli login
    huggingface-cli download moonshotai/Kimi-K2-Instruct --local-dir /workspace/kimi-k2

4. Launch the Instant Cluster

1. Installation on Node 0 (with the shared volume)

    # Node 0: start the Ray head
    export NCCL_SOCKET_IFNAME=ens1
    export ENS1_IP=$(ip addr show ens1 | grep -oP 'inet \K[\d.]+')
    ray start --head \
        --node-ip-address="$ENS1_IP" \
        --port=6379 \
        --dashboard-port=8265

2. Node 1 instructions

    # Run this on Node 1 to start Ray and join the head node
    export NCCL_SOCKET_IFNAME=ens1
    export HEAD_IP=10.65.0.2  # ens1 address of Node 0 (the Ray head)
    export ENS1_IP=$(ip addr show ens1 | grep -oP 'inet \K[\d.]+')
    cd /workspace
    pip install torch torchvision torchaudio --index-url https://download.pytorch.org/whl/cu121
    pip install vllm ray transformers accelerate hf-transfer blobfile
    pip install -U vllm \
        --pre \
        --extra-index-url https://wheels.vllm.ai/nightly
    ray start --address="${HEAD_IP}:6379" --node-ip-address="$ENS1_IP"

3. You should see the following:

    Resources
    ---------------------------------------------------------------
    Total Usage:
     0.0/380.0 CPU
     0.0/16.0 GPU
     0B/3.30TiB memory
     0B/372.53GiB object_store_memory

4. Run vLLM on the node whose IP is used as the host (10.65.0.2 here, the same address as `HEAD_IP`).

export VLLM_HOST_IP=10.65.0.2

vllm serve $MODEL_PATH \

--port 8000 \

--served-model-name kimi-k2 \

--trust-remote-code \

--tensor-parallel-size 8 \

--pipeline-parallel-size 2 \

--enable-auto-tool-choice \

--tool-call-parser kimi_k2import requests

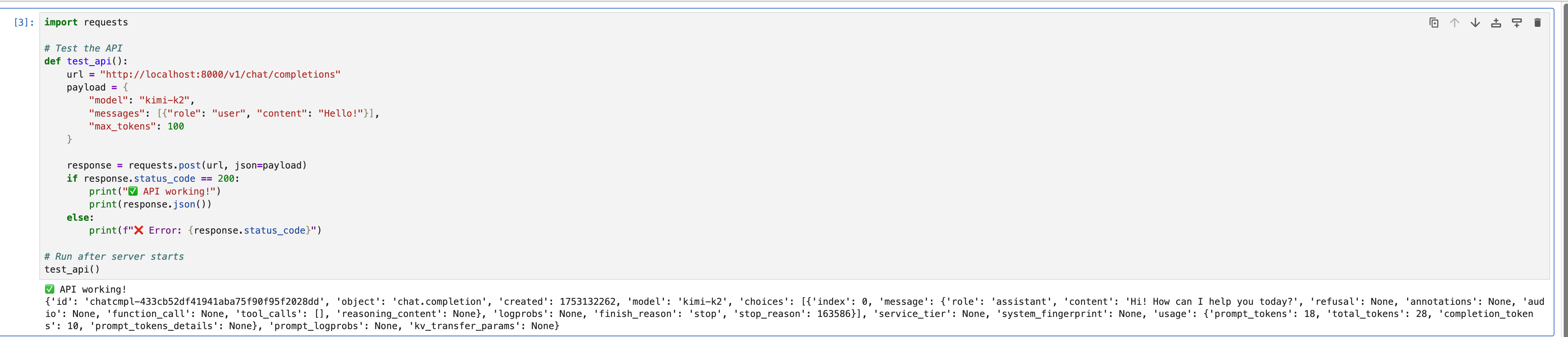

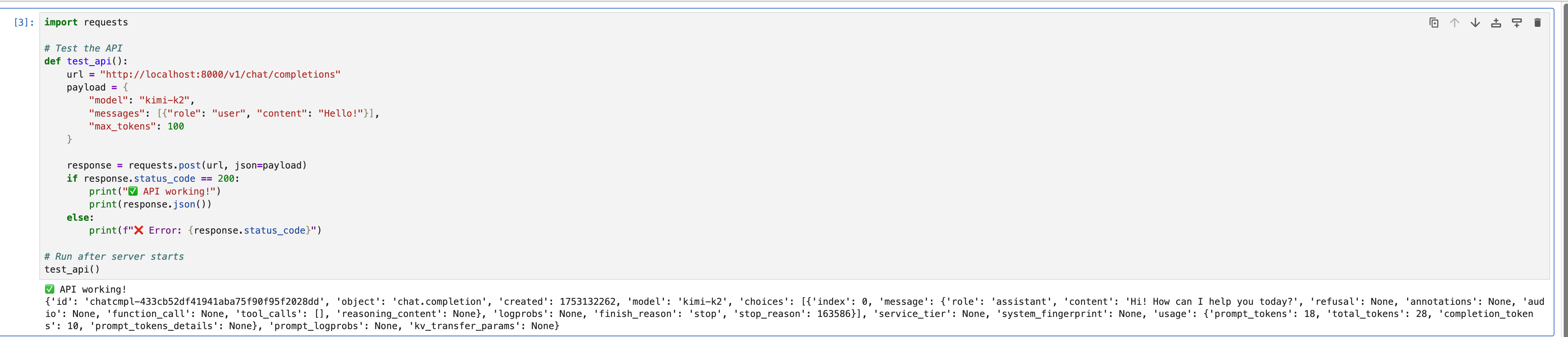

# Test the API

def test_api():

url = "http://localhost:8000/v1/chat/completions"

payload = {

"model": "kimi-k2",

"messages": [{"role": "user", "content": "Hello!"}],

"max_tokens": 100

}

response = requests.post(url, json=payload)

if response.status_code == 200:

print("✅ API working!")

print(response.json())

else:

print(f"❌ Error: {response.status_code}")

# Run after server starts

test_api()
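Loading a model of this size across two nodes can take a while, so calling `test_api()` too early simply fails with a connection error. The sketch below polls the server until the served model name shows up. It assumes the server exposes the standard OpenAI-compatible `/v1/models` route on port 8000; the helper names `fetch_model_ids` and `wait_for_model`, and the default retry counts, are illustrative and not part of the original guide.

```python
import json
import time
import urllib.error
import urllib.request


def fetch_model_ids(base_url: str, timeout: float = 5.0) -> list:
    """Return the model ids listed by an OpenAI-compatible /v1/models endpoint."""
    with urllib.request.urlopen(f"{base_url}/v1/models", timeout=timeout) as resp:
        body = json.load(resp)
    return [entry["id"] for entry in body.get("data", [])]


def wait_for_model(model_name, fetch=fetch_model_ids,
                   base_url="http://localhost:8000",
                   attempts=60, delay_s=10.0, sleep=time.sleep):
    """Poll until model_name is listed; return True on success, False on timeout."""
    for attempt in range(attempts):
        try:
            if model_name in fetch(base_url):
                return True
        except (urllib.error.URLError, OSError, ValueError, KeyError):
            # Server is not accepting connections yet, or the reply is not usable JSON.
            pass
        if attempt < attempts - 1:
            sleep(delay_s)
    return False
```

Calling `wait_for_model("kimi-k2")` before `test_api()` would block until the server lists the model or the attempts run out. The `fetch` and `sleep` parameters are injectable only so the loop can be exercised without a live server.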

    from openai import OpenAI

    client = OpenAI(
        api_key="EMPTY",  # vLLM doesn't require a real API key
        base_url="http://localhost:8000/v1"  # Your vLLM server URL
    )

    def simple_chat(client: OpenAI, model_name: str, messages):
        response = client.chat.completions.create(
            model=model_name,
            messages=messages,
            stream=False,
            temperature=0.6,
            max_tokens=524
        )
        print(response.choices[0].message.content)

    messages = [
        {"role": "system", "content": "You are Kimi, an AI assistant created by Moonshot AI."},
        {"role": "user", "content": [{"type": "text", "text": "What is Runpod."}]},
    ]

    simple_chat(client, "kimi-k2", messages)

The model responds:

RunPod is a cloud-computing platform designed specifically for **AI and machine-learning workloads**. It provides on-demand access to **GPU instances** (like NVIDIA A100s, H100s, RTX 4090s, etc.) at competitive prices, making it popular for training, fine-tuning, and deploying AI models without the hassle of managing physical hardware.

### **Key Features of RunPod:**

1. **GPU Rental** – Rent high-end GPUs by the hour or second, with options for **secure cloud** or **community GPUs** (cheaper, but shared).

2. **Serverless GPUs** – Deploy AI models as **serverless endpoints**, scaling automatically when needed.

3. **Prebuilt Templates** – One-click deployments for popular AI frameworks like **PyTorch**, **TensorFlow**, **Stable Diffusion**, and **LLMs**.

4. **Persistent Storage** – Attach scalable storage volumes for datasets and models.

5. **Global Availability** – Data centers in **US, EU, and Asia** for low-latency access.

6. **Pay-as-you-go** – No long-term contracts; pay only for what you use.

### **Who Uses RunPod?**

- **AI Researchers & Startups** – Train/fine-tune models without high upfront costs.

- **Developers** – Deploy **LLMs**, **image generation APIs**, or **inference endpoints**.

- **Students & Hobbyists** – Affordable access to GPUs for learning and experimentation.

- **Enterprises** – Spin up temporary GPU clusters for heavy workloads.

### **RunPod vs. Competitors**

- **vs. AWS/GCP/Azure**: Cheaper GPU pricing, simpler setup.

- **vs. Lambda Labs**: More flexible GPU choices and serverless options.

- **vs. Colab Pro**: More powerful GPUs and persistent environments.

### **Pricing Example (as of 2024)**

- **RTX 4090**: ~$0.44/hr

- **A100 80GB**: ~$1.89/hr

- **H100 80GB**: ~$2.79/hr

RunPod is **ideal** for anyone needing **GPU compute on demand** without long-term commitments.

Known Issues

As of July 21st, the released vLLM library is not up to date, so you need to install it from the nightly wheels.

https://github.com/MoonshotAI/Kimi-K2/issues/19
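Because the nightly requirement applies to every node, it can help to confirm which package versions actually ended up installed on each machine. The following is a minimal sketch using only the standard library; the helper names `installed_version` and `report`, and the default package list, are illustrative assumptions rather than anything the guide prescribes.

```python
from importlib import metadata
from typing import Optional


def installed_version(package: str) -> Optional[str]:
    """Return the installed version string of a package, or None if it is absent."""
    try:
        return metadata.version(package)
    except metadata.PackageNotFoundError:
        return None


def report(packages=("vllm", "ray", "torch", "transformers")) -> dict:
    """Map each package name to its installed version (None when not installed)."""
    return {name: installed_version(name) for name in packages}
```

Running `print(report())` on Node 0 and Node 1 and comparing the two dictionaries is one way to spot a node that still has an older vLLM build.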

A uv environment stored on a Network Volume is slow to initialize Ray. We recommend running any Python environment on the machine itself instead of on the Network Volume.

Run MoonshotAI’s Kimi-K2-Instruct on RunPod Instant Clusters using H200 SXM GPUs and a 2TB shared network volume for seamless multi-node training. This guide shows how to deploy with PyTorch templates, optimize Docker environments, and accelerate LLM inference with scalable, low-latency infrastructure.
