We've cooked up a bunch of improvements designed to reduce friction and make the.

Lorem ipsum dolor sit amet, consectetur adipiscing elit, sed do eiusmod tempor incididunt ut labore et dolore magna aliqua. Ut enim ad minim veniam, quis nostrud exercitation ullamco laboris nisi ut aliquip ex ea commodo consequat. Duis aute irure dolor in reprehenderit in voluptate velit esse cillum dolore eu fugiat nulla pariatur.

Block quote

Ordered list

Unordered list

Bold text

Emphasis

Superscript

Subscript

Mistral AI released two notable open models in early December: Mistral Large 3, announced on December 2, and Devstral 2, released on December 9. Mistral Large 3 is a frontier-class mixture-of-experts model with 41 billion active parameters out of 675 billion total, designed for strong general reasoning and long-context workloads. Devstral 2 is a developer-focused open model optimized for coding, tool use, and agent workflows, offering a more targeted option for teams building production systems. Both models are released under the Apache 2.0 license, allowing developers to run, fine-tune, and deploy them directly on their own GPU infrastructure.

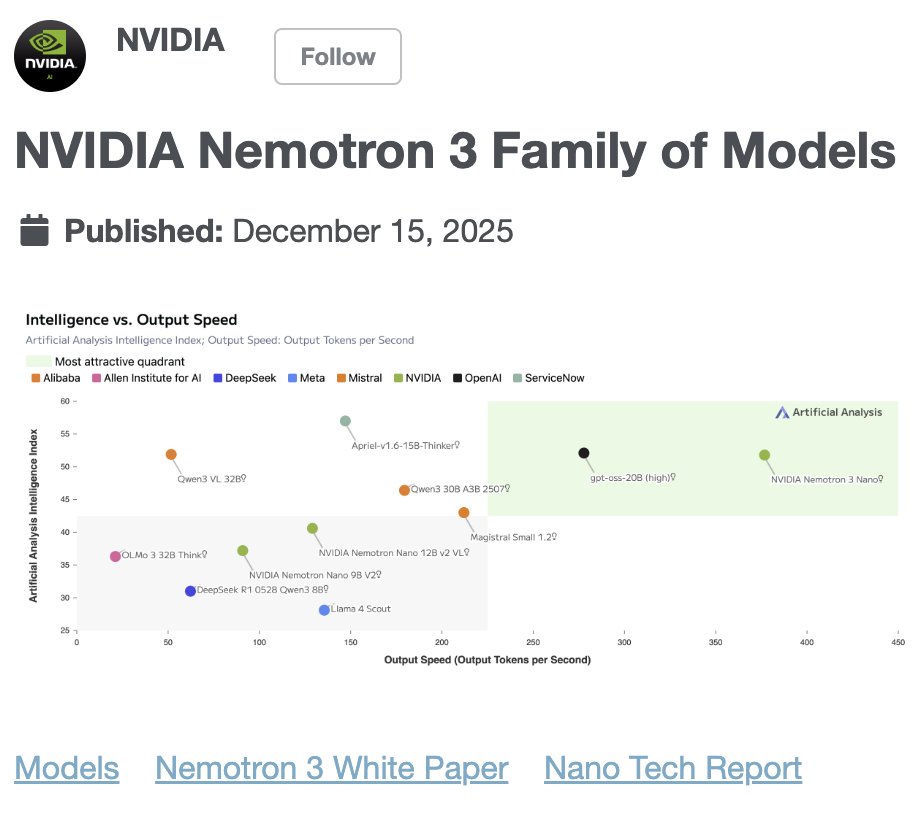

Nvidia announced the Nemotron 3 family of open source models, marking a notable shift toward releasing open weights alongside its hardware and software stack. The Nemotron models target a range of use cases, from lightweight agent workflows to more capable general-purpose reasoning tasks, and are designed to run efficiently on Nvidia GPUs.

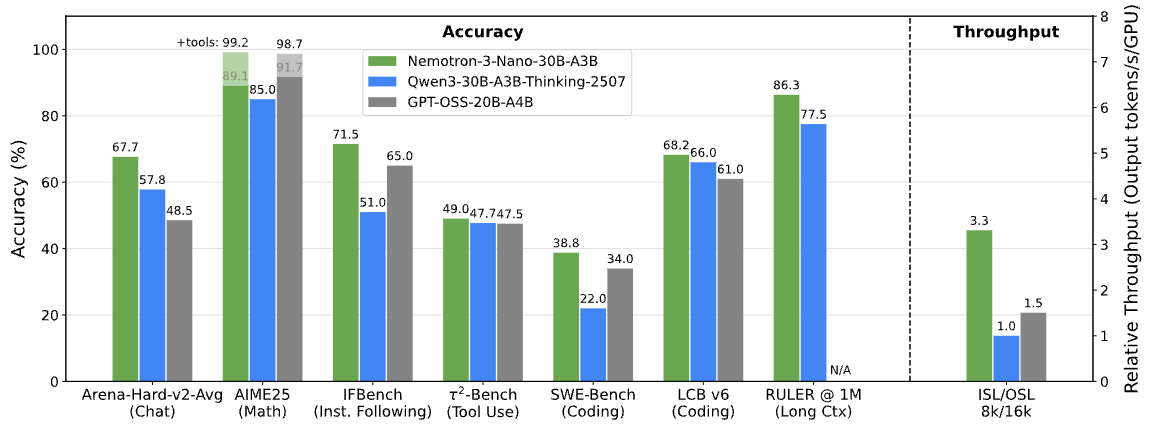

Nemotron 3 Nano 30B beat GPT-OSS and Qwen3-30B and runs 2.2–3.3× faster:

In parallel, Nvidia introduced new tooling aimed at making customization and fine-tuning easier across RTX, DGX, and data center environments. These tools focus on reducing friction for teams that want to adapt open models for specific domains or workloads.

Nvidia also announced its acquisition of SchedMD, the primary company behind the open source Slurm scheduler. Slurm remains one of the most widely used workload managers for HPC and AI clusters, and continued investment strengthens a critical part of the open infrastructure stack used for large-scale training and distributed inference.

Together, these moves signal Nvidia’s growing role not just as a GPU provider, but as a long-term steward of open source infrastructure that underpins modern AI systems.

The appeal of open models is simple. You can run them, test them, and decide if they are worth keeping. That only works if your infrastructure lets you move quickly and change your mind.

This is the kind of work Pods and Instant Clusters are designed for. They make it easy to benchmark new models, compare setups, and scale experiments without committing to a fixed stack or long-term assumptions.

Early December highlighted a clear shift toward open models and open infrastructure. Frontier-class open releases, combined with deeper investment in scheduling and GPU tooling, are making it easier for teams to build powerful AI systems without relying exclusively on closed platforms.

For developers who care about performance, cost control, and transparency, this momentum creates real opportunity. Open models and open infrastructure are no longer niche. They are becoming a core part of how modern AI systems are built and deployed.

Sources:

Mistral AI – Introducing Mistral 3 | https://mistral.ai/news/mistral-3

NVIDIA Newsroom – NVIDIA debuts Nemotron 3 family of open models | https://nvidianews.nvidia.com/news/nvidia-debuts-nemotron-3-family-of-open-models

NVIDIA Research – NVIDIA Nemotron 3 family of models | https://research.nvidia.com/labs/nemotron/Nemotron-3/

NVIDIA Blog – NVIDIA acquires open-source workload management provider SchedMD | https://blogs.nvidia.com/blog/nvidia-acquires-schedmd/

Reuters – Nvidia buys AI software provider SchedMD to expand open-source AI push | https://www.reuters.com/business/nvidia-buys-ai-software-provider-schedmd-expand-open-source-ai-push-2025-12-15/

In early December 2025, Mistral AI released Mistral Large 3 and Devstral 2, two open models under the Apache 2.0 license, with Mistral Large 3 targeting frontier-scale reasoning and long-context workloads and Devstral 2 focusing on developer use cases like coding and agent workflows. At the same time, Nvidia expanded its open source footprint with the release of the Nemotron 3 model family and the acquisition of SchedMD, reinforcing a broader shift toward open models and open infrastructure that teams can run, benchmark, and scale on their own GPU platforms.

Mistral AI released two notable open models in early December: Mistral Large 3, announced on December 2, and Devstral 2, released on December 9. Mistral Large 3 is a frontier-class mixture-of-experts model with 41 billion active parameters out of 675 billion total, designed for strong general reasoning and long-context workloads. Devstral 2 is a developer-focused open model optimized for coding, tool use, and agent workflows, offering a more targeted option for teams building production systems. Both models are released under the Apache 2.0 license, allowing developers to run, fine-tune, and deploy them directly on their own GPU infrastructure.

Nvidia announced the Nemotron 3 family of open source models, marking a notable shift toward releasing open weights alongside its hardware and software stack. The Nemotron models target a range of use cases, from lightweight agent workflows to more capable general-purpose reasoning tasks, and are designed to run efficiently on Nvidia GPUs.

Nemotron 3 Nano 30B beat GPT-OSS and Qwen3-30B and runs 2.2–3.3× faster:

In parallel, Nvidia introduced new tooling aimed at making customization and fine-tuning easier across RTX, DGX, and data center environments. These tools focus on reducing friction for teams that want to adapt open models for specific domains or workloads.

Nvidia also announced its acquisition of SchedMD, the primary company behind the open source Slurm scheduler. Slurm remains one of the most widely used workload managers for HPC and AI clusters, and continued investment strengthens a critical part of the open infrastructure stack used for large-scale training and distributed inference.

Together, these moves signal Nvidia’s growing role not just as a GPU provider, but as a long-term steward of open source infrastructure that underpins modern AI systems.

The appeal of open models is simple. You can run them, test them, and decide if they are worth keeping. That only works if your infrastructure lets you move quickly and change your mind.

This is the kind of work Pods and Instant Clusters are designed for. They make it easy to benchmark new models, compare setups, and scale experiments without committing to a fixed stack or long-term assumptions.

Early December highlighted a clear shift toward open models and open infrastructure. Frontier-class open releases, combined with deeper investment in scheduling and GPU tooling, are making it easier for teams to build powerful AI systems without relying exclusively on closed platforms.

For developers who care about performance, cost control, and transparency, this momentum creates real opportunity. Open models and open infrastructure are no longer niche. They are becoming a core part of how modern AI systems are built and deployed.

Sources:

Mistral AI – Introducing Mistral 3 | https://mistral.ai/news/mistral-3

NVIDIA Newsroom – NVIDIA debuts Nemotron 3 family of open models | https://nvidianews.nvidia.com/news/nvidia-debuts-nemotron-3-family-of-open-models

NVIDIA Research – NVIDIA Nemotron 3 family of models | https://research.nvidia.com/labs/nemotron/Nemotron-3/

NVIDIA Blog – NVIDIA acquires open-source workload management provider SchedMD | https://blogs.nvidia.com/blog/nvidia-acquires-schedmd/

Reuters – Nvidia buys AI software provider SchedMD to expand open-source AI push | https://www.reuters.com/business/nvidia-buys-ai-software-provider-schedmd-expand-open-source-ai-push-2025-12-15/

The most cost-effective platform for building, training, and scaling machine learning models—ready when you are.