We've cooked up a bunch of improvements designed to reduce friction and make the.

Lorem ipsum dolor sit amet, consectetur adipiscing elit, sed do eiusmod tempor incididunt ut labore et dolore magna aliqua. Ut enim ad minim veniam, quis nostrud exercitation ullamco laboris nisi ut aliquip ex ea commodo consequat. Duis aute irure dolor in reprehenderit in voluptate velit esse cillum dolore eu fugiat nulla pariatur.

Block quote

Ordered list

Unordered list

Bold text

Emphasis

Superscript

Subscript

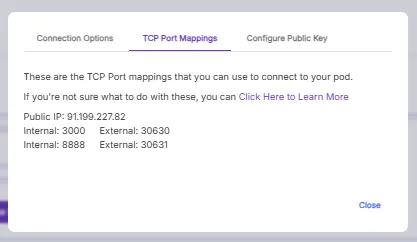

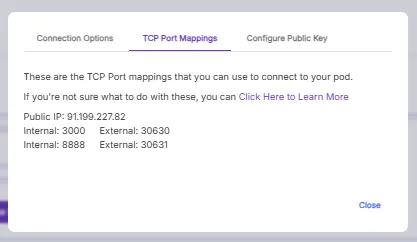

RunPod uses a proxy system so that you can easily access your pods without making any configuration changes. The proxy runs on Cloudflare, which makes it easy to implement and to use, and that choice comes with both benefits and drawbacks. Here's a short explainer on how the RunPod proxy works, when it's most appropriate to use, and when you may want to bypass it.

The proxy gives you a consistent way to reach a pod on a given port, regardless of any networking changes that might occur. The URL is made up of the pod ID, a hyphen, the port, and then proxy.runpod.net, as in this example:

https://s7breobom8crgs-3000.proxy.runpod.net/
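Because the format is fixed, the URL can also be generated in code. Here is a minimal Python sketch that reproduces the pattern from the example URL, assuming you already know the pod ID and port. The function name `proxy_url` is hypothetical and not part of any RunPod SDK.

```python
def proxy_url(pod_id: str, port: int) -> str:
    """Hypothetical helper: build https://<pod-id>-<port>.proxy.runpod.net/."""
    if not pod_id:
        raise ValueError("pod_id must be non-empty")
    # Reject values that cannot be valid TCP port numbers.
    if not 1 <= port <= 65535:
        raise ValueError(f"port out of range: {port}")
    return f"https://{pod_id}-{port}.proxy.runpod.net/"


if __name__ == "__main__":
    # Same pod ID and port as the ComfyUI example above.
    print(proxy_url("s7breobom8crgs", 3000))
```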

For this ComfyUI pod, which accepts HTTP requests on port 3000 by default, you can plug in that URL and it will keep working no matter what changes underneath. Secure Cloud pod IP addresses generally don't change often, but it can happen, usually because of network maintenance. Community Cloud pod IP addresses can change at the host's discretion, so they are far less stable.

There are two main drawbacks to the proxy that should be considered:

Most official RunPod templates are set up to use the proxy. If you'd rather not use it, here's how to opt out:

If you need to reference the IP address and port in code, store the URL in a variable or setting rather than hard-coding it. That way, if the address changes, you only have to update it in one place.
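A minimal Python sketch of that approach, assuming a hypothetical environment variable named `SERVICE_BASE_URL` that you set yourself to a direct `http://<ip>:<port>` address when bypassing the proxy. When the variable is unset, the code falls back to the proxy URL format described earlier. Neither the variable name nor the function `service_base_url` is a RunPod convention; both are illustrative only.

```python
import os


def service_base_url(pod_id: str, port: int) -> str:
    """Return the base URL for a service on a pod.

    Hypothetical convention: if SERVICE_BASE_URL is set (e.g. to a direct
    http://<ip>:<port> address), use it; otherwise fall back to the RunPod
    proxy URL built from the pod ID and port.
    """
    override = os.environ.get("SERVICE_BASE_URL")
    if override:
        # Normalize to exactly one trailing slash so paths can be appended.
        return override.rstrip("/") + "/"
    return f"https://{pod_id}-{port}.proxy.runpod.net/"


if __name__ == "__main__":
    print(service_base_url("s7breobom8crgs", 3000))
```

Endpoint URLs can then be built with `urllib.parse.urljoin(base, "some/path")`, so switching between the proxy and a direct connection means changing one setting instead of editing every request.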

The upshot: the proxy is genuinely useful for standardizing pod templates and for giving you easy, stable access to your pods by smoothing out the wrinkles that are natural in networking. But there are also plenty of reasons you may not want to use it. Ultimately, the choice is yours, and we want to give you the tools to complete your workflows the way you want.

Questions? Feel free to run them by our Discord!

