Runpod is excited to announce its latest integration with SkyPilot, an open-source framework for running LLMs, AI, and batch jobs on any cloud. This collaboration is designed to significantly enhance the efficiency and cost-effectiveness of your development process, particularly for training, fine-tuning, and deploying models.

SkyPilot is a powerful framework for running AI models and batch jobs on any cloud or Kubernetes environment. Once you connect your cloud credentials via the CLI tool, you can aggregate the most cost-effective compute options across different clouds.

SkyPilot originates from UC Berkeley’s Sky Computing, a paradigm that enables workloads to run on one or more cloud providers transparently. With SkyPilot, AI teams can use multiple clouds and other infrastructure as a single compute pool. SkyPilot automatically sends AI workloads to the cheapest available cloud (hint: this is where Runpod is often the top choice!) and orchestrates the jobs and services there.

With this integration, Runpod is joining the AI “Sky”.

This new integration leverages the Runpod CLI infrastructure that was recently launched, streamlining the process of spinning up on-demand pods and deploying serverless endpoints. With this tool, accessing and managing Runpod’s GPU cloud resources becomes incredibly easy and efficient.

To begin using Runpod with SkyPilot, follow these steps:

1. Run `pip install "runpod>=1.5.1"` to install the Runpod Python package (version 1.5.1 or later).
2. Run `pip install "skypilot-nightly[runpod]"` to install SkyPilot with Runpod support.

After setting up your environment, you can spin up a cluster in minutes:

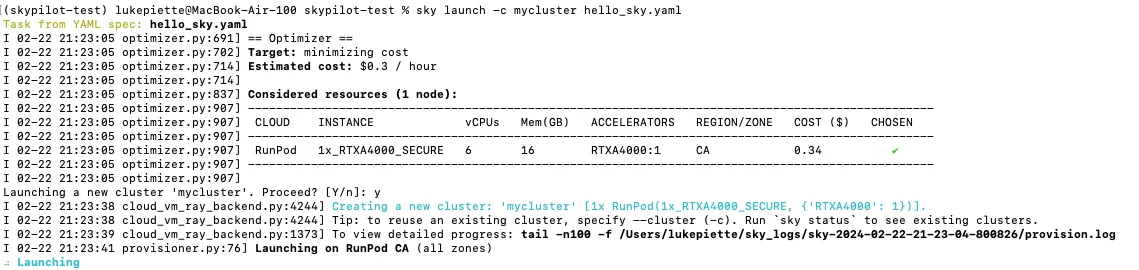

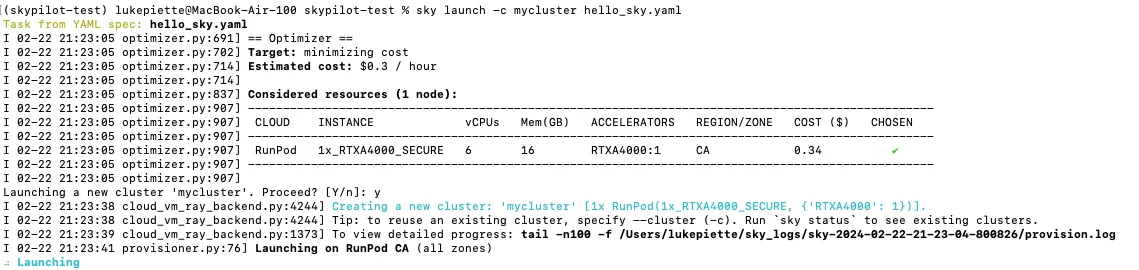

1. Run `mkdir hello-sky` to create a new directory for your project.
2. Run `cd hello-sky` to move into it.
3. Run `cat > hello_sky.yaml` and input the following configuration details:
4. Launch Your Project: With your configuration file created, launch your project on the cluster by running `sky launch -c mycluster hello_sky.yaml`.
5. Confirm Your GPU Type: You should see the available GPU options on Secure Cloud appear in your command line. Once you confirm your GPU type, your cluster will start spinning up.
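The configuration for the task file is not reproduced in the text of this post. As a rough sketch only, the commands below create the project directory and write a minimal task file with a shell heredoc. It assumes SkyPilot's standard task YAML layout (`resources`, `setup`, `run`). The `cloud: runpod` setting, the `echo` commands, and the commented-out accelerator name are illustrative assumptions, not the original configuration.

```shell
#!/bin/sh
set -eu

# Create the project directory and move into it.
mkdir -p hello-sky
cd hello-sky

# Write the task file. The contents are an assumed, minimal example,
# not the configuration from the original post.
cat > hello_sky.yaml <<'EOF'
resources:
  # Assumption: pin the task to Runpod; omit to let SkyPilot choose a cloud.
  cloud: runpod
  # Assumption: example GPU request; uncomment and adjust to an available type.
  # accelerators: RTX4090:1

# Commands that prepare the environment before the task runs.
setup: |
  echo "Running setup."

# Commands that make up the task itself.
run: |
  echo "Hello, SkyPilot!"
EOF

# Launching is left as a comment because it provisions paid resources:
#   sky launch -c mycluster hello_sky.yaml
```

The quoted heredoc delimiter (`'EOF'`) keeps the shell from expanding anything inside the YAML, so the file is written exactly as shown. When you are finished with the cluster, `sky down mycluster` tears it down.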

The team at Runpod is honored to have worked alongside the brilliant folks at SkyPilot. Special thanks to Zongheng, Zhanghao, Wei-Lin, and the rest of the SkyPilot team for making this possible.

Runpod is committed to continuing its work with SkyPilot to simplify cloud resource utilization for developers everywhere.

PS: If you have any feedback about the SkyPilot integration and/or CLI tool, message @LukePiette on Twitter or @lukepiette on Discord. We’d love to hear what you think!
