
DeepSeek's release of V3.1 in August 2025 represents a significant architectural and strategic evolution from the V3-0324 model released in March. While V3-0324 was primarily an incremental improvement over the original V3, V3.1 introduces fundamental changes that reshape how we think about hybrid reasoning models and hardware compatibility in AI systems.

The most significant change in V3.1 is its hybrid design. While DeepSeek-V3-0324 improved notably on its predecessor, DeepSeek-V3, in several key respects, it kept the same underlying model structure and single response mode as the original V3. V3.1 breaks this pattern.

DeepSeek-V3.1 is a hybrid model that supports both thinking mode and non-thinking mode within a single model. This departs from previous approaches, where reasoning capabilities required a separate model such as DeepSeek R1. The hybrid design lets a deployment choose, request by request, between fast, direct responses and deeper chain-of-thought reasoning, for example based on query complexity. Control is template-based: reasoning behavior is governed by a chat template parameter passed to the tokenizer rather than by a separate model architecture.

The technical implementation relies on chat template modifications rather than architectural branching. Because one model serves both modes and only the chat template changes, there is no computational overhead from maintaining separate model paths. Thinking mode uses the prefix pattern `<|Assistant|><think>`, while non-thinking mode uses `<|Assistant|></think>`, creating a clean separation without duplicate inference pipelines.

```python
import transformers

tokenizer = transformers.AutoTokenizer.from_pretrained("deepseek-ai/DeepSeek-V3.1")

# A short conversation; the earlier assistant turn still contains its <think> block
messages = [
    {"role": "system", "content": "You are a helpful assistant"},
    {"role": "user", "content": "Who are you?"},
    {"role": "assistant", "content": "<think>Hmm</think>I am DeepSeek"},
    {"role": "user", "content": "1+1=?"},
]

# Thinking mode enabled: the prompt ends with <|Assistant|><think>
tokenizer.apply_chat_template(messages, tokenize=False, thinking=True, add_generation_prompt=True)
# Output: '<|begin▁of▁sentence|>You are a helpful assistant<|User|>Who are you?<|Assistant|></think>I am DeepSeek<|end▁of▁sentence|><|User|>1+1=?<|Assistant|><think>'

# Non-thinking mode: the prompt ends with <|Assistant|></think>
tokenizer.apply_chat_template(messages, tokenize=False, thinking=False, add_generation_prompt=True)
# Output: '<|begin▁of▁sentence|>You are a helpful assistant<|User|>Who are you?<|Assistant|></think>I am DeepSeek<|end▁of▁sentence|><|User|>1+1=?<|Assistant|></think>'
```

The model was trained during post-training to recognize these specific token patterns, and it learned to respond accordingly to `<think>` tokens and `</think>` tokens.

V3.1's hybrid approach enables flexible deployment scenarios: developers can use non-thinking mode for fast interactions and thinking mode when reasoning transparency is required. The same model serves both modes without architectural changes, making it ideal for applications requiring both speed and occasional deep reasoning.
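As a rough illustration of that flexibility, here is a minimal sketch of per-request mode selection. The prefix strings come from the template described above; the function names and the keyword heuristic are made up for this post and are only an assumption about how an application might route queries, not something DeepSeek ships.

```python
ASSISTANT_TAG = "<|Assistant|>"


def generation_prefix(thinking: bool) -> str:
    """Return the assistant prefix that selects the mode for the next reply."""
    return ASSISTANT_TAG + ("<think>" if thinking else "</think>")


def wants_thinking(query: str, min_words: int = 40) -> bool:
    """Hypothetical heuristic: long or proof/debug-style queries get thinking mode."""
    keywords = ("prove", "debug", "step by step", "derive")
    lowered = query.lower()
    return len(lowered.split()) >= min_words or any(k in lowered for k in keywords)


for query in ["1+1=?", "Prove that the square root of 2 is irrational."]:
    print(query, "->", generation_prefix(wants_thinking(query)))
```

In a real deployment you would pass the resulting boolean as the `thinking` argument to `apply_chat_template` rather than building the prefix by hand; the heuristic is the part you would tune for your own traffic.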

In comparison, R1's dedicated approach provides consistent reasoning patterns but lacks flexibility. The model cannot provide quick responses for simple queries, always investing computational resources in detailed reasoning chains. There may be situations where you want this, but constantly loading and unloading models this large isn’t particularly feasible, and flexibility is often warranted to prevent unnecessary transition time between models or cold starts in serverless.

The comparison reveals two successful but distinct philosophies for AI reasoning implementation. DeepSeek R1 represents specialized excellence - a dedicated reasoning system that consistently provides transparent, deep analysis at the cost of speed and flexibility. DeepSeek V3.1 embodies adaptive intelligence - a hybrid system that dynamically balances reasoning depth with practical efficiency based on user needs and query complexity.

The transition from V3-0324 to V3.1 also involved changes in model sizing and context handling. V3-0324 maintained the 685B total parameter count of the original V3, while V3.1 lists 671B total parameters, 37B activated parameters, and a 128K context length. For those on a budget who want to run the model on, say, an 8xH100 NVL pod in 8-bit, where the weights would otherwise occupy about 685 GB, this leaves a not insignificant amount of extra room for context. Inference patterns also differ between V3.1 and R1: V3.1's hybrid design lets you spend reasoning compute only on the queries that need it, while R1 always pays the full reasoning overhead.
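To put rough numbers on that headroom, here is a minimal sketch of the weight-memory arithmetic. It assumes 1 byte per parameter at 8-bit precision and 94 GB of memory per H100 NVL GPU; both are assumptions for illustration, the function name is made up for this post, and KV cache and activations are not counted.

```python
def weight_memory_gb(params_billion: float, bits_per_param: int) -> float:
    """Approximate memory for the weights alone, in GB (1 GB = 1e9 bytes)."""
    return params_billion * bits_per_param / 8


POD_CAPACITY_GB = 8 * 94  # assumption: 8x H100 NVL at 94 GB each

for label, params in [("685B", 685), ("671B", 671)]:
    weights = weight_memory_gb(params, bits_per_param=8)
    headroom = POD_CAPACITY_GB - weights
    print(f"{label}: {weights:.0f} GB of weights, {headroom:.0f} GB left over")
```

Under these assumptions, the difference between the two parameter counts is about 14 GB of extra space for context on the same pod.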

The performance improvements from V3-0324 to V3.1 are substantial across multiple domains. In mathematical reasoning, on AIME 2024 (Pass@1), V3.1 scores 66.3 in non-thinking mode and 93.1 in thinking mode, compared with 59.4 for V3-0324. Code performance improves just as sharply: on LiveCodeBench (2408-2505) (Pass@1), V3.1 scores 56.4 in non-thinking mode and 74.8 in thinking mode, versus 43.0 for V3-0324.

The agent capabilities demonstrate the practical impact of these changes. On SWE Verified (Agent mode), V3.1 scores 66.0 compared to 45.4 for V3-0324, a relative improvement of roughly 45% in software engineering tasks. This suggests that the hybrid design and enhanced tool calling provide tangible benefits for complex, multi-step reasoning tasks.
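For anyone who wants to check the percentages, here is a short sketch that computes the relative gain for each benchmark quoted above. The scores are copied from the text; the helper name is just for illustration.

```python
def relative_gain(new: float, old: float) -> float:
    """Relative improvement of `new` over `old`, in percent."""
    return (new - old) / old * 100


# (benchmark and mode, V3.1 score, V3-0324 score), as quoted in this post
scores = [
    ("AIME 2024, non-thinking", 66.3, 59.4),
    ("AIME 2024, thinking", 93.1, 59.4),
    ("LiveCodeBench, non-thinking", 56.4, 43.0),
    ("LiveCodeBench, thinking", 74.8, 43.0),
    ("SWE Verified, agent mode", 66.0, 45.4),
]

for name, new, old in scores:
    print(f"{name}: +{relative_gain(new, old):.1f}%")
```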

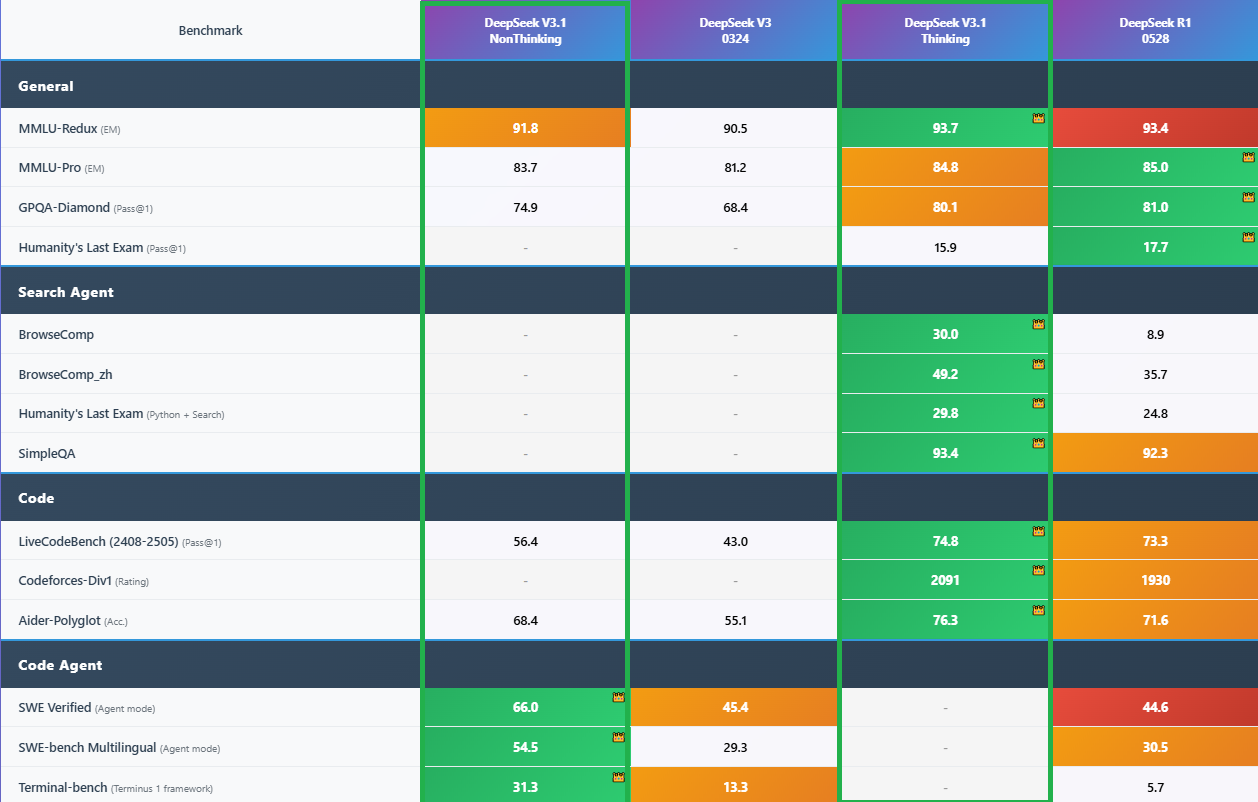

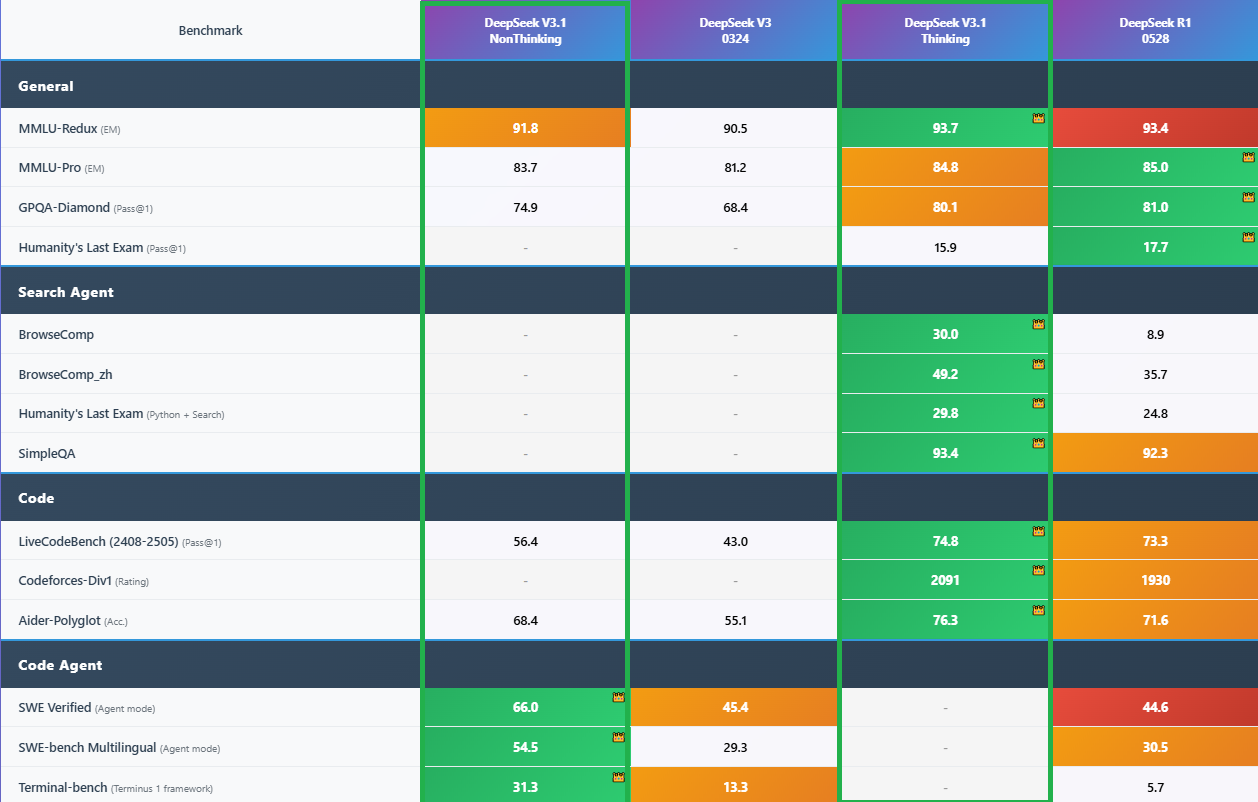

Here’s how the model shapes up compared to previous iterations in thinking and non-thinking modes:

As always, the general rule for loading an LLM at full weights is 2 bytes per parameter (16-bit precision), plus 10% for context and cache. So you could be looking at approximately 1500 GB of memory required for this model. This will require an Instant Clusters setup; we’ve previously gone into setting up Kimi K2 on our YouTube channel, and you can use the same process to run the new DeepSeek in a cluster.
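Here is that rule of thumb as a quick back-of-the-envelope sketch. The 80 GB per GPU figure is an assumption for illustration (swap in whatever cards your cluster uses), and the function names are made up for this post.

```python
import math


def full_precision_memory_gb(params_billion: float, overhead: float = 0.10) -> float:
    """2 bytes per parameter (16-bit weights) plus a fractional overhead for context and cache."""
    return params_billion * 2 * (1 + overhead)


def gpus_needed(total_gb: float, gpu_memory_gb: float) -> int:
    """Smallest number of GPUs whose combined memory covers the estimate."""
    return math.ceil(total_gb / gpu_memory_gb)


estimate = full_precision_memory_gb(671)
print(f"~{estimate:.0f} GB total, {gpus_needed(estimate, 80)} GPUs at 80 GB each")
```

Since a single node typically tops out at 8 GPUs, an estimate of this size is what pushes the deployment onto multiple nodes.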

For more budget-oriented setups, you can always use a GGUF quantization such as those provided by Unsloth; we’ve also got a video on how to get those up and running using KoboldCPP.

(FWIW, one amusing but fairly on the nose rule of thumb for quantizations is ‘the number of bits is how many hours of sleep the model got last night.’)

The architectural patterns established in V3.1 (hybrid reasoning modes, custom precision formats, and enhanced agent capabilities) likely preview the direction of next-generation language models. Rather than scaling parameters indefinitely, the focus appears to be shifting toward architectural efficiency, specialized capabilities, and hardware optimization. We’ve already seen this in the industry-wide shift away from dense models: most of the large closed-source models are known or theorized to be MoE models, and the same is doubly true in the open-source community, where very large dense models such as Llama-3 405B seem to be falling out of favor.

Efficiency also matters for cost. We’ve written before about the importance of speed when it comes to LLM inference: faster models incur less GPU time, and thus less billing, which is especially important in a serverless environment where you pay per second.

We’re super excited to see what you create with this new model! Feel free to hop onto our Discord if you have any questions.

DeepSeek V3.1 introduces a breakthrough hybrid reasoning architecture that dynamically toggles between fast inference and deep chain-of-thought logic using token-controlled templates—enhancing performance, flexibility, and hardware efficiency over its predecessor V3-0324. This update positions V3.1 as a powerful foundation for real-world AI applications, with benchmark gains across math, code, and agent tasks, now fully deployable on RunPod Instant Clusters.

