Today, we're announcing an integration between Runpod and dstack, an open-source orchestration engine that aims to simplify the development, training, and deployment of AI models while leveraging the open-source ecosystem.

While dstack shares a number of similarities with Kubernetes, it is more lightweight and focuses entirely on training and deploying AI workloads. With dstack, you describe workloads declaratively and run them via the CLI. It supports development environments, tasks, and services.

To use Runpod with dstack, you only need to install dstack (for example, with `pip install "dstack[all]" -U`) and configure it with your Runpod API key.

Then, specify your Runpod API key in `~/.dstack/server/config.yml`.
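A minimal sketch of this step, assuming a Bash shell. The helper `write_runpod_config` is hypothetical (it is not part of dstack); it writes a `config.yml` with a single `main` project that uses the Runpod backend, following the layout described in the dstack server documentation. Field names can change between dstack releases, so treat this as an illustration and check the current backend reference before relying on it. Note that it overwrites any existing `config.yml` in the target directory.

```shell
#!/usr/bin/env bash
# Sketch: write a dstack server config that enables the Runpod backend.
# write_runpod_config is a hypothetical helper, not part of dstack.
#
# Usage: write_runpod_config API_KEY [CONFIG_DIR]
#   CONFIG_DIR defaults to ~/.dstack/server, where the dstack server
#   looks for config.yml. Any existing config.yml there is overwritten.
write_runpod_config() {
  if [ -z "${1:-}" ]; then
    echo "usage: write_runpod_config API_KEY [CONFIG_DIR]" >&2
    return 1
  fi
  local api_key="$1"
  local config_dir="${2:-$HOME/.dstack/server}"
  local config_file="$config_dir/config.yml"

  mkdir -p "$config_dir" || return 1

  # Unquoted delimiter: ${api_key} is expanded into the file.
  cat > "$config_file" <<EOF || return 1
projects:
- name: main
  backends:
  - type: runpod
    creds:
      type: api_key
      api_key: "${api_key}"
EOF

  # The file stores a secret, so make it readable only by its owner.
  chmod 600 "$config_file"
}
```

For example, with the key exported in an environment variable (the name `RUNPOD_API_KEY` is an assumption), `write_runpod_config "$RUNPOD_API_KEY"` writes the file to the default location. Taking the directory as an optional argument keeps the helper easy to try out against a scratch directory first.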

Once it's configured, start the dstack server by running `dstack server`.

Now, you can use dstack's CLI (or API) to run and manage workloads on Runpod.

dstack supports three types of configurations: dev-environment (for provisioning interactive development environments), task (for running training, fine-tuning, and various other jobs), and service (for deploying models).
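To make the first of these types concrete, here is a minimal sketch of a dev-environment configuration, written to a file from the shell. The file name `dev.dstack.yml`, the Python version, and the 24GB GPU requirement are hypothetical choices for illustration; check the dstack configuration reference for the fields your version supports.

```shell
#!/usr/bin/env bash
# Sketch: write a minimal dstack dev-environment configuration.
# File name, Python version, and GPU size are illustrative assumptions.
cat > dev.dstack.yml <<'EOF'
type: dev-environment
# Python version for the environment.
python: "3.11"
# IDE that dstack prepares the environment for.
ide: vscode
resources:
  # GPU requirement in dstack's GPU spec syntax (here: 24GB of GPU memory).
  gpu: 24GB
EOF
```

The heredoc uses a quoted delimiter (`'EOF'`), so the shell writes the YAML verbatim without expanding anything inside it.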

Here's an example of a task:

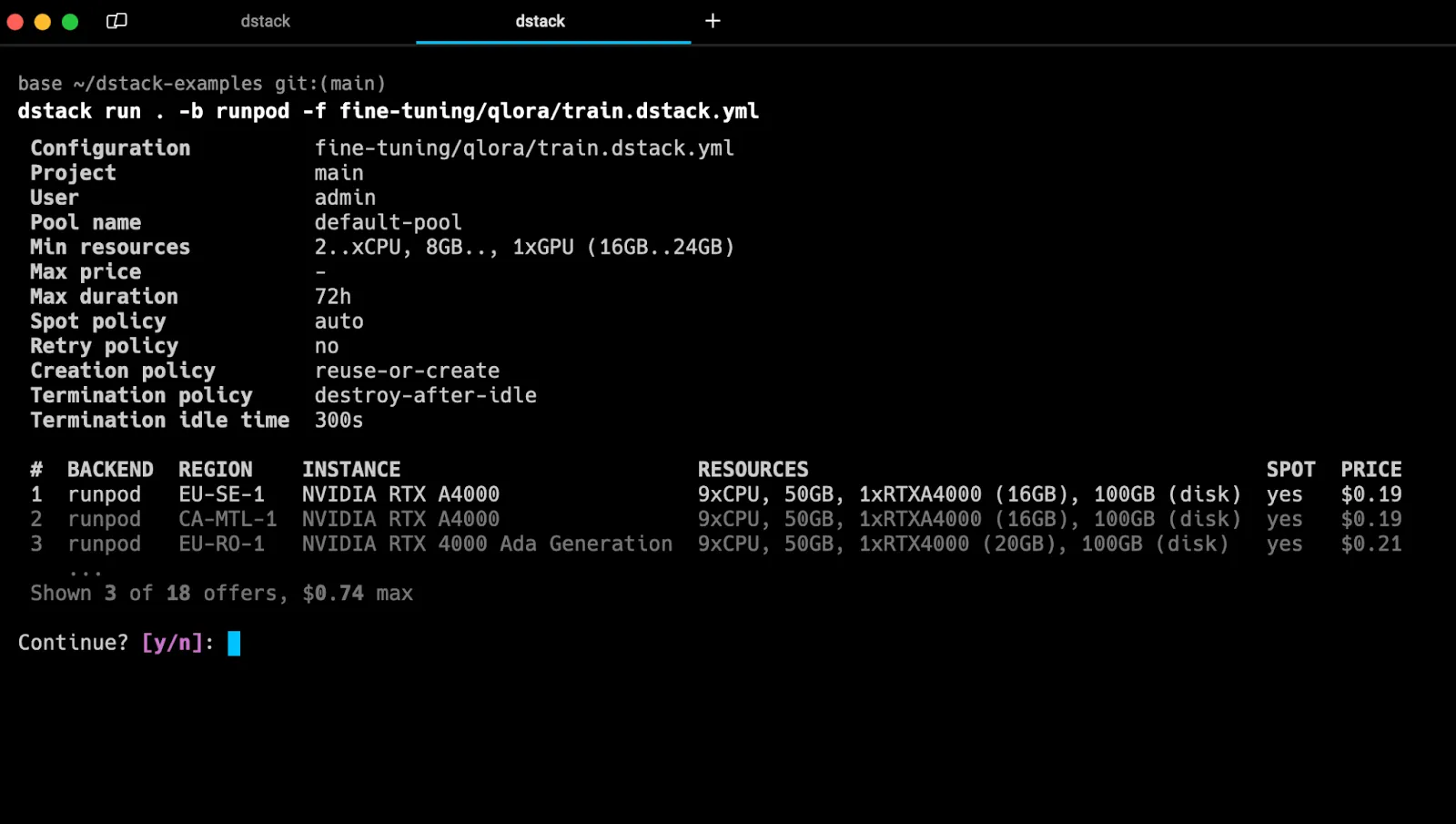

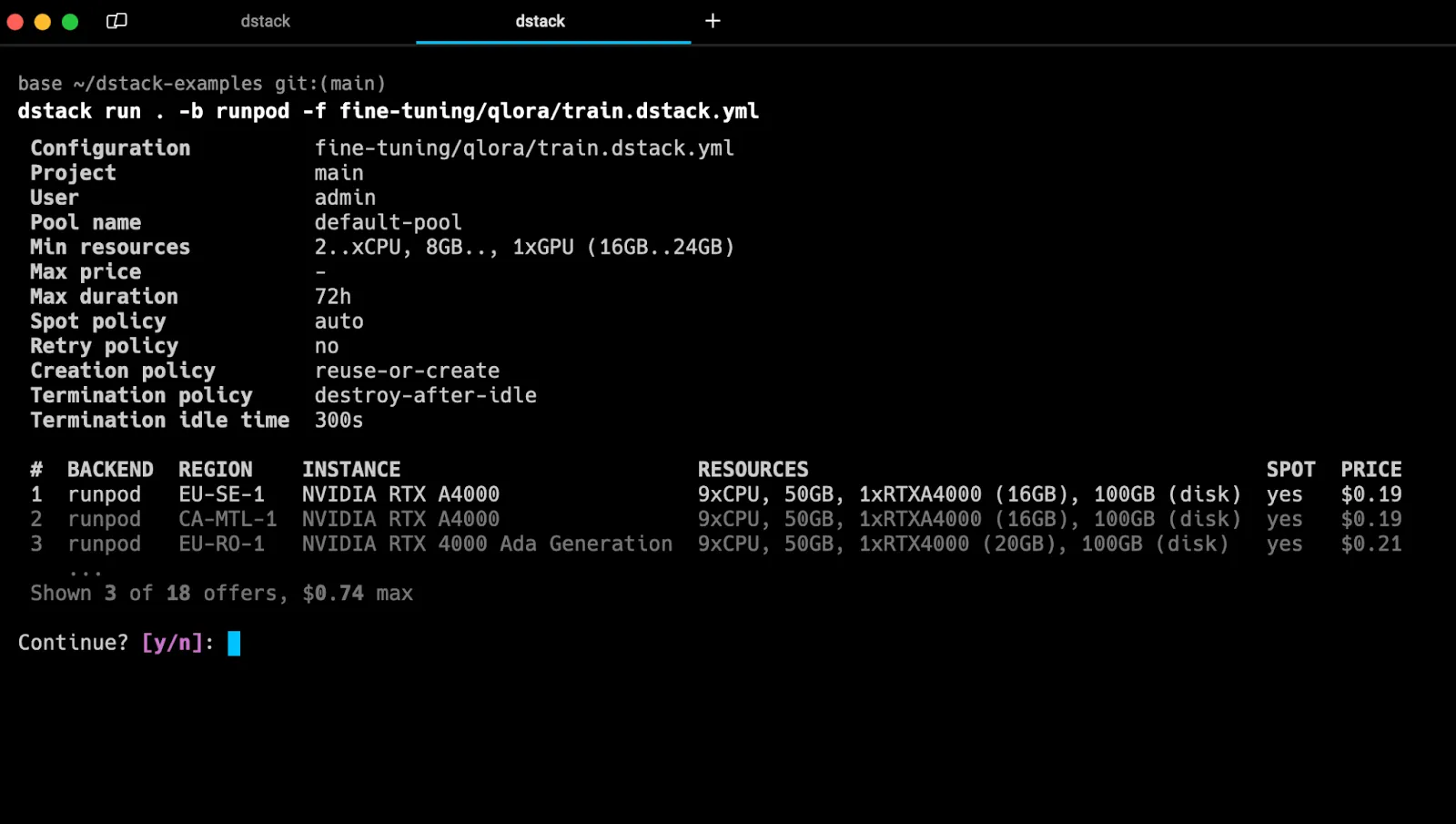
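As a text-based sketch of a task configuration (not a transcription of the images above), a minimal task might look like the following. The file name `train.dstack.yml`, the scripts `requirements.txt` and `train.py`, and the GPU size are all hypothetical placeholders.

```shell
#!/usr/bin/env bash
# Sketch: write a minimal, hypothetical dstack task configuration.
# train.dstack.yml, requirements.txt, train.py, and the GPU size are placeholders.
cat > train.dstack.yml <<'EOF'
type: task
python: "3.11"
# Commands run in order when the task starts.
commands:
  - pip install -r requirements.txt
  - python train.py
resources:
  # GPU requirement in dstack's GPU spec syntax (here: 24GB of GPU memory).
  gpu: 24GB
EOF
```

Depending on your dstack version, the file is then submitted with `dstack apply -f train.dstack.yml` (recent releases) or `dstack run . -f train.dstack.yml` (older releases); `dstack --help` lists the commands your installed version supports.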

Once defined, the configuration can be run via the dstack CLI.

dstack then automatically provisions resources on Runpod and takes care of the rest, such as uploading your code and forwarding ports.

You can find more examples of training and deployment configurations here. We'd love to hear about your deployments in either the dstack or the Runpod Discord server!

Start Up a dstack installation on Runpod

Learn how to use dstack, a lightweight open-source orchestration engine, to declaratively manage development, training, and deployment workflows on Runpod.
