I'm gonna be straight with you - the RTX 5090 isn't just another GPU launch. It's NVIDIA basically saying "screw it, let's see how fast we can make this thing go" and then slapping a price tag that'll make your wallet file for bankruptcy.

After weeks of testing (and explaining to my wife why our gaming room now sounds like a jet engine), I've got some thoughts. This thing is simultaneously amazing and absolutely bonkers, and I'm not sure who it's actually for.

Let me break down what I found, including whether dropping two grand on a graphics card makes any sense in 2025 - spoiler alert: it probably doesn't, but here we are anyway.

Here's What We're Covering (Feel Free to Skip Around):

- TL;DR: The Essential Takeaways

- NVIDIA RTX 5090: The Card That Broke My Power Bill

- 4 Smart Alternatives That Won't Require a Second Mortgage

- Frequently Asked Questions

- Final Thoughts: Should You Actually Buy This Thing?

TL;DR: The Essential Takeaways

The RTX 5090 is about 27-35% faster than the RTX 4090 but costs 25% more at $1,999 MSRP. And that's if you can actually find one at that price - good luck with that. I'm seeing these listed for $3,500 on eBay already.

That 32GB of GDDR7 memory is genuinely impressive for AI stuff and professional work. But the power consumption? Holy crap. This thing pulls 575W, which is basically like having another gaming PC running inside your gaming PC. My UPS kept beeping during gaming sessions until I realized this card pulls more power than my microwave.

The memory temps had me genuinely worried I was going to fry a $2,000 card - we're talking 89-90°C during heavy loads. Cost-per-frame is essentially the same as the two-year-old RTX 4090, which is... not great.

For most people, cloud GPU services make way more sense than actually buying this thing.

RTX 5090 Reality Check Table

NVIDIA RTX 5090: The Card That Broke My Power Bill

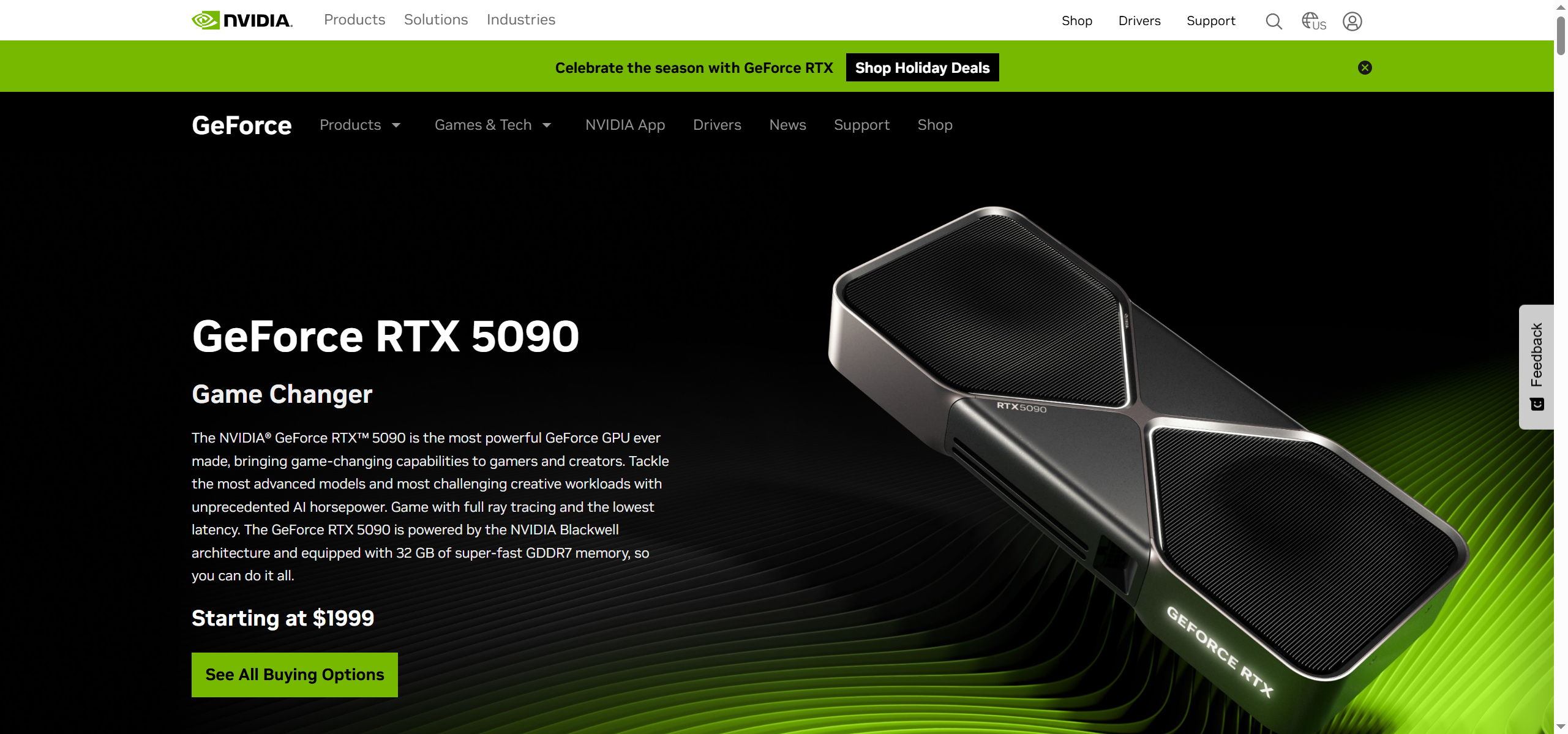

What This Beast Actually Is

The RTX 5090 is NVIDIA taking their Blackwell architecture and cranking everything to 11. We're talking 33% more cores than the RTX 4090 and enough VRAM to make your entire system jealous. This thing has more RAM than most people's computers - 32GB of the fastest memory you can buy.

The box is stupidly heavy - I thought they shipped me a brick by mistake. Installation took forever because I had to rearrange half my cable management, and now my cat keeps sleeping next to the case because it's basically a space heater.

The Good Stuff That Actually Matters

NVIDIA did pack some genuinely cool tech into this card. The new DLSS 4 can generate up to three fake frames for every real one, which sounds like cheating but works surprisingly well. And thanks to that massive memory pool, I loaded up a 4K video project that would normally choke my old card, and it just... handled it. Like it was nothing.

The cooling is actually impressive engineering - they managed to fit all this power into a 2-slot card when previous flagship cards needed 3-4 slots. Though "2-slot" is generous when you factor in the power connectors that stick out like angry tentacles.

Where the RTX 5090 Actually Shines

Gaming That'll Melt Your Face Off

The numbers don't lie - this thing is 27-35% faster than the RTX 4090 in most games, with some titles hitting 50% improvements. Ray tracing performance jumps 31-37% at 4K. I spent more time benchmarking than actually gaming, which is probably missing the point, but the frames are definitely there.

Professional Workloads That Actually Matter

That 32GB VRAM eliminates every memory bottleneck I could throw at it. 8K timeline scrubbing without proxy files? No problem. AI training that used to take days? Now it takes hours. If you're making money with your GPU, this thing pays for itself.

Cooling That Doesn't Suck

Despite pulling enough power to run a small appliance, the Founders Edition keeps GPU temps around 72°C. It's not silent, but it's not the jet engine I expected either.

Future-Proofing for Days

32GB means you won't hit memory limits anytime soon. Even if games suddenly decide they need ridiculous amounts of VRAM, you're covered.

The Reality Check Nobody Wants to Hear

Pricing That Makes No Sense

$1,999 MSRP is just the starting point. Real-world pricing is hitting $2,500-$3,000+ because of course it is. I kept refreshing the checkout page for like 10 minutes before hitting 'buy' - two grand is two grand, you know?

Power Draw That'll Trip Your Breakers

575W of sustained draw from the GPU alone is enough to matter for household wiring. I'm not kidding - in older homes where one breaker feeds the whole room, extended gaming sessions on top of everything else plugged in can trip it. My power strip started making weird noises, and I had to upgrade my UPS because this thing was overloading it.
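If you want to sanity-check your own setup, here's a rough back-of-the-envelope sketch. Only the 575W figure comes from this review. The 15A/120V circuit, the 80% rule of thumb for continuous loads, the 90% PSU efficiency, and the other wattages are all assumptions you should swap for your own numbers.

```python
# Rough check of how much of a household circuit a 5090 build uses.
# Assumptions (not from the review): a 15 A / 120 V North American circuit,
# the common 80% rule of thumb for continuous loads, and a ~90% efficient PSU.

CIRCUIT_AMPS = 15
CIRCUIT_VOLTS = 120
CONTINUOUS_FACTOR = 0.80
PSU_EFFICIENCY = 0.90


def wall_draw_watts(component_watts: float, efficiency: float = PSU_EFFICIENCY) -> float:
    """Power pulled from the outlet for a given DC load inside the PC."""
    return component_watts / efficiency


def continuous_budget_watts(amps: float = CIRCUIT_AMPS, volts: float = CIRCUIT_VOLTS) -> float:
    """Sustained load the circuit is comfortable with under the 80% rule."""
    return amps * volts * CONTINUOUS_FACTOR


gpu = 575            # W, the 5090's rated draw from this review
rest_of_pc = 250     # W, hypothetical CPU + board + drives + fans
other_devices = 300  # W, hypothetical monitor, speakers, lamp on the same circuit

pc_at_wall = wall_draw_watts(gpu + rest_of_pc)
total = pc_at_wall + other_devices
budget = continuous_budget_watts()

print(f"PC at the wall: {pc_at_wall:.0f} W")
print(f"Circuit total:  {total:.0f} W of a {budget:.0f} W continuous budget")
print(f"Headroom left:  {budget - total:.0f} W")
```

With these made-up numbers the PC alone fits comfortably, but there's only a couple hundred watts of headroom left. Put a space heater or window AC on the same breaker and you're over budget, which is exactly the older-home scenario.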

Memory Temps That Keep Me Up at Night

Those 89-90°C memory temperatures during testing had me genuinely worried. Half the time I felt like I was beta testing NVIDIA's thermal limits rather than enjoying a finished product.

Good Luck Finding One

Launch availability is going to be a nightmare. Even if you've got the cash, actually getting one at MSRP is like winning the lottery. I've seen people setting up stock alerts like they're trying to buy concert tickets.

How It Actually Performs in Real Life

Gaming Performance: 4.5/5

At 4K where this card belongs, it's genuinely impressive. The performance gains diminish at lower resolutions because your CPU becomes the bottleneck, but for 4K gaming, nothing else comes close. Though let's be honest - most of us are just gonna run the same five games we always play.

Value Proposition: 2/5

This is where reality hits hard. Cost per frame is basically the same as the RTX 4090's from two years ago: you pay 25% more at MSRP for 27-35% more frames, so a whole new generation barely moved the value needle. And that's before street pricing. It's like upgrading from a Honda Civic to a Ferrari to drive to the grocery store.
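Here's the cost-per-frame math as a quick sketch, if you want to plug in your own prices. I'm using the 4090's $1,599 launch MSRP as the baseline and the 27-35% uplift range from my testing. The $2,500 street price is just an illustrative figure from the range I've been seeing.

```python
# Relative cost per frame, using the RTX 4090 as the baseline (1.0).
# Prices are launch MSRPs; the uplift range is the 27-35% quoted in this review.

MSRP_4090 = 1599  # USD
MSRP_5090 = 1999  # USD


def relative_cost_per_frame(price: float, baseline_price: float, speedup: float) -> float:
    """Cost per frame relative to the baseline card (below 1.0 = better value)."""
    return (price / baseline_price) / speedup


for uplift in (0.27, 0.35):
    ratio = relative_cost_per_frame(MSRP_5090, MSRP_4090, 1 + uplift)
    print(f"{uplift:.0%} faster at MSRP: {ratio:.2f}x the 4090's cost per frame")

# Illustrative street price: the value picture flips once you pay over MSRP.
street = relative_cost_per_frame(2500, MSRP_4090, 1.27)
print(f"27% faster at $2,500: {street:.2f}x the 4090's cost per frame")
```

At MSRP the ratio lands just under 1.0, so you're paying almost exactly the same per frame as a 4090 buyer did. At $2,500 it climbs well above 1.0, meaning each frame actually costs more than it did last generation.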

Power Efficiency: 3/5

The 125W increase in power consumption over the 4090 (575W vs 450W) comes with higher idle draw too (46W vs 28-29W) and more heat in your room. Summer gaming sessions are gonna be rough without good AC.

Memory & Future-Proofing: 5/5

That 32GB with 78% more bandwidth than the RTX 4090 is genuinely impressive. This is enough VRAM to hold my entire Steam library... if games actually used that much.

Build Quality: 4.5/5

The 2-slot design with excellent thermal management is solid engineering, but those concerning memory temperatures keep me from giving it a perfect score. The RGB lighting is nice, but it's like having a nightlight that costs $2,000.

Professional Use: 5/5

For AI workloads, content creation, and research, this thing dominates. Up to 3x faster than RTX 4090 in some AI tasks. If you're making money with GPU compute, the math works out.

What People Are Actually Saying

Professional reviewers are conflicted. Tom's Hardware gave it 4/5 stars but specifically called out thermal concerns and noted that "drivers could use a bit more time baking in Jensen's oven." GamersNexus praised the engineering but tore apart NVIDIA's misleading marketing showing "2x performance" improvements that rely on comparing different DLSS settings.

My Discord buddies are either jealous or think I've lost my mind - no in-between. The PC building community is split between "holy grail" and "capitalism gone wrong." Even the tech YouTubers seem conflicted about this thing.

One comment that stuck with me: "I'm still tempted to get one since I'd like to upgrade from the 3090, though I really have no reason to." That pretty much sums up the entire RTX 5090 experience.

The Economics Are Brutal

The $1,999 MSRP is just fantasy pricing. Based on every other flagship launch, expect immediate sellouts followed by scalper pricing. Real availability will likely mean prices between $2,500 and $3,000+ for months.

This puts the RTX 5090 in a weird spot - too expensive for enthusiasts, but potentially cost-effective for professionals who can write it off as a business expense. Part of me wonders if I should've just gotten a 4090 and saved myself a thousand bucks.

Where to Actually Buy One (Good Luck)

Launch day availability will be brutal across all retailers. NVIDIA's official store will have Founders Edition cards, while Best Buy, Amazon, and Newegg will stock both FE and partner cards.

AIB partners will have their own versions, but availability will be equally constrained. I'd recommend setting up stock alerts and being prepared to buy immediately when they drop. It's like trying to buy concert tickets, except the concert costs two grand.

4 Smart Alternatives That Won't Require a Second Mortgage

NVIDIA RTX 4090: The Sensible Choice

Still crushes 4K gaming, just 20-30% slower than the RTX 5090. The $1,600 MSRP (when you can find it) offers better value, though finding one at retail price is still a challenge.

Lower 450W power consumption means it won't murder your electric bill or require a PSU upgrade. Best for people who want flagship performance without the RTX 5090's extreme pricing. The 24GB VRAM handles current games just fine, and honestly, you probably won't notice the difference in most titles.

AMD RX 7900 XTX: The Budget 4K Option

Competitive performance at $870-$900 makes this the value play. Ray tracing lags behind NVIDIA, but for regular gaming, it delivers solid 4K results at a fraction of the cost.

Perfect if you prioritize resolution over ray tracing and want something you can actually buy without camping retailer websites. Plus, AMD cards are usually available when NVIDIA's aren't.

NVIDIA RTX 4080 Super: The Goldilocks Card

About 70-80% of RTX 5090 performance at $999 MSRP with much better availability. The 16GB VRAM handles current games well, though it might become limiting down the road.

With the RTX 5080 launching soon, this represents solid middle-ground performance. Great for 1440p and entry-level 4K without the flagship price tag.

Runpod GPU Cloud: The Smart Money Play

Here's where things get interesting. Instead of dropping $2,500+ on hardware that sits idle most of the time, Runpod lets you rent RTX 5090s, H100s, and other cutting-edge GPUs on-demand.

The economics make way more sense - pay only for GPU time you actually use. No upfront capital, no cooling infrastructure, no maintenance headaches. I've seen professionals realize that cloud GPU access beats owning these expensive cards, especially with current availability and inflated pricing.

For AI research, content creation, and businesses testing GPU performance, this approach provides better flexibility and cost-effectiveness than ownership.
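To put a number on "sits idle most of the time," here's a minimal rent-vs-buy sketch. The hourly rate and electricity price below are placeholders I made up for illustration, not quoted Runpod pricing, so check current rates before drawing conclusions. Resale value is ignored to keep it simple.

```python
# Break-even between buying a card and renting GPU time by the hour.
# HOURLY_RATE is a placeholder, not a quoted Runpod price; check current pricing.

PURCHASE_PRICE = 2500.0   # USD, the street price mentioned above
HOURLY_RATE = 0.90        # USD per GPU-hour, hypothetical
POWER_KW = 0.575          # the 5090's rated draw, in kW
ELECTRICITY_RATE = 0.15   # USD per kWh, hypothetical


def break_even_hours(price: float, hourly_rate: float, power_kw: float, kwh_rate: float) -> float:
    """Hours of use at which owning becomes cheaper than renting.

    Owning costs the purchase price plus electricity per hour;
    renting costs the hourly rate. Resale value is ignored.
    """
    saving_per_hour = hourly_rate - power_kw * kwh_rate
    if saving_per_hour <= 0:
        return float("inf")  # renting never costs more per hour than the power bill
    return price / saving_per_hour


hours = break_even_hours(PURCHASE_PRICE, HOURLY_RATE, POWER_KW, ELECTRICITY_RATE)
print(f"Break-even after about {hours:,.0f} GPU-hours")
print(f"That is {hours / (2 * 365):.1f} years at 2 hours a day")
print(f"or {hours / (8 * 365):.1f} years at 8 hours a day")
```

With these placeholder numbers, a couple of hours a day takes years to pay off the card, while a full workday of daily use gets there in about a year. That's the whole argument: ownership only wins when utilization is consistently high.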

Where to Find These Alternatives

RTX 4090s require patience and stock alerts across multiple retailers. Micro Center often has better in-store availability, while B&H Photo maintains reasonable pricing when stock appears.

AMD options enjoy much better availability through standard retail channels. AMD's direct store frequently has reference cards in stock.

Cloud GPU services through Runpod eliminate availability concerns entirely - you can deploy RTX 5090 instances within minutes rather than waiting months for hardware.

The Real-World Stuff Nobody Mentions

Beyond benchmarks, the RTX 5090 creates practical problems that marketing ignores. That 575W continuous draw can trip breakers in older homes during extended sessions. Cooling becomes critical - performance scales with case airflow and ambient temperature. Summer gaming without good AC risks thermal throttling.

The upgrade cycle economics get brutal at these prices. Depreciation accelerates when next-gen cards arrive, making resale values unpredictable. My rational brain says this is overkill. My gamer brain says 'but what if I need those extra frames?'

Frequently Asked Questions

Is upgrading from RTX 4090 worth it?

For most people? Hell no. The 27-35% performance improvement doesn't justify the cost difference, especially since the 4090 already crushes 4K gaming. Only consider this if you've got specific professional workloads that need the extra VRAM, or if you've got money burning a hole in your pocket.

What power supply do I need?

NVIDIA's spec sheet calls for a 1000W PSU, and I wouldn't go below that. The 575W GPU plus a high-end CPU can easily exceed 750W total system draw. Don't cheap out here - this card will find your PSU's limits.
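If you want to see where the 1000W figure comes from, here's a rough sizing sketch. Only the 575W GPU number is from this review. The CPU and "everything else" wattages, the 25% headroom, and the list of PSU sizes are my own assumptions, so treat the output as a starting point rather than a spec.

```python
# Rough PSU sizing: sum the component draw, then add headroom.
# Only the 575 W GPU figure comes from the review; the rest are hypothetical.

STANDARD_PSU_SIZES = (750, 850, 1000, 1200, 1600)  # W, common retail wattages


def recommended_psu(component_watts: dict[str, float], headroom: float = 0.25) -> int:
    """Smallest common PSU size covering total draw plus a headroom fraction."""
    target = sum(component_watts.values()) * (1 + headroom)
    for size in STANDARD_PSU_SIZES:
        if size >= target:
            return size
    raise ValueError(f"No standard PSU covers {target:.0f} W")


build = {
    "gpu": 575,             # RTX 5090 rated draw
    "cpu": 150,             # hypothetical high-end CPU under gaming load
    "everything_else": 50,  # hypothetical board, RAM, drives, fans
}

total = sum(build.values())
print(f"Estimated load: {total} W")
print(f"Suggested PSU:  {recommended_psu(build)} W")
```

The headroom is there because transient spikes and an aging PSU both eat into the nameplate number. If your CPU pulls a lot more than my placeholder, the same function will bump you to the next size up.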

Will prices drop after launch?

Based on history, expect inflated pricing for 6-12 months due to supply constraints. The RTX 4090 took nearly a year to hit stable MSRP pricing. Same story here, probably worse given the higher starting price.

Should I wait for RTX 5080?

Probably, yeah. The RTX 5080 will likely offer 80-85% of RTX 5090 performance at roughly half the price. Unless you specifically need maximum memory and performance, waiting makes financial sense for normal humans.

Final Thoughts: Should You Actually Buy This Thing?

The RTX 5090 represents a weird crossroads in GPU evolution. NVIDIA delivered exceptional engineering and performance, but the pricing pushes flagship cards into professional workstation territory. This creates a gap in the enthusiast market that may never get filled at reasonable prices.

For professionals in AI, content creation, and research, the RTX 5090's capabilities can justify the investment through productivity gains. For gamers and enthusiasts, the value proposition is increasingly questionable. A graphics card that costs more than some people's cars. What a time to be alive.

Do I recommend this card? Honestly, probably not. Am I keeping mine? Yeah, because I'm an idiot with expensive hobbies and I've already committed to this madness.

The future likely belongs to cloud-based GPU access for many use cases, with ownership reserved for users with consistent, high-utilization workloads. The RTX 5090 may be remembered as the card that finally pushed GPU pricing beyond mainstream accessibility.

Look, if you've got money burning a hole in your pocket and you absolutely need the best of everything, go for it. But if you're a normal person who just wants to play games at 4K, save your money and get something sensible. Your wallet will thank you, and honestly, you probably won't even notice the difference.

Whether this represents progress or a concerning trend depends on your perspective and bank account. What's certain is that the RTX 5090 sets a new benchmark for both performance and pricing that'll influence the entire industry. I'm honestly not sure who this card is actually for, but here we are, living in the timeline where graphics cards cost more than used cars.

Three months from now, will I even notice the difference from my old card? Probably not. But those benchmark numbers sure do look pretty.
